Meet Nvidia’s Ampere Architecture

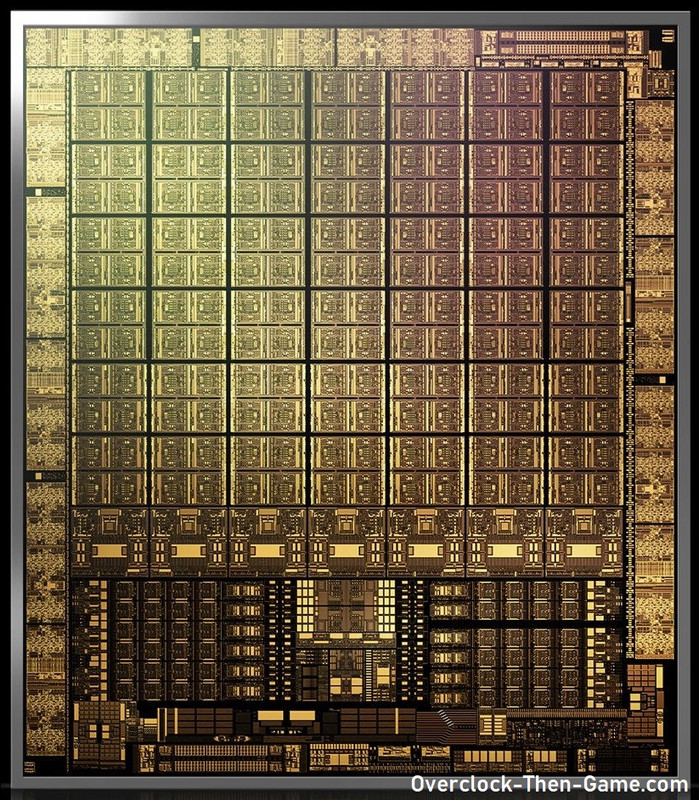

Fresh off its previous architecture, Turing (RTX 20 series), Nvidia is once again positioning itself for market leadership across the board. While Turing was a powerhouse fabricated on TSMC’s 12nm FinFET process with 18.6 billion transistors, Ampere uses Samsung’s 8nm process and packs up to 28.3 billion transistors (GA102) into the consumer-focused RTX GPUs (e.g. RTX 3070/3080/3090), while the enterprise and professional GPUs use TSMC’s 7nm process (e.g. the A100, with 54 billion transistors). Nvidia’s latest flagship and workstation GPUs, the RTX 3080 and RTX 3090 respectively, use the GA102, while the RTX 3070 uses the GA104. All of the GA10x GPUs include several new features, such as more numerous and revised Streaming Multiprocessors, second-generation RT (Ray Tracing) Cores, third-generation Tensor Cores, a new reference design for cooling the GPU, AV1 decoding and, on the RTX 3080 and 3090, GDDR6X memory. The biggest discussion in gaming right now, and over the past few years, is Ray Tracing, so I will start there.

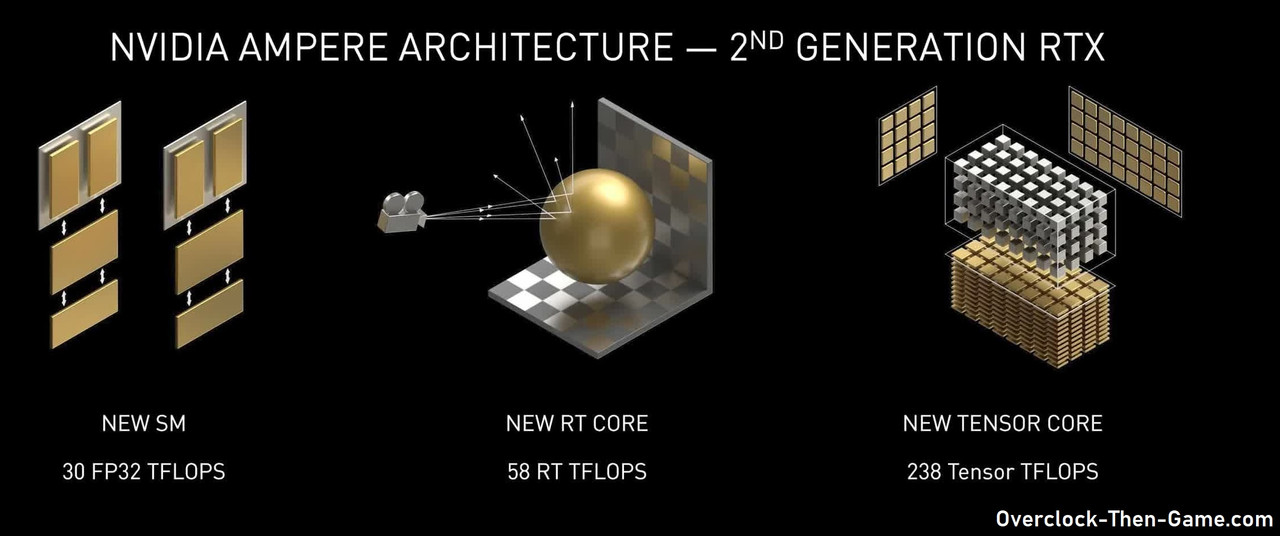

2nd Generation Ray Tracing

Ray Tracing has been all the talk lately, and with more games supporting the technology, Nvidia is going all in on it. Ray Tracing has always been very demanding to compute, but Nvidia keeps pushing the hardware further to process the massive amounts of data involved. As games continue to rely on both rasterization and Ray Tracing for different workloads, the Ampere architecture will allow higher performance and open up more possibilities.

DLSS 2.0 & Tensor Core Improvements

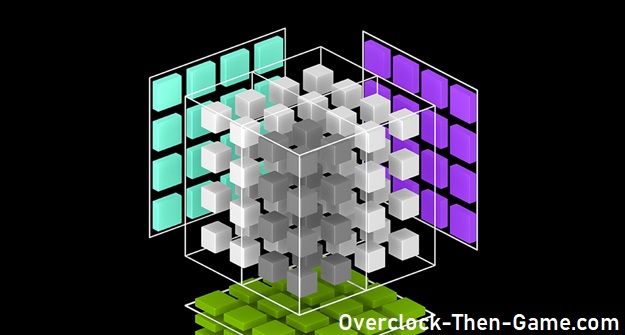

DLSS (Deep Learning Super Sampling) has also gained a lot of attention and traction since Nvidia first rolled out DLSS 1.0, withdrew it twice from the market (1.0, then 2.0) and released a fixed DLSS 2.0 for the third time. Nvidia has been working hard to perfect the technology. I will try to explain DLSS with a very basic description: DLSS lets RTX GPUs use a DNN (Deep Neural Network) that takes multiple lower-resolution renders as a reference, uses AI to create a new image based on those previously captured reference renders, and then upscales that new image to the native resolution. It seemed counterintuitive at first, and that was my initial belief about this technology years ago, but I've learned over the years that there is more to DLSS. This technology will allow more graphically demanding titles to produce playable FPS at higher resolutions such as 1440p, 4K, 5K and 8K with little loss of image fidelity. Now with Ray Tracing involved, this will be even more crucial for playable frame rates at higher resolutions.
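To get a feel for why rendering low and upscaling helps frame rates, here is a small back-of-the-envelope sketch of how many pixels the GPU avoids shading. The per-axis scale factors (one half and two thirds) are my own illustrative assumptions, not figures from Nvidia or from this article, and the function names are made up for the example.

```python
# Illustrative only: the per-axis scale factors are assumptions,
# not values taken from the article.

def internal_resolution(out_w, out_h, axis_scale):
    """Resolution the game renders at before the image is upscaled."""
    return round(out_w * axis_scale), round(out_h * axis_scale)


def pixel_savings(out_w, out_h, axis_scale):
    """Fraction of output pixels that are not rendered natively."""
    in_w, in_h = internal_resolution(out_w, out_h, axis_scale)
    return 1 - (in_w * in_h) / (out_w * out_h)


if __name__ == "__main__":
    for label, scale in [("half per axis", 0.5), ("two thirds per axis", 2 / 3)]:
        w, h = internal_resolution(3840, 2160, scale)
        saved = pixel_savings(3840, 2160, scale)
        print(f"4K output, {label}: render at {w}x{h}, {saved:.0%} fewer pixels")
```

Halving each axis means the GPU only renders a quarter of the pixels, which is where the headroom for Ray Tracing comes from. The hard part, reconstructing a convincing full-resolution image, is what the neural network is for.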

GDDR6X

Normally AMD is the first company to bring new memory tech to the GPU market, but Nvidia has released the first GPUs to use GDDR6X. Nvidia seems to be on a roll as a market leader, having brought hardware-based Ray Tracing and AI tech to consumer-grade GPUs and now GDDR6X. This new technology is exciting since it allows the RTX 3080 to deliver 760 GB/s over a 320-bit memory interface, while the RTX 3090 peaks at 936 GB/s over a 384-bit interface. While the RTX 3080 and 3090 use GDDR6X, the RTX 3070 has a 256-bit memory bus and uses GDDR6.

GDDR6X transmits two bits of data per symbol, twice as many as GDDR6, using a new PAM4 signaling technique. Instead of the typical binary 0 or 1 on each rising and falling clock edge, PAM4 uses four distinct signal levels, each representing one of four two-bit values (00/01/10/11). This new technique was needed to feed the Ray Tracing Cores and Tensor Cores (Deep Neural Network, AI, DLSS) as well as to help gamers enjoy high-resolution games in 4K that require complex renders. There is a ton of data that needs to be crunched, and GDDR6X will ensure that the GPU isn’t starved for data.

In addition to GDDR6X, Nvidia has spoken about something named "EDR" on the GA10x series. EDR is short for "Error Detection and Replay". This technology will allow gamers and overclockers to realize when they have hit their peak performance, but there is a catch: the GDDR6X memory subsystem resends the bad data, which lowers the effective memory bandwidth. The lower bandwidth results in lower performance, so overclockers will know that they have already hit their maximum. It is worth noting that CRC has been a standard part of GDDR since GDDR5. There are two ways that the error detection can prevent corrupted data, and the latest can compress two separate 8-bit values for validation.
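To make the GDDR6X bandwidth figures and the PAM4 idea a bit more concrete, here is a short sketch. The per-pin data rates (19, 19.5 and 14 Gbps) are not stated in this article; I am taking them from Nvidia's published specifications for these cards. The PAM4 level mapping is an arbitrary illustration, not the actual GDDR6X encoding.

```python
def bandwidth_gb_s(bus_width_bits, gbps_per_pin):
    """Peak bandwidth in GB/s: pins * per-pin rate (Gbit/s) / 8 bits per byte."""
    return bus_width_bits * gbps_per_pin / 8


# PAM4 uses four signal levels, each carrying one 2-bit symbol.
# This ordering is an assumption made for illustration only.
PAM4_LEVELS = {"00": 0, "01": 1, "10": 2, "11": 3}


def pam4_encode(bits):
    """Split a bit string into 2-bit symbols and map each to a signal level."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    return [PAM4_LEVELS[bits[i:i + 2]] for i in range(0, len(bits), 2)]


def nrz_encode(bits):
    """Conventional two-level signaling: one bit per symbol."""
    return [int(b) for b in bits]


if __name__ == "__main__":
    print("RTX 3080:", bandwidth_gb_s(320, 19.0), "GB/s")
    print("RTX 3090:", bandwidth_gb_s(384, 19.5), "GB/s")
    print("RTX 3070:", bandwidth_gb_s(256, 14.0), "GB/s")

    data = "11010010"
    print("two-level symbols:", nrz_encode(data))
    print("PAM4 symbols:     ", pam4_encode(data))
```

The same eight bits take eight symbols with two-level signaling but only four with PAM4, which is the "two bits at a time" advantage in a nutshell.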
In previous memory overclocking scenarios, enthusiasts would overclock their GPU memory, and once they exceeded a certain threshold, errors would occur (black screens, driver crashes or resets, artifacts and weird pixels on screen, OS crashes, and so on). To prevent this, GDDR6X EDR actively checks the CRC to ensure that the data sent and received is correct, and if it can’t be verified, the data is resent and the effective memory bandwidth drops, as explained earlier. I’m sure the ultimate goal is to prevent users from damaging their GPUs in the long run, but this is a pretty cool feature that will help overclockers. This tech will hopefully keep gamers and overclockers from needlessly pushing memory overclocks further, and possibly lead them to dial back some of their memory overclock settings when they see diminishing returns.
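The detect-and-replay behavior can be mimicked with a toy simulation. This is only a sketch under loud assumptions: I use Python's `zlib.crc32` as a stand-in for whatever CRC the memory actually uses, the 32-byte burst size and the error rates are invented, and `effective_bandwidth` is a hypothetical helper, not anything Nvidia exposes.

```python
import random
import zlib


def transfer_with_replay(bursts, error_rate, rng):
    """Send each burst until its CRC checks out; return total transmissions."""
    if not 0 <= error_rate < 1:
        raise ValueError("error_rate must be in [0, 1)")
    transmissions = 0
    for payload in bursts:
        expected = zlib.crc32(payload)
        while True:
            transmissions += 1
            received = bytearray(payload)
            if rng.random() < error_rate:
                # Flip a single bit to model an unstable memory overclock.
                received[rng.randrange(len(received))] ^= 1 << rng.randrange(8)
            if zlib.crc32(bytes(received)) == expected:
                break  # data verified, move on to the next burst
            # CRC mismatch: loop again, which is the "replay"
    return transmissions


def effective_bandwidth(nominal_gb_s, error_rate, n_bursts=2000, seed=0):
    """Nominal bandwidth scaled by the share of transmissions that were useful."""
    rng = random.Random(seed)
    bursts = [bytes(rng.getrandbits(8) for _ in range(32)) for _ in range(n_bursts)]
    sent = transfer_with_replay(bursts, error_rate, rng)
    return nominal_gb_s * n_bursts / sent


if __name__ == "__main__":
    for rate in (0.0, 0.1, 0.3):
        print(f"error rate {rate:.0%}: ~{effective_bandwidth(760, rate):.0f} GB/s")
```

With no errors the full nominal bandwidth is available. As the error rate climbs, more and more transfers are replays, so the useful bandwidth falls even though nothing crashes. That is the signal an overclocker would see as a performance plateau or drop.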

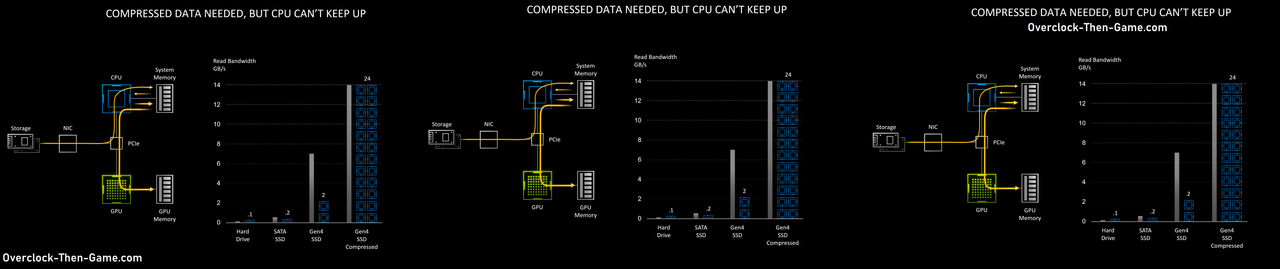

RTX I/O

Nvidia’s RTX I/O basically allows the RTX GPU to decompress game data directly instead of waiting on the slower CPU threads to decompress it. Now that NVMe SSDs move tons of data (2 to 6+ GB/s), that data needs to be handled quickly, and giving the GPU direct access to the NVMe SSD allows all of it to be loaded and decompressed very quickly. This will help shorten loading times and prevent images or objects from “popping” in during gameplay. It also happens concurrently, so the CPU remains free for other work while RTX I/O takes care of the heavy lifting, which should lead to increased FPS. During all of this, the RTX GPU will continue to leverage async compute workloads and keep all of the data flowing smoothly during gaming sessions. This technology is still in the works, and Nvidia is working closely with Microsoft on it. We should hear more about this tech later this year.
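A rough way to see why the decompression stage matters: in a streaming pipeline, the slowest stage sets the pace. The sketch below is a simplified model of that idea, not a description of how RTX I/O is implemented. The 6 GB/s SSD figure comes from the range mentioned above, while the CPU and GPU decompression rates and the 12 GB asset size are numbers I made up for illustration.

```python
def load_time_s(compressed_gb, ssd_gb_s, decompress_gb_s):
    """Time to stream and decompress assets when the slower stage is the bottleneck.

    decompress_gb_s is measured in GB of compressed input consumed per second.
    """
    return compressed_gb / min(ssd_gb_s, decompress_gb_s)


if __name__ == "__main__":
    assets_gb = 12.0  # hypothetical amount of compressed game data
    ssd = 6.0         # GB/s, upper end of the NVMe range quoted above
    cpu_rate = 1.5    # GB/s, assumed CPU decompression rate
    gpu_rate = 12.0   # GB/s, assumed GPU decompression rate

    print("CPU decompression:", load_time_s(assets_gb, ssd, cpu_rate), "s")
    print("GPU decompression:", load_time_s(assets_gb, ssd, gpu_rate), "s")
```

In this made-up scenario the CPU path is limited by decompression, while the GPU path is limited only by how fast the SSD can deliver data.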