Nvidia RTX 3080 Review

Introduction

In early May 2020, Nvidia's CEO Jensen Huang was seen standing in his kitchen, where he pulled something out of his oven. The “dish” he revealed contained Nvidia’s next-generation architecture, Ampere, and the A100 GPU. A few days later Huang formally introduced the groundbreaking A100, yes, from his actual kitchen, and from that point gamers waited patiently for Nvidia’s next GPU reveal based on the Ampere architecture.

Later, in September 2020, Nvidia’s CEO stated that the RTX 3000 series would be the “greatest generational leap in company history,” so naturally we had to know more. The first thing everyone was concerned about was price, and whether Nvidia could deliver on both price and performance. Little did we know that an RTX 3070 priced at $500 would match the previous flagship, the RTX 2080 Ti, which retailed for $1,200+. Third-party RTX 2080 Ti cards were priced well above $1,200 in some cases, so a cheaper alternative sounded great, at least at the time. Limited supply and ridiculous price markups ruined the initial launch and continued for many months after the RTX 3080 released; it is still an ongoing issue. The limited supply has also affected the RTX 3070 and 3090, but more on that later in this article.

That also meant that the next tier up, the flagship RTX 3080, would be even more powerful than the RTX 2080 Ti. Now that many reviews have been published, we can comfortably say that the RTX 3080 is roughly 30% faster than the RTX 3070 and RTX 2080 Ti, which makes the RTX 3000 series even more exciting. The expensive RTX 3090 ($1,499) is aimed at prosumers with workloads outside of gaming, which leaves Nvidia plenty of room to slot in an inevitable RTX 3080 “Ti” variant.

Lack Of Competition

Back in 2016 AMD decided to target the majority of gamers in the “mainstream” market, in other words gamers who didn’t spend more than $300 on a GPU. This left Nvidia in control of the high-end GPU market throughout the GTX 1000 and RTX 2000 series. The lack of high-end competition led to GPUs such as the RTX 2080 Ti being released at $1,199, and it also caused the GPU market to stagnate over the years. During this period Nvidia sandbagged and didn’t feel the need to compete unless it had to; it matched the competition just enough while AMD tried to catch up, and profited in the meantime. This approach also led to releases such as the “Super” variants (RTX 20xx SUPER), which kept Nvidia GPUs within arm’s reach of AMD in the high, mid and low tiers. The Super variants brought more CUDA cores, higher clocks and so on. This gave Nvidia time to get concurrent workloads under control, which was something its GTX 1000 series struggled with. Nvidia GPUs now perform well in DX12 and Vulkan titles that leverage Async Compute workloads at the hardware level. So Nvidia did use its time wisely while AMD's high-end releases, the Vega series and Radeon VII, arrived late to market. You can read more about AMD's late-to-market Vega series here, and about several reasons why AMD was late to match Nvidia by clicking here.

X58 + RTX 3080 YouTube Review

I created my second YouTube review video for those who would like to see a condensed version of this article. It covers several games and topics.

Meet Nvidia’s Ampere Architecture

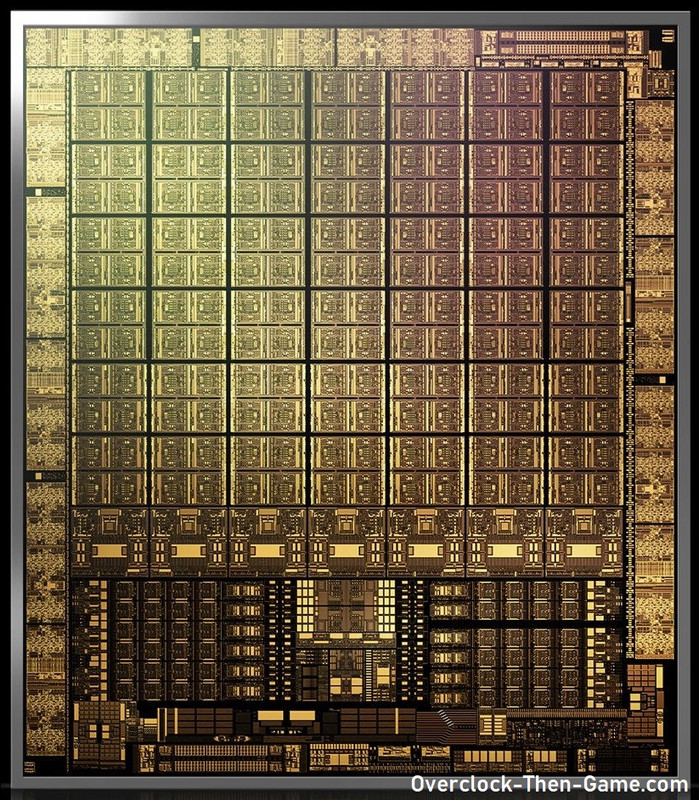

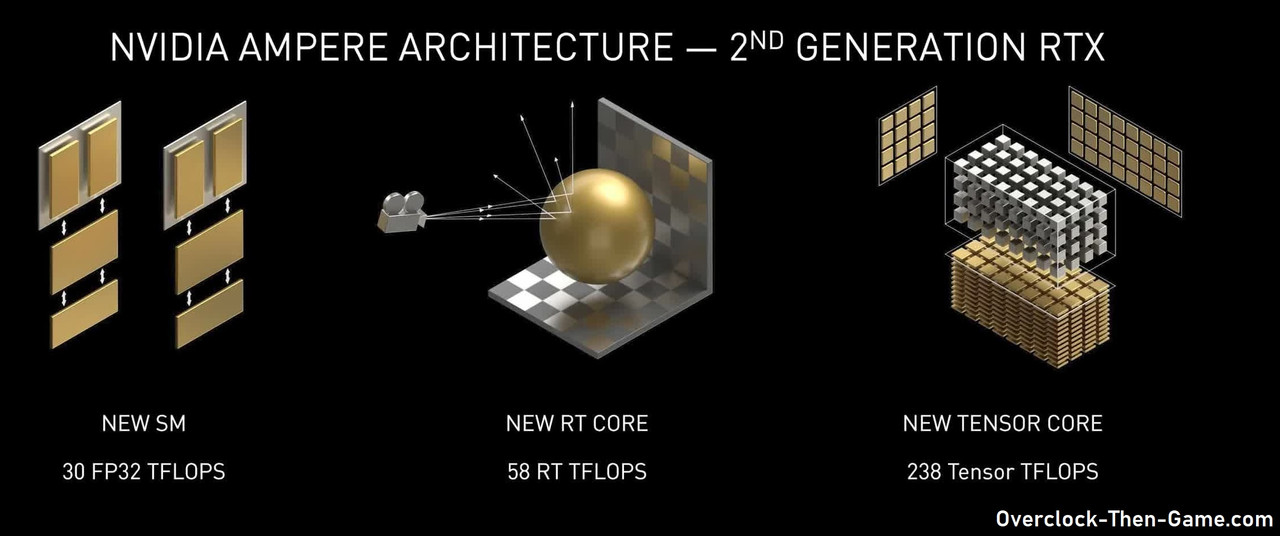

Fresh off their previous architecture, Turing (RTX 2000 series), Nvidia is once again positioning itself for market leadership across the board. Turing was a powerhouse fabricated on TSMC’s 12nm FinFET process, with 18.6 billion transistors in its largest die. Ampere uses Samsung’s 8nm process for the consumer RTX GPUs (RTX 3070/3080/3090), with 28.3 billion transistors in the largest of them, GA102, while the data-center A100 uses TSMC’s 7nm process and packs 54 billion transistors. Nvidia’s latest flagship and workstation-class GPUs, the RTX 3080 and RTX 3090 respectively, both use GA102, while the RTX 3070 uses GA104. The GA10x GPUs include several new features, such as revised and more numerous Streaming Multiprocessors, second-generation RT (Ray Tracing) Cores, third-generation Tensor Cores, a new reference cooling design, AV1 decoding and, on the RTX 3080 and 3090, GDDR6X memory. The biggest discussion in gaming right now, and over the past few years, is Ray Tracing, so I will start there.

2nd Generation Ray Tracing

Ray Tracing has been all the talk lately, and with more games supporting the technology Nvidia is going all in. Ray Tracing has always been very demanding to compute, and Nvidia has been pushing its hardware further to process the massive amounts of data involved. As games continue to combine rasterization with Ray Tracing for certain workloads, the Ampere architecture should allow higher performance and open up more possibilities.

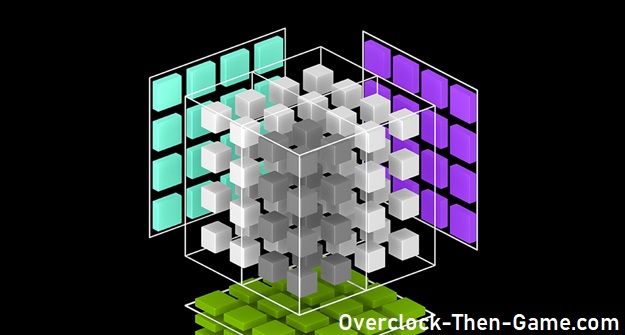

DLSS 2.0 & Tensor Core Improvements

DLSS (Deep Learning Super Sampling) has also gained a lot of attention and traction since Nvidia first rolled out DLSS 1.0, and Nvidia has been working hard to perfect the technology, reworking it into the much-improved DLSS 2.0. Here is a very basic description: DLSS renders each frame at a lower internal resolution, then uses a DNN (Deep Neural Network) running on the Tensor Cores, together with information from previously rendered frames, to reconstruct an image at the native output resolution. It seemed counterintuitive at first, and I was skeptical of this technology years ago, but I've learned over the years that there is more to DLSS than simple upscaling. It allows graphically demanding titles to reach playable frame rates at higher resolutions such as 1440p, 4K, 5K and 8K with little loss in image fidelity. With Ray Tracing in the mix, this becomes even more crucial for playable frame rates at higher resolutions.
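To put the "render lower, reconstruct higher" idea into numbers, here is a small sketch that computes the internal resolution DLSS 2.0 renders at for a given output resolution. It assumes the commonly cited per-axis scale factors for the Quality (2/3), Performance (1/2) and Ultra Performance (1/3) modes and leaves Balanced out; the function names are illustrative and not part of any Nvidia SDK.

```python
from fractions import Fraction

# Per-axis render scale for DLSS 2.0 modes (commonly cited values; Balanced omitted)
DLSS_SCALE = {
    "Quality": Fraction(2, 3),
    "Performance": Fraction(1, 2),
    "Ultra Performance": Fraction(1, 3),
}


def internal_resolution(width: int, height: int, mode: str) -> tuple[int, int]:
    """Resolution the GPU actually renders before DLSS reconstructs the output frame."""
    scale = DLSS_SCALE[mode]
    return round(width * scale), round(height * scale)


def pixels_saved(width: int, height: int, mode: str) -> float:
    """Fraction of output pixels that are not rendered natively in this mode."""
    w, h = internal_resolution(width, height, mode)
    return 1 - (w * h) / (width * height)


if __name__ == "__main__":
    for mode in DLSS_SCALE:
        w, h = internal_resolution(3840, 2160, mode)
        print(f"4K {mode}: renders {w}x{h}, {pixels_saved(3840, 2160, mode):.0%} fewer pixels")
```

At 4K, Performance mode renders a 1920x1080 frame, a quarter of the output pixels. The savings are in pixels rendered, not a direct FPS prediction, since the reconstruction pass itself also costs time each frame.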

GDDR6X

Normally we see AMD be the first company to bring new memory tech to the GPU market, but Nvidia has released the first GPU to use GDDR6X. Nvidia seems to be on a roll as a market leader, having brought hardware-based Ray Tracing and AI tech to a consumer-grade GPU, and now GDDR6X. This new technology is exciting since it allows the RTX 3080 to deliver 760 GB/s over a 320-bit memory interface, while the RTX 3090 reaches 936 GB/s over a 384-bit bus. The RTX 3070, by contrast, has a 256-bit memory bus and uses standard GDDR6. GDDR6X uses a new signaling technique called PAM4 to move two bits of data per transfer, twice as many as GDDR6's binary signaling. Instead of the typical binary scheme, where each transfer is a single 0 or 1, PAM4 uses four distinct signal levels, so each transfer carries one of four two-bit values (00, 01, 10 or 11). This was needed to feed the Ray Tracing Cores and Tensor Cores (Deep Neural Network, AI, DLSS) and to help gamers enjoy 4K games that require complex renders. There is a ton of data that needs to be crunched, and GDDR6X helps ensure that the GPU isn’t starved for data.

In addition to GDDR6X, Nvidia has spoken about a feature named "EDR" for its GDDR6X-equipped Ampere GPUs. EDR is short for "Error Detection and Replay". This technology lets gamers and overclockers recognize when they have hit their peak memory performance, but there is a catch: the GDDR6X memory subsystem resends any bad data, which lowers the effective memory bandwidth. Lower bandwidth results in lower performance, which tells overclockers that they have already hit their maximum. It is worth noting that CRC error checking has been standard in GDDR memory since GDDR5. There are two ways the error detection can catch corrupted data, and the latest can compress two separate 8-bit values for validation.
In previous memory overclocking scenarios, enthusiasts would overclock their GPU memory, and if they exceeded a certain threshold, errors would occur (black screens, driver crashes or resets, artifacts and weird pixels on screen, OS crashes and so on). To prevent this, GDDR6X EDR actively checks the CRC to ensure that the data sent and received is correct, and if it doesn't verify correctly, the data is resent and the effective bandwidth drops, as explained earlier. I suspect the ultimate goal is to keep users from damaging their GPUs in the long run, but either way this is a pretty cool feature that will help overclockers. It should stop gamers and overclockers from needlessly pushing their memory overclocks further, and prompt them to dial back their memory settings once they see diminishing returns.
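The replay behaviour described above can be illustrated with a toy model. This is not how Micron or Nvidia implement EDR in hardware: the CRC-8 polynomial (0x07), the 32-byte burst size and the error pattern are all hypothetical, chosen only to show why an unstable memory overclock shows up as lost bandwidth instead of an immediate crash. The model also assumes the CRC value itself always arrives intact.

```python
def crc8(data: bytes, poly: int = 0x07) -> int:
    """Bitwise CRC-8, MSB first, initial value 0 (polynomial 0x07 chosen for illustration)."""
    crc = 0
    for byte in data:
        crc ^= byte
        for _ in range(8):
            crc = ((crc << 1) ^ poly) & 0xFF if crc & 0x80 else (crc << 1) & 0xFF
    return crc


def transfer_with_replay(burst: bytes, channel, max_replays: int = 8) -> int:
    """Send one burst over `channel`, replaying it until the CRC matches.

    Returns how many transmissions were needed (1 = no replay).
    """
    expected = crc8(burst)
    for attempt in range(1, max_replays + 2):
        if crc8(channel(burst, attempt)) == expected:
            return attempt
    raise RuntimeError("too many replays: memory overclock is unstable")


def effective_bandwidth(raw_gb_s: float, n_bursts: int, corrupt_every: int) -> float:
    """Bandwidth left after replays, for a hypothetical link that corrupts the
    first attempt of every `corrupt_every`-th burst (0 = never corrupts)."""
    transmissions = 0
    for i in range(n_bursts):
        burst = bytes([i % 256]) * 32  # hypothetical 32-byte burst

        def channel(data: bytes, attempt: int, i: int = i) -> bytes:
            if corrupt_every and i % corrupt_every == 0 and attempt == 1:
                return bytes([data[0] ^ 0x01]) + data[1:]  # flip a single bit
            return data

        transmissions += transfer_with_replay(burst, channel)
    # Replayed bursts use link time but deliver no new data
    return raw_gb_s * n_bursts / transmissions


if __name__ == "__main__":
    print(effective_bandwidth(760, 100, 0))  # stable link
    print(effective_bandwidth(760, 100, 4))  # one in four bursts needs a replay
```

With one in four bursts needing a replay, the toy link drops from 760 GB/s to 608 GB/s: every byte still arrives intact, but performance falls, which is the cue to back off the memory overclock.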

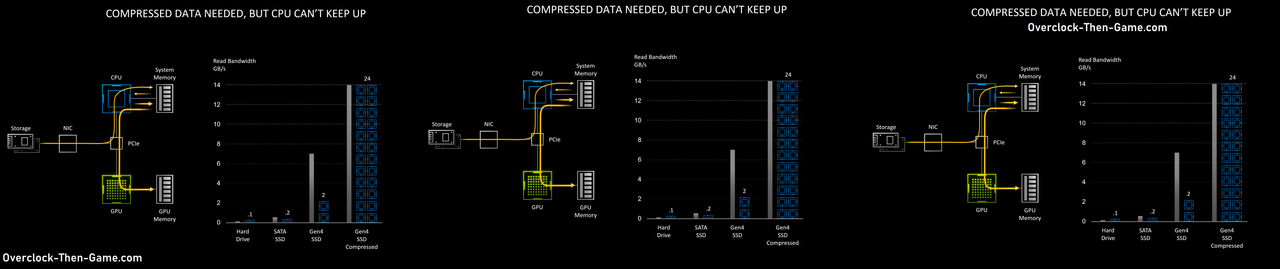
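The bandwidth figures in this section follow from simple arithmetic: per-pin data rate times bus width, divided by eight bits per byte. The sketch below assumes Nvidia's published memory speeds (14 Gb/s GDDR6 on the RTX 3070, 19 Gb/s and 19.5 Gb/s GDDR6X on the RTX 3080 and 3090) and also shows how PAM4 halves the number of transfers needed for the same data. The two-bit-to-level mapping is illustrative only; it is not the exact level coding GDDR6X uses.

```python
def peak_bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak bandwidth = per-pin data rate (Gb/s) x bus width (bits) / 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8


# Illustrative mapping of two bits to one of four signal levels
PAM4_LEVEL = {(0, 0): 0, (0, 1): 1, (1, 0): 2, (1, 1): 3}


def nrz_symbols(bits: list[int]) -> list[int]:
    """Binary (NRZ) signalling as in GDDR6: one bit per transfer, two levels."""
    return list(bits)


def pam4_symbols(bits: list[int]) -> list[int]:
    """PAM4 signalling as in GDDR6X: two bits per transfer, four levels."""
    if len(bits) % 2:
        raise ValueError("PAM4 encodes bits in pairs")
    return [PAM4_LEVEL[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


if __name__ == "__main__":
    cards = {  # (per-pin data rate in Gb/s, bus width in bits)
        "RTX 3070 (GDDR6)": (14, 256),
        "RTX 3080 (GDDR6X)": (19, 320),
        "RTX 3090 (GDDR6X)": (19.5, 384),
    }
    for name, (rate, width) in cards.items():
        print(f"{name}: {peak_bandwidth_gb_s(rate, width):.0f} GB/s")
    byte = [1, 0, 1, 1, 0, 0, 0, 1]
    print(len(nrz_symbols(byte)), "NRZ transfers vs", len(pam4_symbols(byte)), "PAM4 transfers")
```

This reproduces the 760 GB/s and 936 GB/s figures above, and 448 GB/s for the RTX 3070. One byte takes eight binary transfers but only four PAM4 transfers.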

RTX I/O

Nvidia’s RTX I/O basically allows the RTX GPU to decompress game data itself instead of relying on, and waiting for, slower CPU threads to do it. With NVMe SSDs moving tons of data (2 to 6+ GB/s), that data needs to be handled quickly, and giving the GPU a direct path to the NVMe SSD lets all of it be loaded and decompressed very quickly. This should help with quicker loading times and prevent textures or objects from “popping” in during gameplay. Because this happens concurrently, the CPU remains free to handle other work while RTX I/O does the heavy lifting, which could lead to higher FPS. Throughout, the GPU will continue to leverage async compute workloads to keep data flowing smoothly during gaming sessions. The technology is still in the works, and Nvidia is working closely with Microsoft, as RTX I/O is designed to work with Microsoft's upcoming DirectStorage API. We should hear more about it later this year.

The Ultimate Play

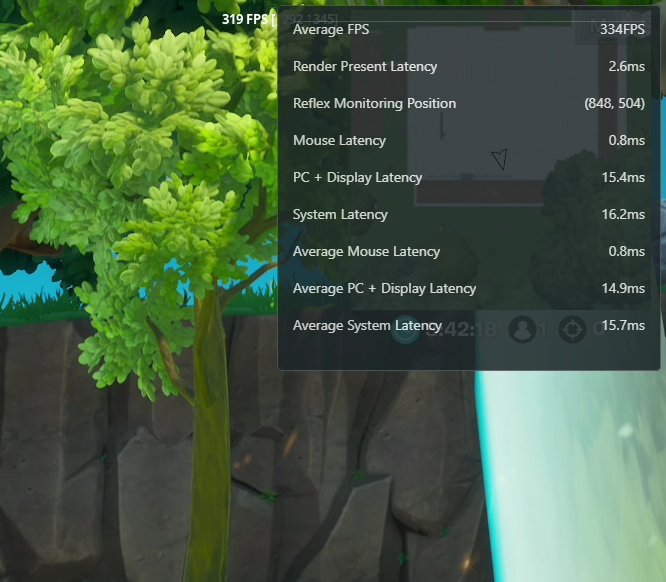

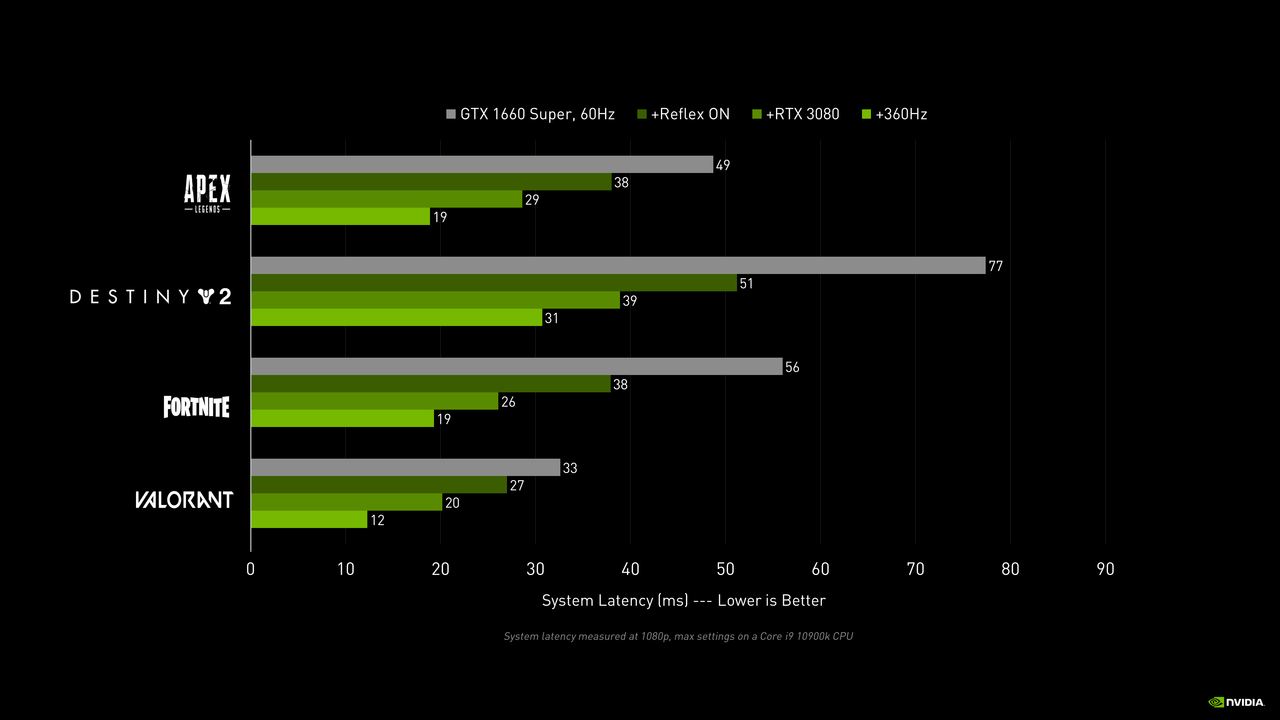

Nvidia Reflex

Nvidia’s latest marketing slogan, “The Ultimate Play,” is its way of tackling all of the current trends in gaming, all the way down to streaming. Starting with esports gamers, Nvidia is touting its “Nvidia Reflex” tech, which reduces latency in esports and other competitive games. There is a huge market for this type of technology, and AMD capitalized on it a few years back with its Anti-Lag and FreeSync tech. Reflex is delivered through Nvidia’s proprietary Reflex SDK, which game developers integrate into their titles.

NVIDIA Reflex Latency Analyzer in action

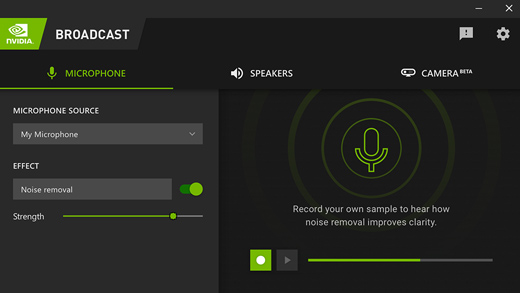

Nvidia Broadcast App

Nvidia is definitely taking on the competition and taking things to the next level with this tech. The Nvidia Broadcast App takes a lot of existing features and combines them in one app. This makes streaming very easy to set up and can solve a lot of issues, such as background noise, with noise removal that lets the app output only your voice. For example, if you are typing on a keyboard during a stream or moving “loud” items around your desk, anything other than the sound of your voice will be removed. Background noise has long been a big issue, and many types of software, including Nvidia’s Broadcast App, now address it.

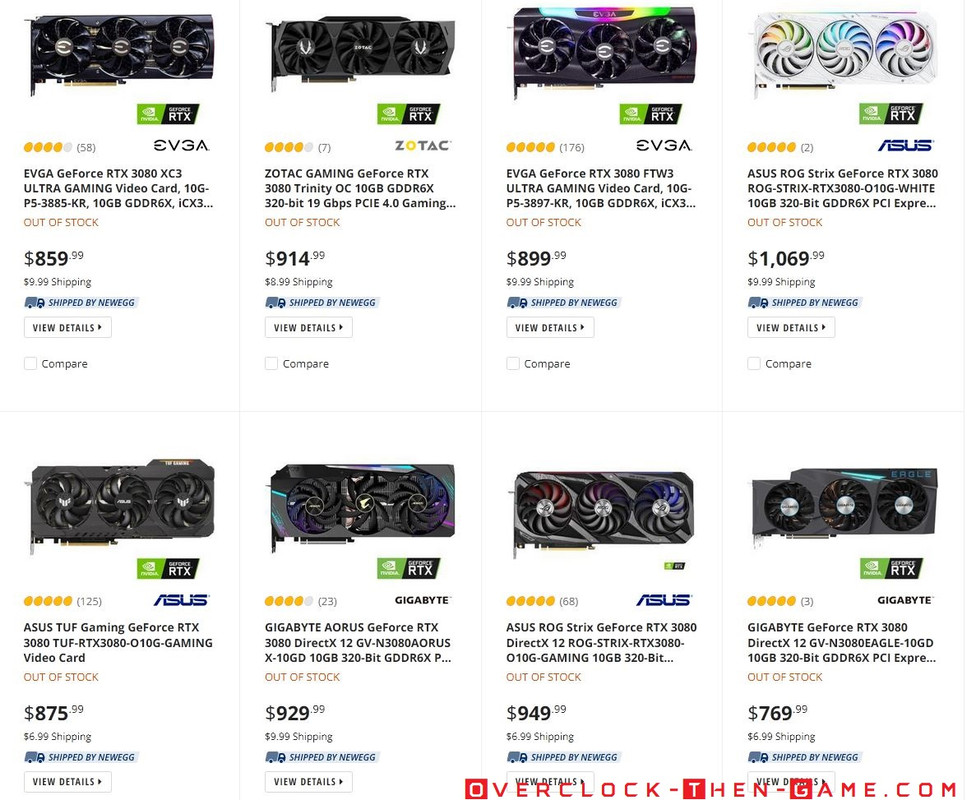

Nvidia’s RTX 3000 Series Launch Has Been A Disaster

My RTX 3080 review is so late because it took many months before I could get my hands on an RTX 3080. I wrote an article on the stock issues affecting Nvidia’s RTX 3000 series and AMD’s RX 6000 series. The RTX 3000 series wasn’t the rollout that Nvidia was expecting, and the community did not pull any punches. Many have labeled it a paper launch given the low number of units available months after release, but I think there is more to the situation than that. Of course we have the COVID-19 pandemic, slowing GDP around the world, manufacturing and shipping issues worldwide, people unable to work normally due to the pandemic, civil unrest in different nations and so on. Jensen literally gave his presentation from his kitchen at home, so this year will definitely be one for the history books. We aren’t sure whether the shortage is due to Samsung 8nm yield issues or some of the issues I listed earlier, but there have been some serious problems with this launch, which I will explain below. As if that weren’t bad enough, independent journalists and reviewers such as myself weren’t able to get their hands on the RTX 3080 prior to release, while sponsored “journalists/reviewers” were. Nvidia also pushed the review publish date closer to release, which gave consumers roughly two days, or in some cases only 24 hours depending on family, work and so on, to read reviews before the cards went on sale. Although Nvidia and AIBs have pushed out cards as fast as they could, scalpers and bots have shown up to ruin the day.

RTX 3080 Crashing Issues

The first big issue was constant crashing during gaming sessions. The cause appears to have been the capacitors, which were apparently causing instability. This could have been AIBs not following the specifications listed by Nvidia and taking alternative or cheaper routes. GPU clock and power related issues may have played a part as well. The issue ultimately led to stability problems and to GPUs being recalled and replaced. Luckily, Nvidia was quick to release a driver update (GeForce Game Ready Driver 456.55) that lowered the GPU boost clocks for the few gamers who actually owned the card at that time.

Bots, Scalpers And Limited Supply

As if limited supply weren’t enough for Nvidia and AIBs to deal with, we now have bots and scalpers making things much worse than they should have been. The RTX 3070 MSRP is $499, the RTX 3080 FE MSRP is $699 and the RTX 3090 MSRP is $1,499. You will almost never find these prices online, as cards are quickly purchased by legitimate consumers and, mostly, by bots for scalping purposes. Prices have actually increased due to tariffs and other factors. Bots have been a problem for years, and the problem isn’t going away anytime soon. Bots allow scalpers to purchase all of the available stock, which leads to the already “limited stock” selling out completely. That stock is then resold in the aftermarket by scalpers looking to make a huge profit. Scalping isn’t limited to Nvidia, AMD, Intel or console gaming; it has been an issue in many industries whenever a limited run or short supply meets large demand. The RTX 3080 and 3090 have been hard to buy at a decent price since in nearly all cases they can only be purchased online. This makes scalpers’ lives even easier, since bots can react and buy anything online faster than any human can. Remember that there are TONS of bots crawling through many websites every second, checking stock of RTX 3000 series GPUs. Once stock is available, the bots attempt to purchase as many GPUs as possible. eBay listings show the RTX 3080 (MSRP $699) selling for as much as $1,000 to $1,400+, which is completely ridiculous pricing. I’ve read comments where people say things like paying $950 for a $700-$800 GPU is “reasonable” at this point. eBay isn’t the only place where scalpers are cashing in; Amazon resellers, Newegg resellers and even legitimate local stores and trusted e-tailers are looking to profit from the RTX 3000 shortages and hype.
You can read more about my thoughts in my article “Nvidia & AMD This Is Why You Should NEVER Paper Launch A Product” (click here). With all of that being said, let’s see how well my nearly 13-year-old X58 platform performs with Nvidia’s latest and greatest, the RTX 3080.

RTX 3080 Specifications

Once again I am attempting to stretch the X58 platform to its limits. For those who don’t know, I started this “X58 craze” back in 2013 and got a lot of enthusiasts interested in the platform again. For many years, well after the X58 released, I have shown how well it performs against Intel’s latest and greatest; during that era the top of the line was Intel’s Sandy Bridge-E and Ivy Bridge-E. Now, in 2021, I will provide more information about how this legacy platform fares against modern platforms. For this special occasion I have pushed my CPU overclock to 4.6GHz and tightened my DDR3-1600 timings to 8-9-8-20. I know this doesn’t sound like much, but we will see how well these settings perform with Nvidia’s latest flagship. I have also separated the overclocked results from the stock results to prevent confusion in some of my benchmarks. This article will shed more light on X58 performance, CPU and PCIe bottlenecks, and RTX 3080 performance. The main focus is 4K, since most people will purchase this GPU for high resolutions.

Real Time Benchmarks™

Real Time Benchmarks™ is something I came up with to differentiate my actual in-game benchmarks from the built-in or internal standalone benchmark tools that games offer. Sometimes a game's internal benchmark tool doesn't provide enough information. I gather the data myself and use several methods to ensure the frame rates are correct for comparison. This way of benchmarking takes a while, but it is worth it in the end. It is the least I can do for the gaming community and for users wondering whether the X58 and current GPU hardware can still handle newly released titles. I have been performing Real Time Benchmarks™ for about 7 years now, and I plan to continue providing additional data instead of depending solely on internal benchmark tools or synthetic benchmark apps.

-What is FPS Min Caliber?-

You’ll notice something named “FPS Min Caliber”. FPS Min Caliber™ is something I came up with to differentiate from the absolute FPS minimum, which could simply be a moment during gameplay when data is loading, saving or uploading, DRM is checking and so on. FPS Min Caliber™ is my way of letting you know the lowest average FPS you can expect to see during gameplay. The minimum FPS [FPS min] can be very misleading: it is what you'll encounter only about 0.1% of your playtime, and most times you won’t even notice it. The average FPS and frame time are what you'll encounter for the vast majority of your playtime.

-What is FPS Max Caliber?-

FPS Max Caliber uses the same type of thinking for the maximum FPS. Instead of focusing on the highest max frame rate, which you'll only see 0.1% of the time, I have included the FPS Max Caliber you can expect to see during actual gameplay. That being said, I will still include both the minimum FPS and the maximum FPS. Pay attention to the charts, since some list 0.1% lows (usually for synthetic benchmarks), but I use 1% lows for nearly all of my benchmarks. In the past I used 97th percentile results, but now I use the 1% lows most of the time. I just thought I would let you enthusiasts know what to expect while reading my benchmark numbers.
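For readers who want to compute similar numbers from their own frame-time captures, here is a minimal sketch. It uses one common definition of the 1% and 0.1% lows (the average FPS of the slowest 1% or 0.1% of frames); other tools use the FPS at the 99th or 99.9th percentile frame time instead, and this is not necessarily the exact method behind FPS Min Caliber™ or FPS Max Caliber™. The input format, a list of per-frame times in milliseconds, is an assumption.

```python
import math
from statistics import mean


def fps_summary(frame_times_ms: list[float]) -> dict[str, float]:
    """Summarize a capture of per-frame render times given in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    fps = sorted(1000.0 / t for t in frame_times_ms)  # slowest frames first

    def low(percent: float) -> float:
        # Average FPS of the slowest `percent` of frames (rounded down, at least one frame)
        n = max(1, math.floor(len(fps) * percent / 100.0))
        return mean(fps[:n])

    return {
        # Average FPS = frames rendered / seconds elapsed, not the mean of per-frame FPS
        "avg": len(frame_times_ms) / (sum(frame_times_ms) / 1000.0),
        "min": fps[0],   # single worst frame, e.g. a loading or saving hitch
        "max": fps[-1],  # single best frame
        "1% low": low(1.0),
        "0.1% low": low(0.1),
    }


if __name__ == "__main__":
    # Hypothetical capture: 990 frames at 10 ms, 9 frames at 25 ms and one 50 ms hitch
    capture = [10.0] * 990 + [25.0] * 9 + [50.0]
    for name, value in fps_summary(capture).items():
        print(f"{name}: {value:.1f}")
```

In this made-up capture the absolute minimum and the 0.1% low are both the single 50 ms hitch (20 FPS), while the 1% low averages that hitch with nine 40 FPS frames and lands at 38 FPS, a much better picture of what you actually feel during play.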

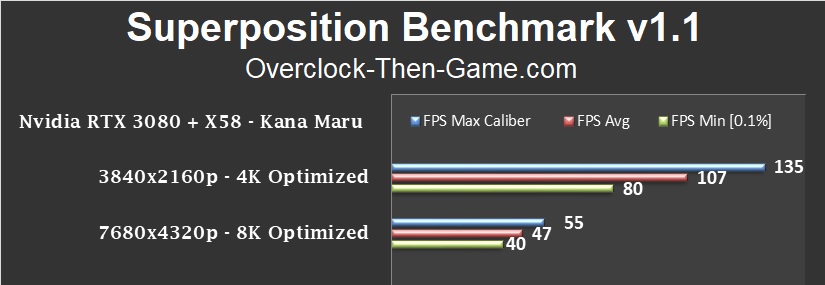

Unigine - Superposition

Unigine's Superposition is a very well-known benchmarking and stability tool. Unigine's Heaven initially released in 2009, followed by Valley in 2013 and Superposition in 2017, and all three tools give gamers valuable information across several generations of GPU architectures. The benchmarks are also gorgeous, and Heaven still looks great by today's standards, so don't be fooled by its age. I have decided to use Unigine's latest and greatest, Superposition, for the RTX 3080. Not only is this benchmark more up to date than Unigine's previous ones, but it also has an 8K (7680x4320) benchmark built in that we can use for reference.

Superposition Benchmark v1.1

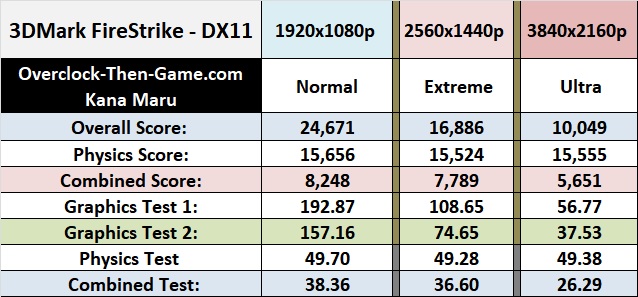

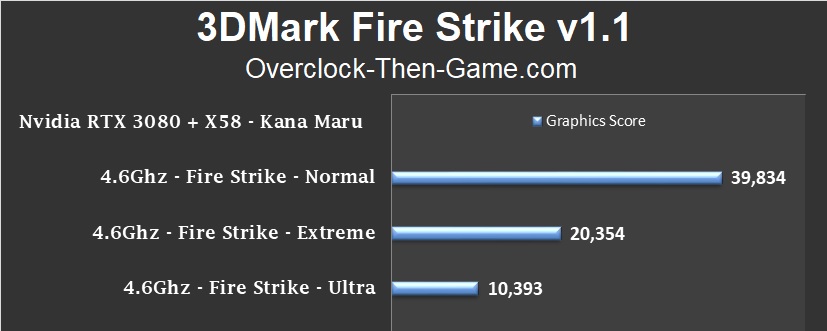

3DMark - Fire Strike

3DMark’s Fire Strike is another widely used benchmarking and stability testing tool. I have included my results below for all three popular resolutions. Be sure to check out the “RTX 3080 Overclock - Synthetic Benchmarks” section for stock and overclock comparisons.

3DMark - Fire Strike

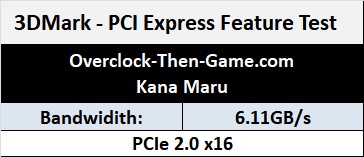

3DMark - PCIe Bandwidth Test

Even with PCIe 2.0 x16, I have always felt confident running the latest and greatest graphics cards for the past decade or so. The X58 is limited to PCIe 2.0, but is it truly limited? In this review we will find out.
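To put the PCIe 2.0 question into numbers, here is a sketch of the theoretical per-direction bandwidth of an x16 slot for each generation. The per-lane transfer rates and line encodings come from the PCI-SIG specifications (8b/10b for PCIe 2.0, 128b/130b for 3.0 and 4.0; the RTX 3080 itself is a PCIe 4.0 x16 card). Real-world throughput, such as what 3DMark's PCIe bandwidth test reports, is lower because of packet and protocol overhead that this sketch ignores.

```python
from fractions import Fraction

# Per-lane transfer rate (GT/s) and line-encoding efficiency for each PCIe generation
PCIE_GENERATIONS = {
    "2.0": (5, Fraction(8, 10)),     # 8b/10b encoding: 20% overhead
    "3.0": (8, Fraction(128, 130)),  # 128b/130b encoding: about 1.5% overhead
    "4.0": (16, Fraction(128, 130)),
}


def pcie_bandwidth_gb_s(gen: str, lanes: int = 16) -> float:
    """Theoretical one-direction bandwidth in GB/s, before packet/protocol overhead."""
    gt_per_s, efficiency = PCIE_GENERATIONS[gen]
    return float(gt_per_s * efficiency * lanes / 8)  # 8 bits per byte


if __name__ == "__main__":
    for gen in PCIE_GENERATIONS:
        print(f"PCIe {gen} x16: {pcie_bandwidth_gb_s(gen):.2f} GB/s per direction")
```

On paper, an x16 PCIe 2.0 slot offers 8 GB/s per direction, about half of PCIe 3.0 and a quarter of PCIe 4.0; the benchmarks that follow show how much of that gap actually matters in games.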

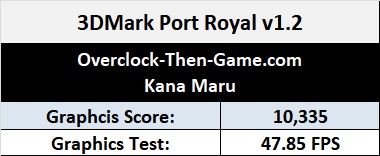

3DMark - Port Royal


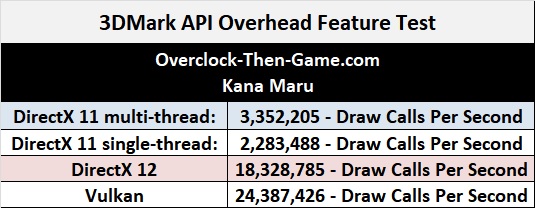

The API Overhead Feature Test measures several APIs that are used in most games today: DirectX 11 single-threaded and multi-threaded, DirectX 12 and Vulkan. Looking at the Vulkan draw calls, it is easy to see why I’m a huge fan of the Vulkan API. The more draw calls the CPU can issue, the better it is for the GPU pipeline and the lower the chance of a bottleneck.

3DMark - API Overhead Feature Test

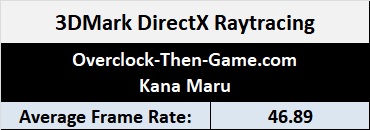

3DMark - DirectX Ray Tracing & TimeSpy

3DMark’s DirectX Raytracing Feature Test renders the entire scene with ray tracing. The whole test is built around ray tracing, with random variables to test the ray tracing capabilities of the GPU. For direct comparisons without random variables, you can set exact settings if you choose. I have used the default benchmark settings.

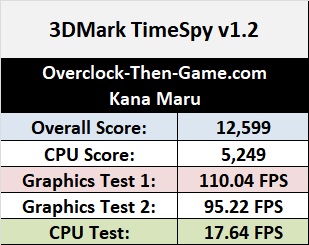

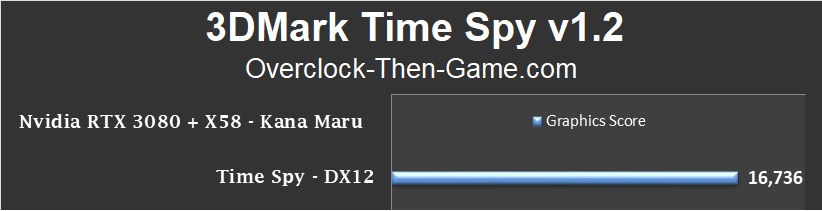

3DMark - TimeSpy

TimeSpy is a DirectX 12 benchmarking tool. Many years ago, when Nvidia didn't properly support hardware async compute features, I thought this tool was best suited for comparing Nvidia GPUs against other Nvidia GPUs, and likewise AMD GPUs against other AMD GPUs. However, now that Nvidia properly supports async compute, my opinion 'may' have changed.

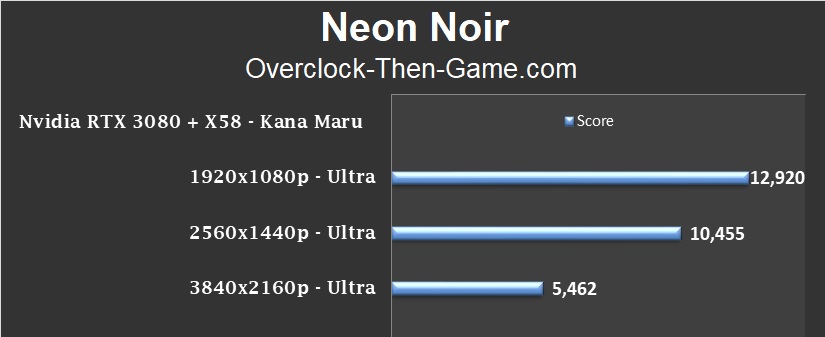

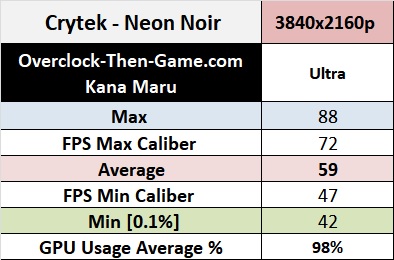

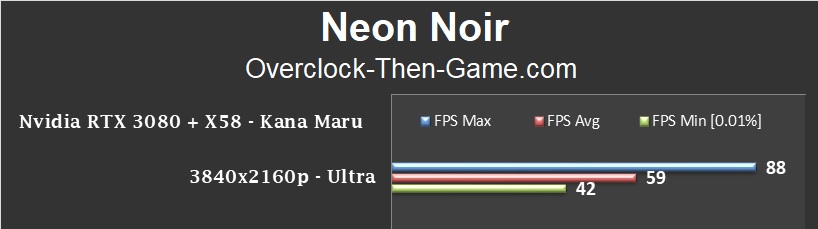

Crytek: Neon Noir - Ray-Tracing

Crytek released its Neon Noir Ray Tracing benchmark in late 2019. Ray Tracing has been the talk amongst gamers over the past few years and has been gaining widespread attention. Neon Noir works on both AMD and Nvidia GPUs by using Crytek's Total Illumination tech within CryEngine. Crytek produces some of the most graphically stunning games and Neon Noir is no exception, but the main focus here is Ray Tracing. Below I have shown the performance scores for 1080p, 1440p and 2160p (4K). I have also captured the actual frames per second along with the GPU usage percentage at 4K. This should give plenty of info to anyone wondering about X58 and RTX 3080 Ray Tracing performance in Crytek's Neon Noir.

Neon Noir - Ray-Tracing

Neon Noir - 4K In-depth Analysis

Looking deeper into the benchmark, we can see that the RTX 3080 averages 59FPS with a low of 42FPS. The X58 + X5660 is feeding the GPU plenty of data at 4K. 60FPS or higher is the ultimate goal, and so far the RTX 3080 is performing very well.

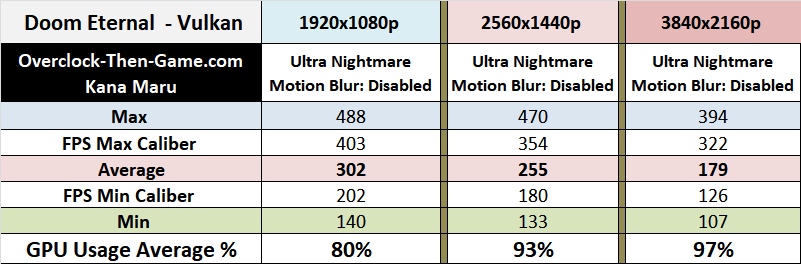

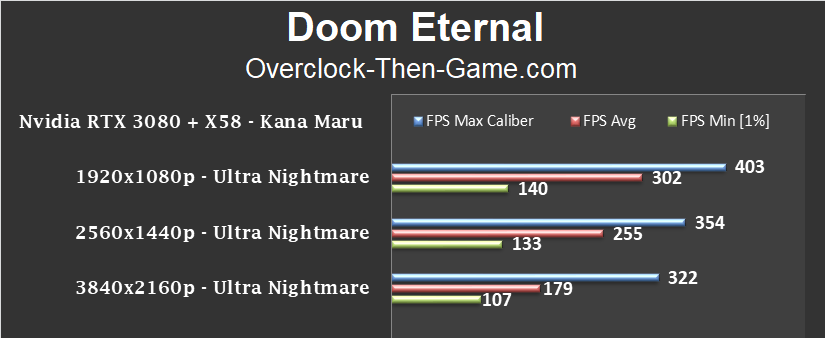

Doom Eternal

Once again I would like to kick my benchmarks off with Doom Eternal. The engine is well programmed and supports Async Compute very well. The graphics are still great, and this game is all about killing demons to great music. As you can see, the X58 has no problems pumping out the frames. Even with limited PCIe 2.0 bandwidth and a nearly 13-year-old platform, the X58 still performs great. At 1080p it gave me an average of 302fps, 1440p shows 255fps and 4K gave me 179fps. 4K is the main focus of this review, but it is nice to show what the X58 is still capable of at 1080p with a well-coded title.

Real Time Benchmarks™

X58 + RTX 3080 - 4K - Doom Eternal Gameplay

X58 + RTX 3080 - 1080p - Doom Eternal Gameplay

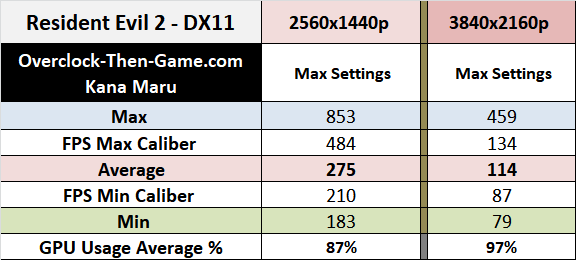

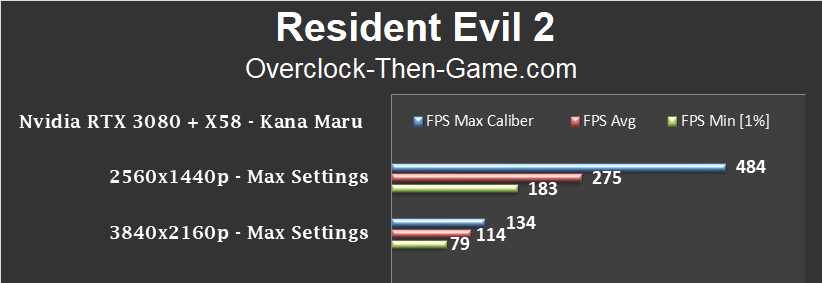

Resident Evil 2

The original (1998) Resident Evil 2 is one of my favorite video game releases of all time, and the remake has quickly become one of my favorite games to date. This remake of RE2 is just as great as the GameCube remake of Resident Evil 1 (REmake). The game is also optimized very well, and Capcom has provided a seamless experience: there are no loading screens for a large portion of the game once you start playing, and even during cutscenes and events the game continues to flow smoothly. Beyond the awesome gameplay, this title offers a lot of graphical settings that are easy to understand and set. The RE Engine is optimized very well and the RTX 3080 has no problem with it. The X58 platform with PCIe 2.0 delivered an average of 275FPS at 1440p and 114FPS at 4K. As you can see, I’m hitting a CPU bottleneck at the lower resolution, but things get a bit better at 4K. Either way, that is about as good as it gets for RE2, and that’s great news based on the numbers I’m seeing. More than 110fps average at 4K is awesome.

Real Time Benchmarks™

X58 + RTX 3080 - 4K - Resident Evil 2 Gameplay

Shadow of the Tomb Raider

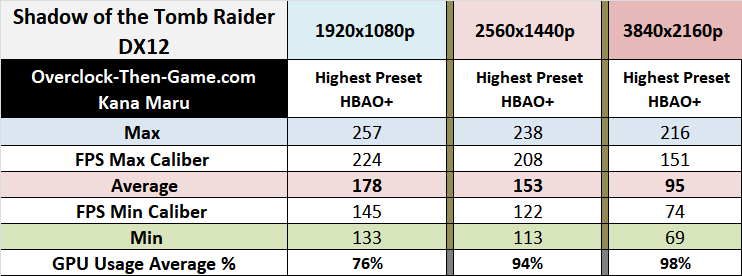

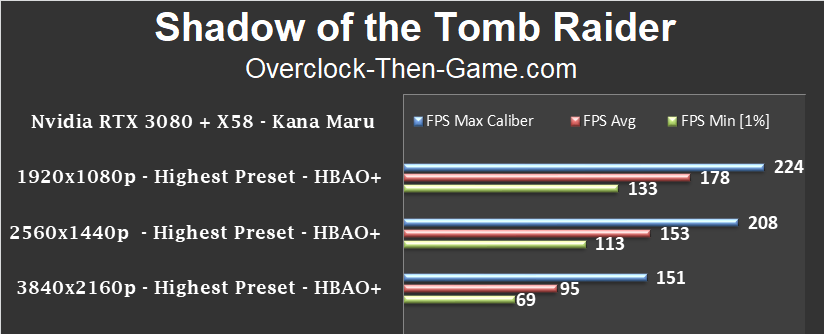

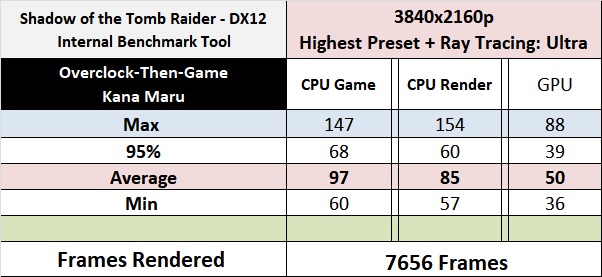

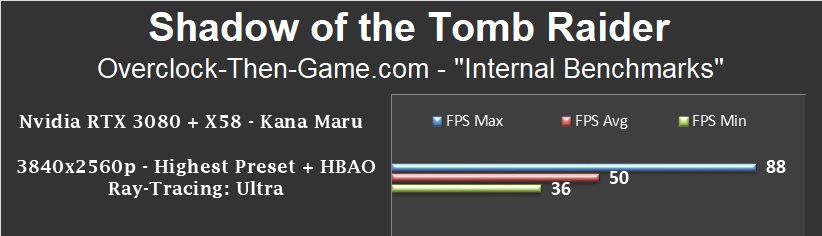

Shadow of the Tomb Raider, developed by Eidos Montréal, marks Lara’s final chapter in the trilogy that began with the 2013 reboot. It also takes advantage of modern technology (async compute) that Nvidia has properly supported since Turing, and Ampere builds on Turing's improvements to asynchronous workloads. The previous Tomb Raider title had major DX12 async compute and frame pacing issues shortly after release (click here to read about the issues I experienced). Eidos eventually resolved all of those problems, and DX12 and Async Compute performed very well (I also show the improved results in the previously linked article). This time around everything appears to be fine, and DX12 has not given me any issues. As most of you have probably expected by now, the lower resolutions run fine. Moving up to 4K, we continue to see the RTX 3080 perform extremely well, averaging 95FPS. Although I didn’t run a Real Time Benchmark with the Ray Traced Shadows feature, I did enable it in the game's built-in internal benchmark tool. You can view the internal benchmark results with Ray Tracing on Ultra below my Real Time Benchmarks™. This is another title where I could not enable DLSS, but I am working on a solution.

Real Time Benchmarks™

Shadow of the Tomb Raider - Internal Benchmark Test

Here are the results with Ray Traced Shadows on the max setting, "Ultra". This adds translucent shadows in addition to ray-traced shadows from directional and spot lights, all rendered in real time. Compared to standard shadow maps, real-time ray-traced shadows can be very demanding to run. The benchmark covers several areas that use the DirectX Ray Tracing features, and the Ray Tracing looks really nice during the benchmark scenes.

Be sure to check out the RTX 3080 Overclocked section later in this article to see how well this game performs with an overclock.

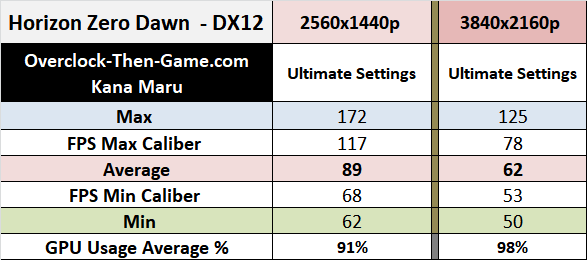

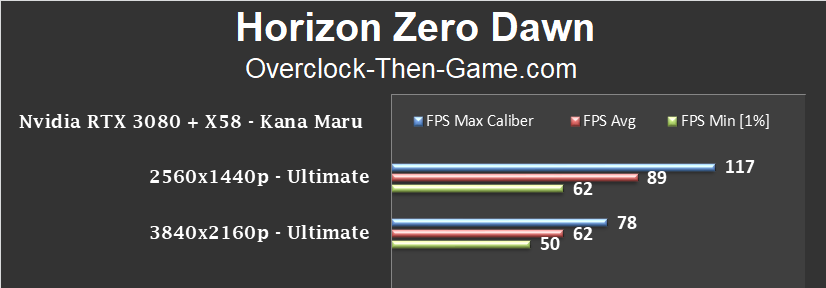

Horizon Zero Dawn

Horizon Zero Dawn was one of the biggest PC releases last year. The game runs very well and definitely makes the RTX 3080 sweat at 4K, while my Xeon X5660 keeps up very well. I have included a gameplay video below to showcase the performance.

Real Time Benchmarks™

X58 + RTX 3080 - 4K - Horizon Zero Dawn Gameplay

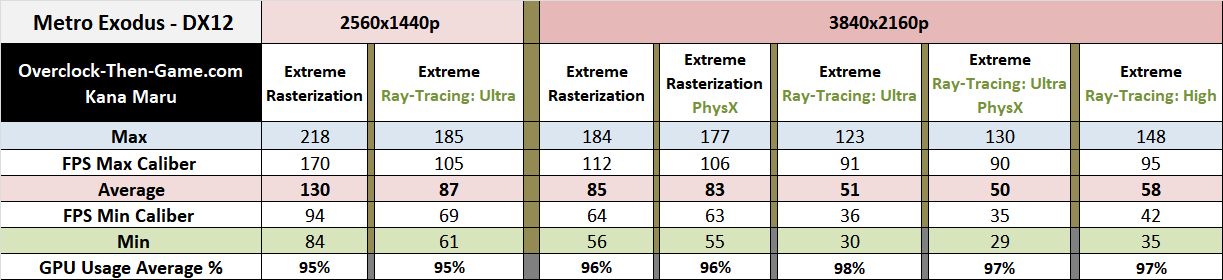

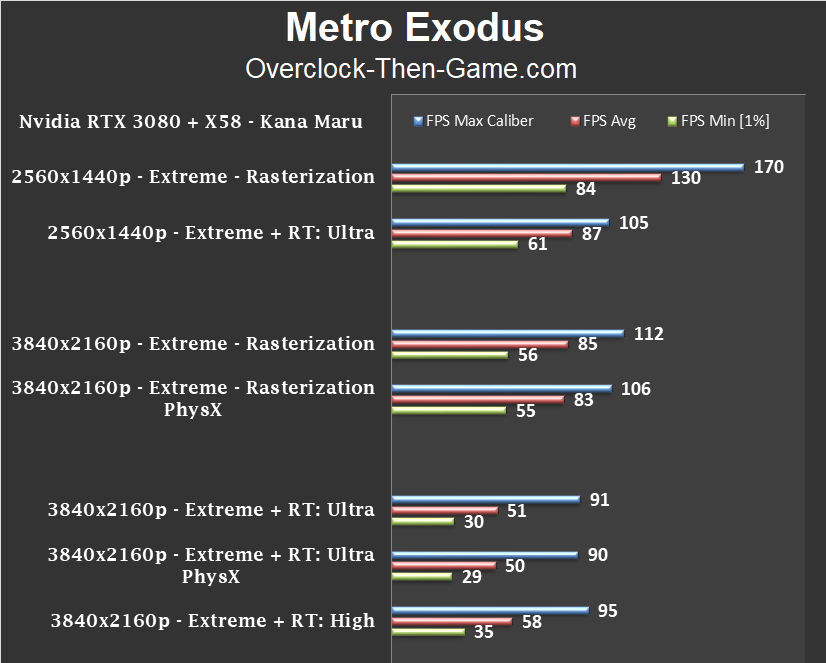

Metro Exodus

I ran quite a few benchmarks in Metro Exodus. Looking at the 1440p results, we can see that going from traditional rendering to Ray Tracing with the “Ultra” preset leads to a 33% drop in average FPS, and the minimum FPS drops by 27%. Although those drops are large, 1440p is very playable with Ray Tracing enabled: the RTX 3080 stays above 60FPS in worst-case scenarios and averages a nice 87FPS. At 4K, rasterization shows about 85fps on average even with PhysX enabled. Once we move to Ray Tracing, we see a sharp decline to 51FPS with the Ultra Ray Tracing preset, a 40% reduction in average FPS. The minimum FPS drops by 46%, which is the biggest problem, along with some micro-stutter here and there. DLSS will definitely be needed at 4K. Lowering the Ray Tracing preset to “High” does help, pushing 4K performance to 58fps, a roughly 14% increase over Ray Tracing “Ultra”. I could not enable DLSS in this game, so none of the results include DLSS. I’m currently working on getting DLSS enabled, but hopefully these results will suffice.
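The percentage changes quoted above are easy to double-check. This sketch simply computes the relative change between two average FPS values; the inputs are the 4K averages reported in this section, and the function name is illustrative.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent (negative = drop)."""
    if before == 0:
        raise ValueError("baseline FPS must be non-zero")
    return (after - before) / before * 100.0


if __name__ == "__main__":
    # 4K averages from this section: rasterization, RT Ultra, RT High
    print(f"Raster -> RT Ultra: {percent_change(85, 51):.1f}%")
    print(f"RT Ultra -> RT High: {percent_change(51, 58):+.1f}%")
```

Drops and recoveries are not symmetric: going from 85 to 51 FPS is a 40% drop, but climbing back from 51 to 85 FPS would be roughly a 67% increase, so always check which value is the baseline.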

Real Time Benchmarks™

Cyberpunk 2077

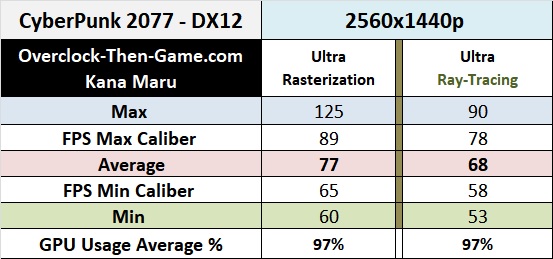

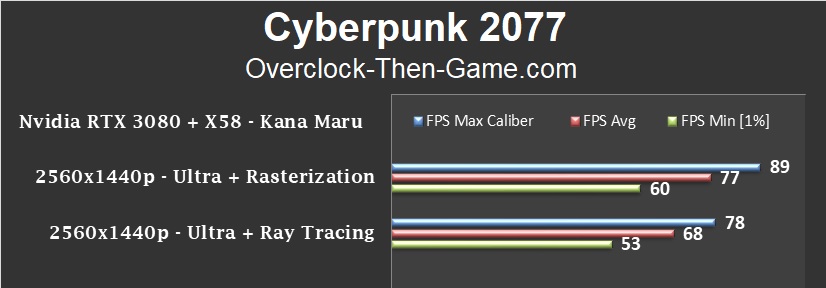

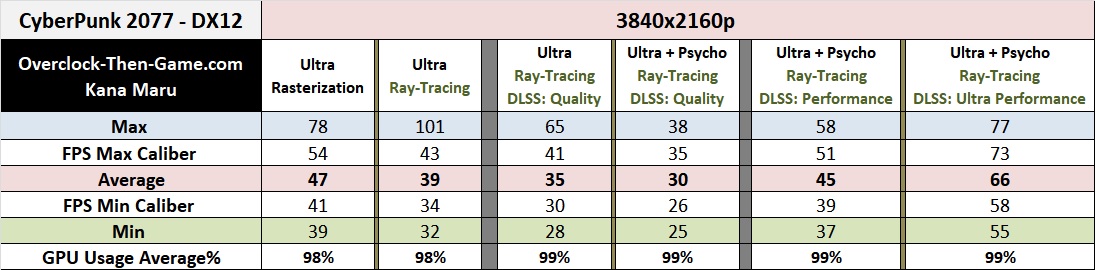

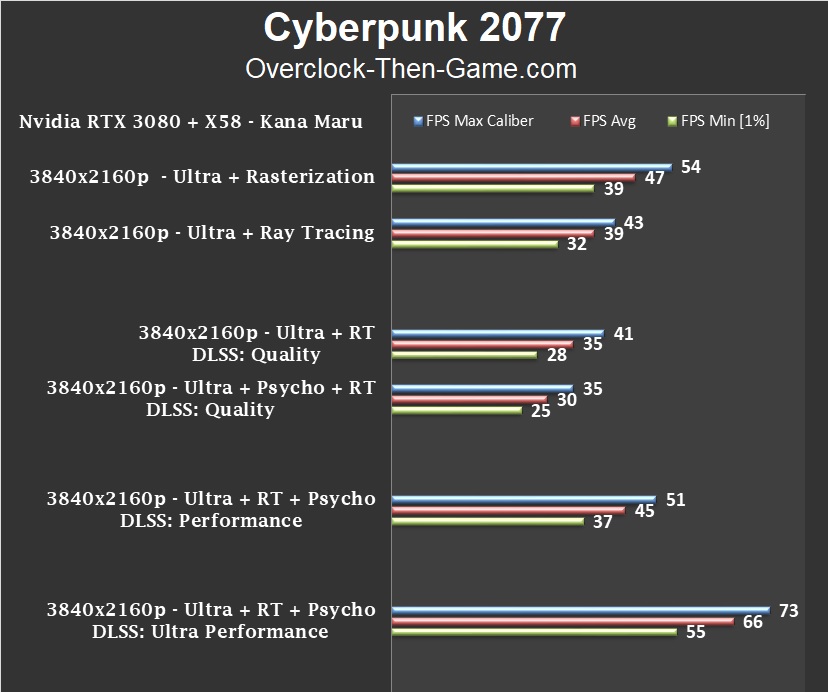

Cyberpunk 2077, one of the biggest games of the year, definitely needs a ton of horsepower to run. The Nvidia RTX 3080 should be well positioned to handle it, but Cyberpunk 2077 seems to be a bit much for the RTX 3080 even with rasterization only. The game has received several patches and more are expected, so performance will hopefully improve sooner rather than later. The main focus is 4K, but I did run a few 1440p benchmarks. Some graphical settings go up to a “Psycho” level for higher image quality: Screen Space Reflection Quality and Ray Traced Lighting.

1440p - Real Time Benchmarks™

1440p is the sweet spot at the moment if you want to use Ray Tracing + Ultra Preset without DLSS. GPU usage is around 97%, so newer platforms will be able to perform slightly better, but I don't expect a huge jump in performance.

4K - Real Time Benchmarks™

4K can be problematic with Ray Tracing, and the Ultra and Psycho settings don’t make matters any better. The best-case scenario is Ultra + Ray Tracing (Lighting: Ultra) + DLSS: Quality, which gives about 35fps on average. It’s all downhill from there when moving to the “Psycho” settings: Ultra + Psycho + RT + DLSS: Quality hits 30FPS on average. The experience isn’t the greatest, especially when it comes to shooting and quick movements. Luckily DLSS has different modes that can help offset the cost of the Ray Tracing features, and I’ve included several benchmarks using different DLSS settings. The goal is the best image quality possible with a great gameplay experience, and the only modes that get there are DLSS: Performance and DLSS: Ultra Performance. I found Ultra Performance to look a bit blurry in certain areas, although it will get you more than 60fps. My best compromise was Ultra + Psycho + RT + DLSS: Performance, which runs pretty well at 4K with an average of 45fps. It’s great that we have several options to enjoy the game.

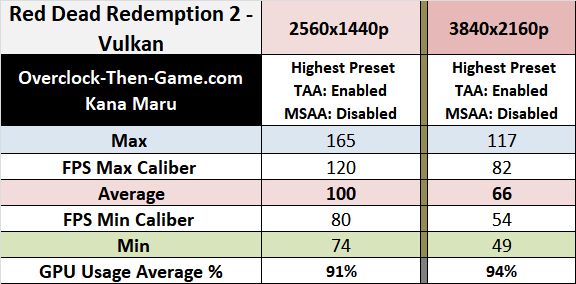

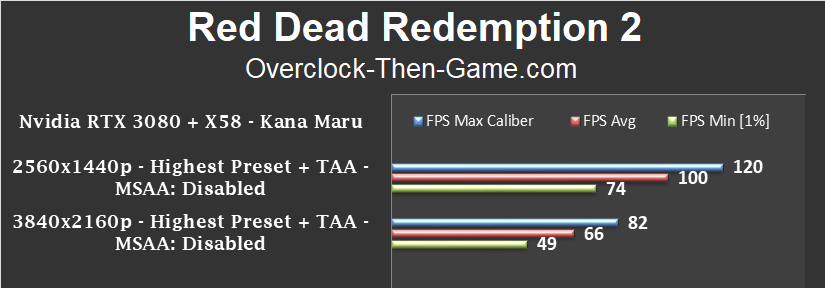

Red Dead Redemption 2

Red Dead Redemption 2 was one of the most anticipated games of 2018. Sadly, the PC version released more than 12 months later, in Q4 2019. That’s still a win for us PC gamers, and we will gladly take a late release over no release. RDR2 has a plethora of graphical settings to adjust, and that is no understatement. Most Rockstar games have plenty of graphical settings, which is a great thing since there are so many different generations of graphics cards on the market. However, too many options can become cumbersome, especially if you don’t have a reference where you can see the effect of the settings you are changing in real time. Games such as Resident Evil 2 and Shadow of the Tomb Raider give you an on-screen image reference where you can easily see what effect each graphical setting has on image quality. You don’t get that feature in Red Dead Redemption 2, and I think it would help greatly when tweaking pages, and I mean literally pages, of graphical settings from a plain black menu screen. Luckily the game has a “preset” slider that can adjust all of the settings for you. The “Quality Preset Level” slider sets the graphics options automatically, so I used the highest preset possible, “Favor Quality”. Naturally I also used the Vulkan API, with TAA, but I disabled MSAA since it usually isn’t needed at high resolutions in the types of games I play, and RDR2 is one of those games. If requested, I can re-run 4K with MSAA enabled. The game looks and plays great. 1440p tops out at around 100fps on average and 4K shows 66fps on average. Overall there isn’t much to worry about: if you need more FPS, 1440p is always a great option, but 4K was very smooth as well. The X58 platform does well at 4K, and at 1440p it keeps the RTX 3080 fairly busy at 91% GPU usage.

Real Time Benchmarks™

X58 + RTX 3080 - 4K - Red Dead Redemption 2 Gameplay

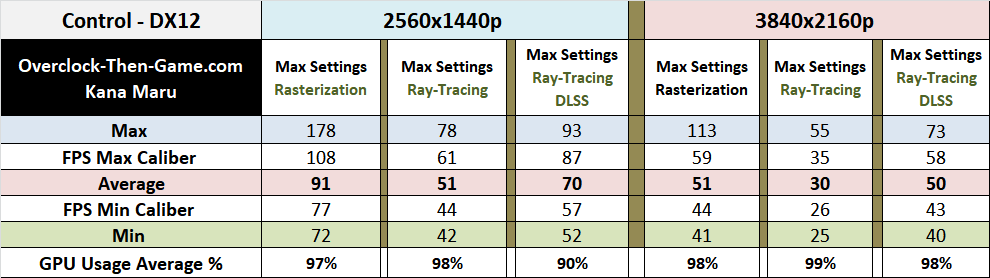

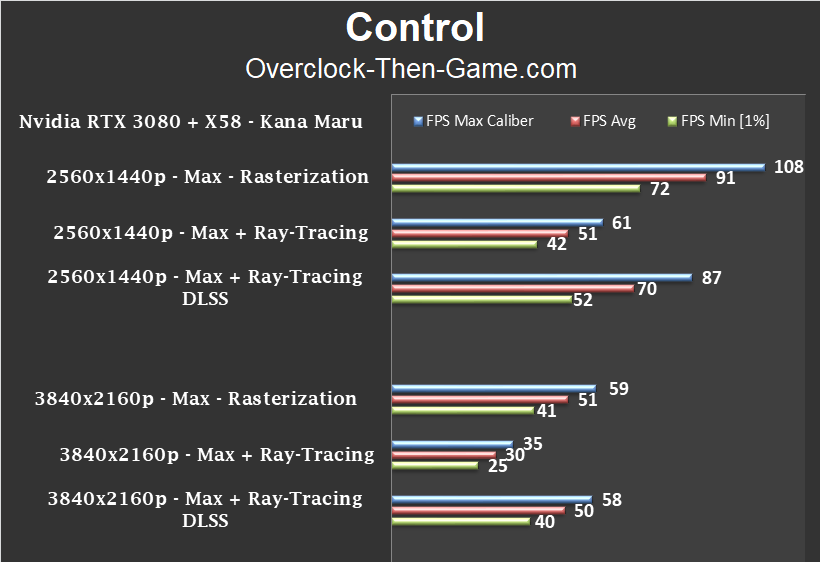

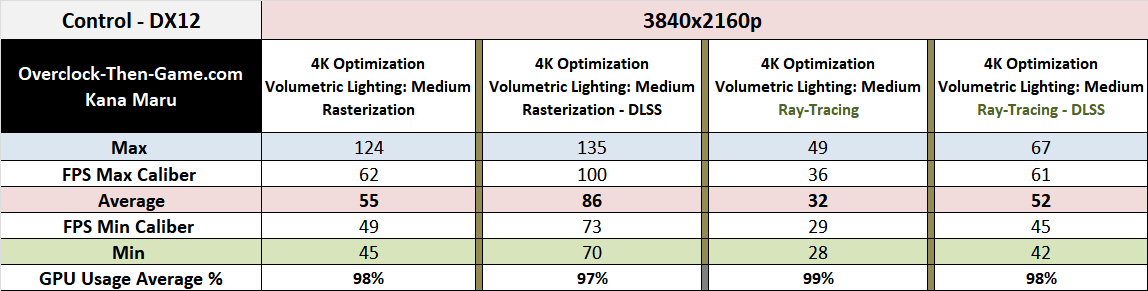

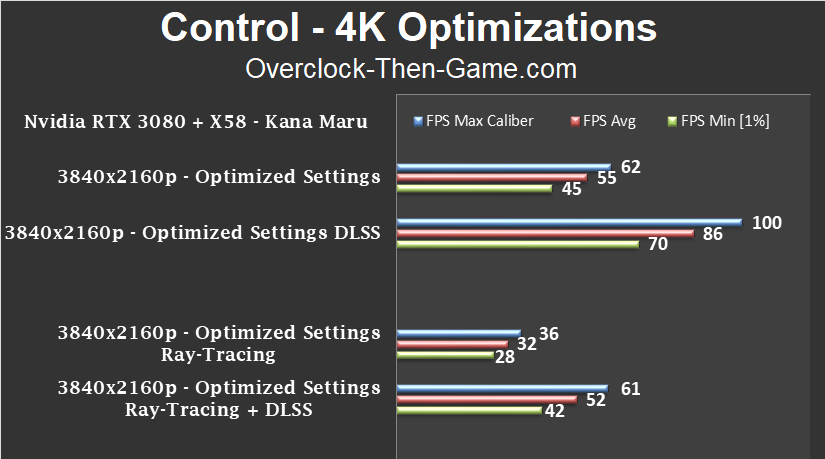

Control

Control makes extensive use of Ray Tracing features, and I set all of them to their maximum settings: Reflections, Transparent Reflections, Indirect Lighting, Contact Shadows and Debris. Without DLSS the cost of Ray Tracing is very noticeable: 4K averages 51fps without RT, but enabling Ray Tracing drops the average by 41% to 30fps. Ray Tracing also introduces frame-timing issues that make the game feel rather “choppy”. DLSS is needed here; when enabled, it pushes Ray Tracing performance back up to 50fps on average and resolves the frame-timing issues. GPU usage was 98% on average. At 1440p, Ray Tracing averages 51fps, and Ray Tracing + DLSS increases performance by 37% to 70fps on average. Sometimes you can see DLSS working and catch a little denoising at all resolutions, but it’s nothing to worry about. If you must stay above 60fps with Ray Tracing, 1440p appears to be the resolution for you. Without DLSS the GPU usage was 98%, and with DLSS enabled it was 90%.
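The percentages above are plain percentage changes between average FPS figures. A minimal Python sketch that reproduces them from the reported averages (the helper name is just for illustration):

```python
def percent_change(before: float, after: float) -> float:
    """Signed percentage change from `before` to `after`."""
    return (after - before) / before * 100.0


if __name__ == "__main__":
    # Average FPS figures reported above for Control.
    print(f"4K, RT on vs. RT off:    {percent_change(51, 30):+.1f}%")  # -41.2%
    print(f"1440p, RT + DLSS vs. RT: {percent_change(51, 70):+.1f}%")  # +37.3%
```

The same helper applied to Quantum Break's 91fps and 46fps averages gives about -49.5%, in line with the roughly 49% drop reported for that game.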

Real Time Benchmarks™

Control - 4K Optimizations

To make the gameplay experience smoother I optimized my graphical settings. These settings resolve the “choppy” or “laggy” gameplay, but they don’t necessarily give a huge boost to the average FPS. My optimized settings lower “Volumetric Lighting” from “High” to “Medium” and disable MSAA; I personally disabled Motion Blur as well. These changes helped the game perform much better, and my average FPS increased by about 8% using normal rendering techniques. The jump is fairly small, but as I stated above the gameplay experience is much smoother. For Ray Tracing you’ll still need DLSS, but the game should run a bit smoother. DLSS isn’t needed for normal rasterization, but I enabled it with the 1440p rasterization results for fun and ran the benchmark anyway.

Real Time Benchmarks™

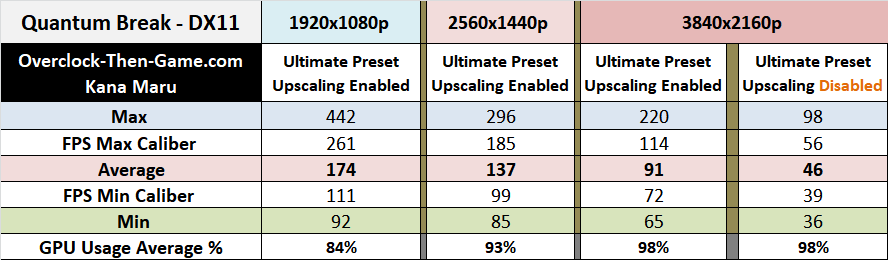

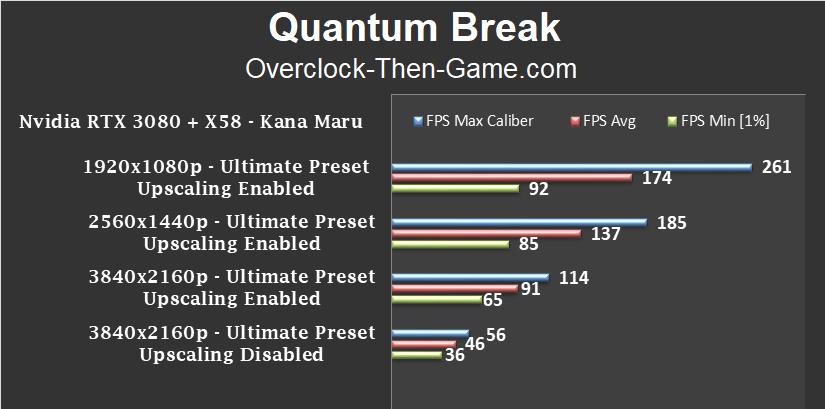

Quantum Break

Taking a quick look at Quantum Break using the Ultimate preset shows that the game runs very well when the Upscaling setting is enabled. There are no issues to report other than the obvious CPU bottleneck at lower resolutions. The issues arise when Upscaling is “Disabled”. With Upscaling enabled, the game takes the previous 4 frames rendered at a lower resolution, applies 4x MSAA, and then upscales them to the native resolution, which keeps the FPS high. With Upscaling disabled, the game renders frames at its native resolution and performance takes a dive. At 4K we see a massive drop of 49%, as the average FPS falls from 91fps with Upscaling enabled to 46fps with it disabled. The game is tough to run at native resolution, which is why enabling Upscaling is recommended.

Real Time Benchmarks™

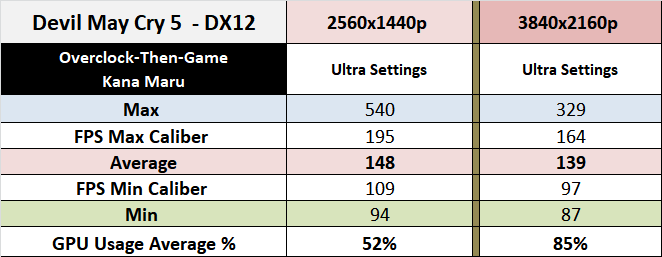

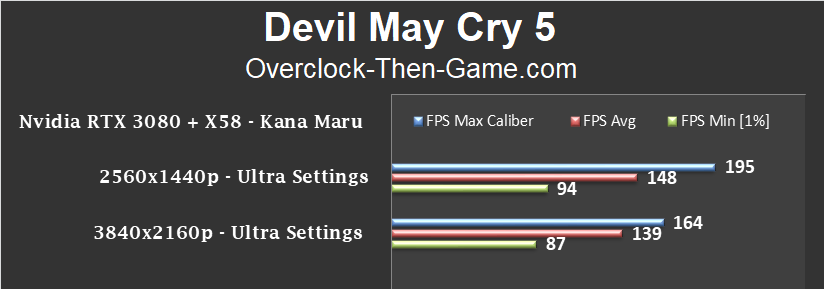

Devil May Cry 5

I really like the RE Engine that this game uses. Capcom’s RE Engine has looked great since its introduction in Resident Evil 7. Devil May Cry 5 looks very good and plays very well at 4K. The engine is well programmed and runs well on many different tiers of hardware. The RTX 3080 doesn’t break a sweat, and the X58 shows what it’s made of, pushing out 139fps at 4K.

Real Time Benchmarks™

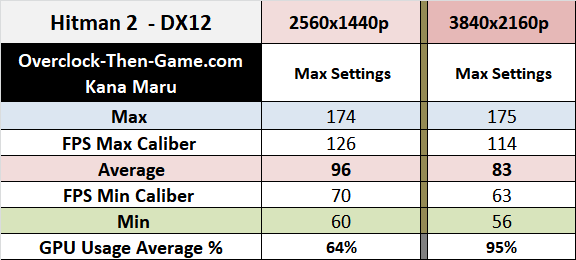

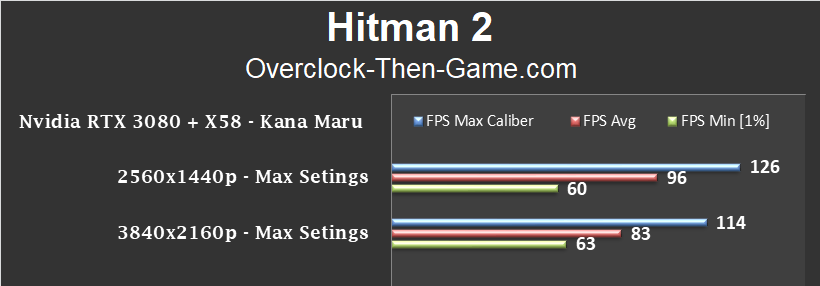

Hitman 2

IO Interactive was one of the few developers to take a AAA title and give it access to "modern" technology many years ago. Back in 2016 IO Interactive added async compute support to their game engine and took advantage of GPU concurrency features. In my original Hitman (2016) benchmarks with my Fury X I saw an increase of up to 34% at 4K when DX12/async compute features were used correctly. This increase didn't come from overclocking, but directly from driver and developer updates. IO Interactive tried their hardest to implement async compute effectively, and they did a great job. You can read about my 2016 Fury X performance increases in Hitman (2016) here. Hitman 2 performs very well using DX12 with async compute. The RTX 3080 runs very smoothly and is never starved for data now that Nvidia has properly supported Async Compute since their SUPER series. I wish more developers would take advantage of features like async compute.

Real Time Benchmarks™

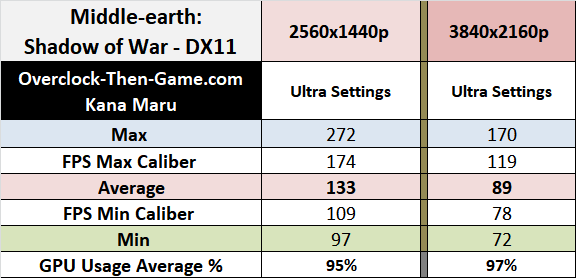

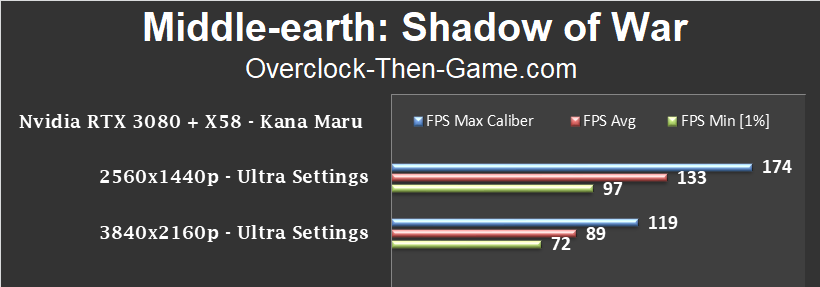

Middle-earth: Shadow of War

Real Time Benchmarks™

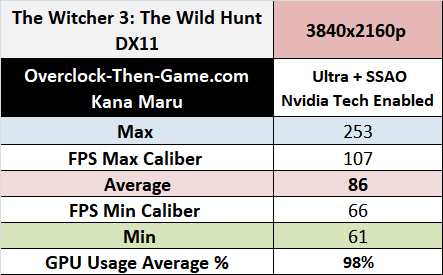

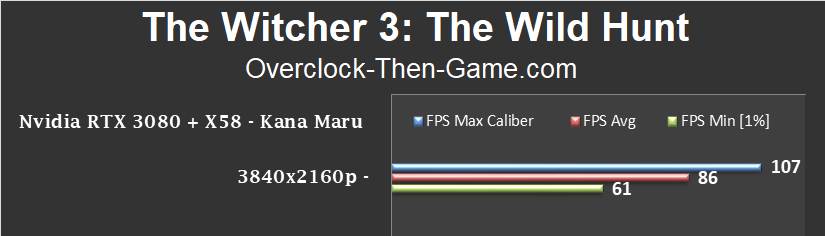

The Witcher 3: Wild Hunt

Since I am running an Nvidia GPU I have enabled all of the Nvidia tech features. This game normally favors Nvidia GPUs because Nvidia worked very closely with the developers during the development of The Witcher 3: Wild Hunt. The game supports plenty of Nvidia tech and, as expected, it runs very well on the RTX 3080. 4K is still pretty intensive, and the CPU holds up pretty well in this title. As usual I picked up a few drops that weren’t noticeable during actual gameplay, and the minimum was 61fps, so the experience was great nonetheless.

Real Time Benchmarks™

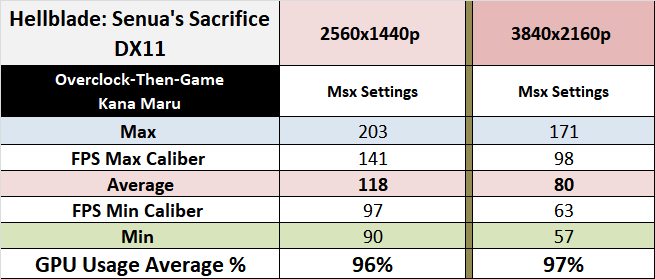

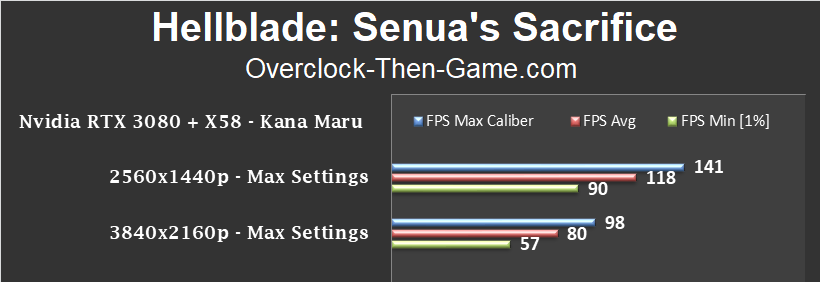

Hellblade: Senua's Sacrifice

This game runs very well. There is a slight CPU bottleneck, but the X58 and the RTX 3080 flex their muscles. As usual the experience was great with the stock RTX 3080 settings.

Real Time Benchmarks™

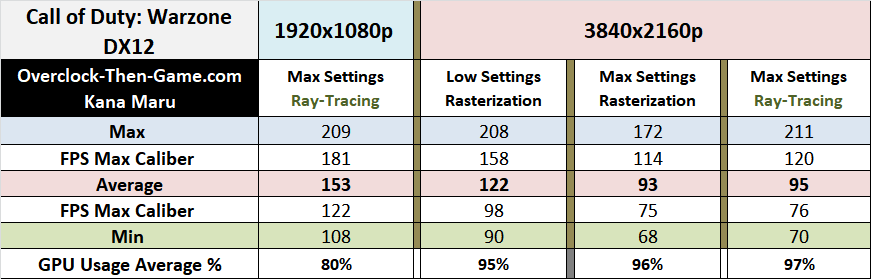

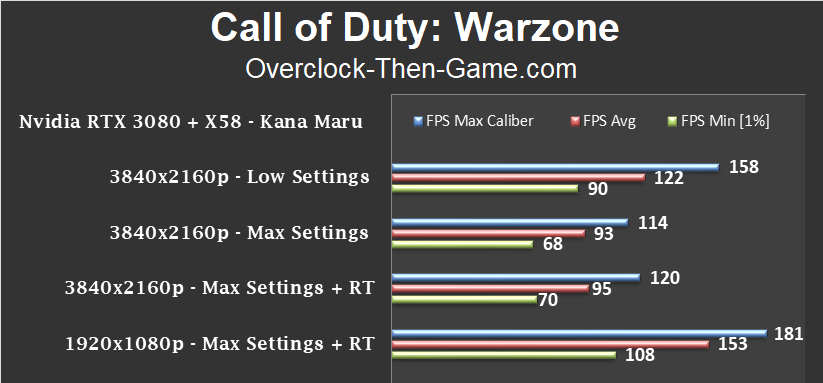

Call of Duty: Warzone

Call of Duty: Warzone supports Nvidia’s Ray Tracing and Reflex features, and DLSS support was recently announced. Ray Tracing does look nice in this game at 4K. Focusing first on the rasterization benchmarks, I average 122fps with Low settings and 93fps with Max settings, more than enough to enjoy the game at 4K with an X58. With Low settings at 4K the CPU doesn’t hit 100% often, yet GPU usage still averages only 95%; that’s still pretty good for the old X58 platform. Cranking the graphics up to Max settings pushes GPU usage up to 96%. Since I am CPU limited, the Ray Tracing performance is equal to the rasterization results for the most part, and Ray Tracing pushes GPU usage up to 97%. Those are still pretty nice results for a nearly 13-year-old platform. Although 4K is the main focus of this review, I also ran a quick 1080p test and averaged 153fps, with GPU usage at only 80%.

Real Time Benchmarks™

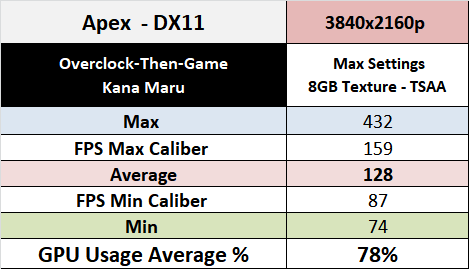

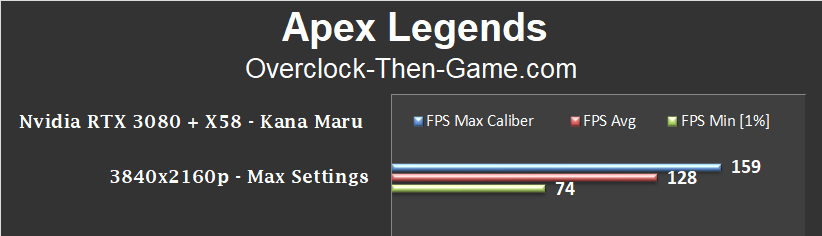

Apex Legends

Apex Legends doesn’t require a lot to run, but there are plenty of graphical settings to max out. I used about 7.7GB of VRAM on average, topping out at 8.14GB. GPU usage averaged 95% at 4K and maxed out at 98%. I averaged 128fps, with the CPU threads hitting 100% more often than not, and average CPU usage was around 78%. The RTX 3080 is a beast and wants even more data.

Real Time Benchmarks™

RTX 3080 + X58 Power Consumption

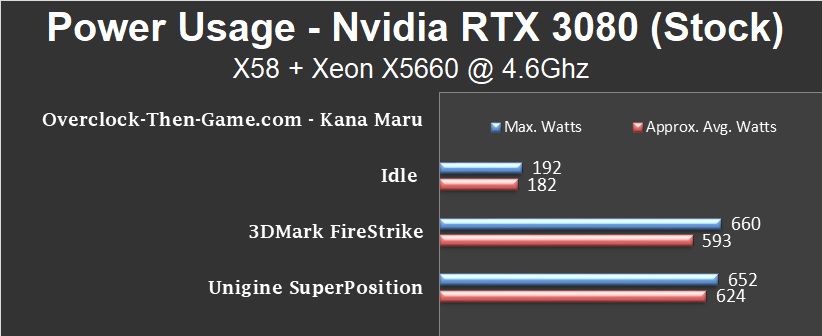

Now let’s take a look at the RTX 3080 and X58 power consumption. Running my CPU at 4.6GHz will definitely pull much more wattage on this old platform. Please note that I am also running Delta and Gentle Typhoon fans, and trust me, there is nothing gentle about those typhoons. These powerful fans can pull a decent amount of wattage. The first thing that caught my attention was the very low idle power consumption of both the X58 and the RTX 3080. Daily light usage outside of gaming pulls very little, only 182 watts on average, and that is without the powerful fans running. With a few of the fans running at decent speeds it will be somewhere around 195 to 200 watts, so take that information as you please. You can subtract about 20 watts from all of my 4.6GHz tests if you like; apart from the idle results, everything is shown as-is with the fans running. Still, pulling only 182 watts at idle is pretty good for a first-generation Intel Core platform paired with Nvidia’s Ampere. This time around I used both 3DMark and Unigine and recorded the power consumption to give a decent representation of both the CPU and GPU running at their max. 3DMark’s Fire Strike pulls 593 watts on average and Unigine’s SuperPosition pulls 624 watts. That’s a decent amount, but we need to see what happens with an actual game instead of synthetic benchmarks.

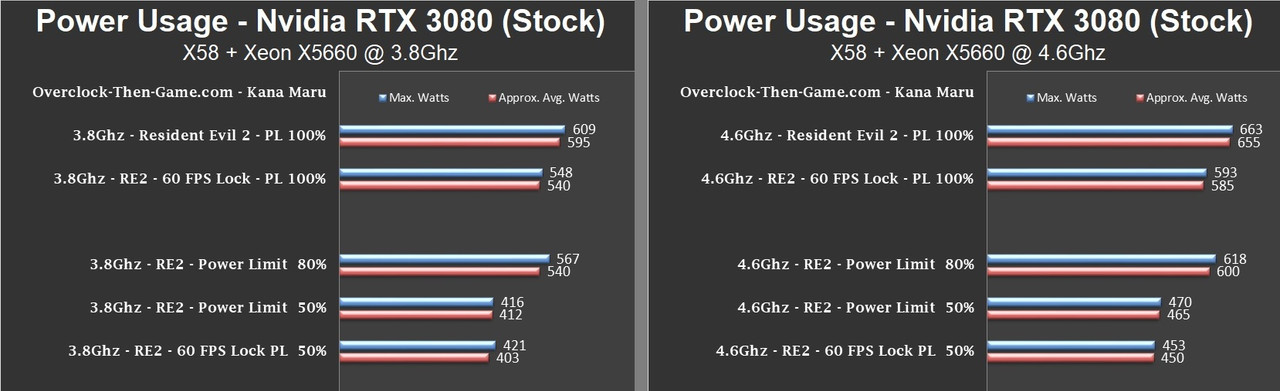

RTX 3080 + X58 - Resident Evil 2 - Total Power Consumption

Now I would like to turn our attention to an actual game, Resident Evil 2. This game performs very well and makes the CPU and GPU work for their frames at 4K. I performed a series of tests using both my 4.6GHz and 3.8GHz overclocks. My 3.8GHz overclock needs very little voltage, maxing out at 1.16 volts on the CPU core, while the 4.6GHz overclock requires 1.36 volts (idle voltage is much lower, obviously). It is worth noting that all benchmarks in my X58 + RTX 3080 article were run using the 4.6GHz overclock; I only ran 3.8GHz for the power consumption comparisons. Running the GPU core at stock with the Power Limit (PL) at its default 100% pulls 655 watts on average with my 4.6GHz overclock. Locking the FPS to 60 drops that by 70 watts, to 585 watts on average. That’s a pretty nice start.

Now if we leave the frame rate unlocked and drop the Power Limit down to 80%, we see a reduction of 55 watts compared to the Power Limit at 100%. That’s great, but I know I can do better. Dropping the Power Limit to 50% brings the average down to 465 watts, a reduction of 190 watts compared to the Power Limit at 100%, and at this point I am still getting around 60-64fps at 4K. So I might as well lock the FPS to 60 with the PL set at 50%. As you can see, I’m then pulling only 450 watts, which is awesome for a high 4.6GHz overclock on a first-generation Intel CPU. I’m sure most of you know this, but I will repeat it for those who don’t: with both Nvidia and AMD GPUs, simply locking the FPS to a specific number won’t always save you the most power, since the GPU will still be running near full speed. Adjusting the Power Limit helps, as do undervolting and underclocking if necessary. In my case, since I am CPU limited at certain resolutions, I can simply find the average FPS my CPU can produce, lock my FPS to that number, and lower the Power Limit so the GPU uses only as much power as it needs to match that average. Otherwise I can lock the FPS to any number I choose and make the GPU match that performance by adjusting the Power Limit.
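Laid out side by side, the Resident Evil 2 savings are easier to compare. This Python sketch only recomputes the averages reported above relative to the 655-watt baseline (the 80% figure is derived from the stated 55-watt reduction); it does not read any sensors or change any GPU setting.

```python
BASELINE_W = 655  # 4.6GHz CPU, stock GPU core, Power Limit 100%, uncapped FPS

# Average total system power reported above for Resident Evil 2 at 4K.
READINGS_W = {
    "PL 100%, 60fps cap": 585,
    "PL 80%, uncapped": BASELINE_W - 55,
    "PL 50%, uncapped": 465,
    "PL 50%, 60fps cap": 450,
}


def savings(baseline_w: float, watts: float) -> tuple[float, float]:
    """Return (watts saved, percent saved) relative to the baseline."""
    saved = baseline_w - watts
    return saved, saved / baseline_w * 100.0


if __name__ == "__main__":
    for config, watts in READINGS_W.items():
        saved, pct = savings(BASELINE_W, watts)
        print(f"{config:<20} {watts}W  saves {saved}W ({pct:.1f}%)")
```

The 50% Power Limit with a 60fps cap comes out about 31% below the baseline, while the FPS cap alone saves about 11%, which illustrates why the Power Limit matters more than the cap by itself.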

RTX 3080 - Temperature and GPU Power Consumption

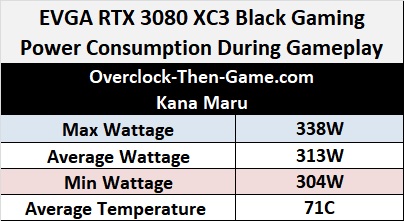

Moving on to GPU temperature, I saw an average of about 71°C. This is a pretty hot GPU, but the performance backs it up. You’ll definitely want cool ambient airflow or liquid cooling if 71°C is a bit warm for you. You can also adjust the Power Limit, which is tied to the GPU temperature limit by default. As far as power consumption goes, the EVGA RTX 3080 XC3 Black Gaming pulls an average of 313 watts across all of my Real Time Benchmarks™, which is pretty good for actual gaming sessions. The minimum was 304 watts and the maximum was 338 watts.

RTX 3080 Overclock - Synthetic Benchmarks

As I explained earlier, I am using the EVGA RTX 3080 XC3 Black Gaming GPU for this review. More information about this GPU can be found on the “RTX 3080 Specifications” page. Below I have listed the Nvidia RTX 3080 Founders Edition as a reference for my EVGA RTX 3080 XC3 overclocks. The EVGA RTX 3080 XC3 Black Gaming’s GPU core is overclocked out of the box by about 8%; however, the memory frequency and other specifications are the same as the Nvidia RTX 3080 Founders Edition.

I was able to add 5% to the memory frequency and increase the GPU core clock by 14% over the Nvidia RTX 3080 FE, which is only 5% over the stock EVGA RTX 3080 XC3 Black Gaming. This GPU is overclocked near its maximum out of the box. With a light load I was able to push the GPU core to 2130MHz, but once a heavy load appears the core clock drops. So I settled on 1950MHz, since I was able to sustain that core clock during 4K gameplay. Above I have listed 1950MHz as both the Core Clock and the Boost Clock, but the core clock will fluctuate slightly, and of course during idle periods the GPU drops to roughly 210MHz. I wasn’t able to push it much further yet, but dialing in an overclock can take a while, so I will share what I have been able to accomplish thus far.
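The 14% figure can be sanity-checked against the Founders Edition reference. This minimal Python sketch assumes Nvidia’s published RTX 3080 Founders Edition boost clock of 1710MHz and 19Gbps GDDR6X memory data rate; neither value is listed in this section.

```python
# Assumed reference values from Nvidia's RTX 3080 Founders Edition specs.
FE_BOOST_MHZ = 1710
FE_MEMORY_GBPS = 19.0

# Values from this review.
SUSTAINED_CORE_MHZ = 1950  # core clock held during 4K gameplay
MEMORY_OC_PCT = 5.0        # memory frequency increase


def gain_pct(reference: float, value: float) -> float:
    """Percentage increase of `value` over `reference`."""
    return (value / reference - 1.0) * 100.0


if __name__ == "__main__":
    core_gain = gain_pct(FE_BOOST_MHZ, SUSTAINED_CORE_MHZ)
    print(f"Core gain over FE boost: {core_gain:.1f}%")
    memory_rate = FE_MEMORY_GBPS * (1 + MEMORY_OC_PCT / 100)
    print(f"Memory data rate with +5%: {memory_rate:.2f} Gbps")
```

Under those assumptions, 1950MHz is about 14.0% above the FE boost clock, and a 5% memory bump puts the data rate at roughly 19.95Gbps.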

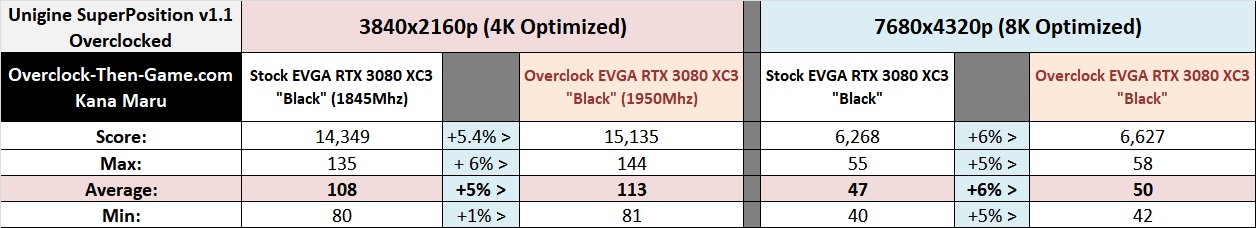

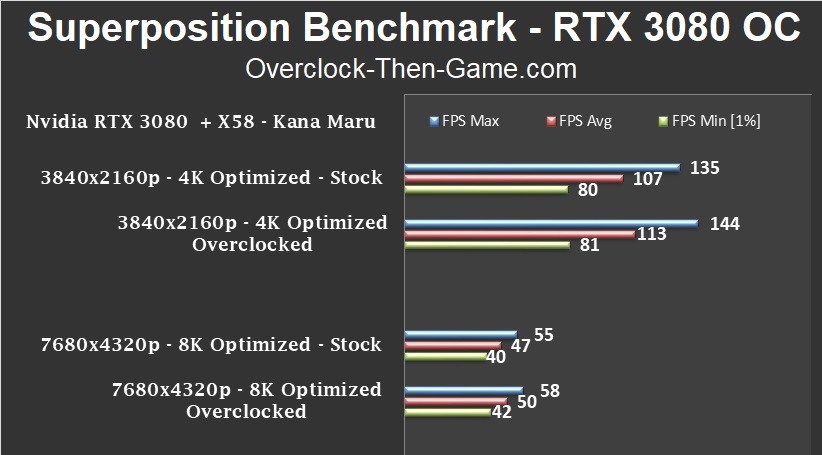

RTX 3080 Overclock - Unigine SuperPosition

Comparing the stock EVGA RTX 3080 XC3 Black Gaming to my overclock, we see roughly a 5% increase in both the 4K and 8K benchmarks.

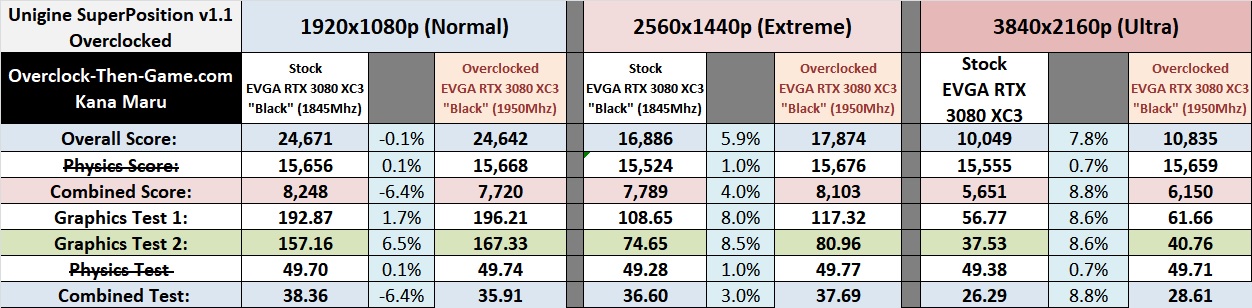

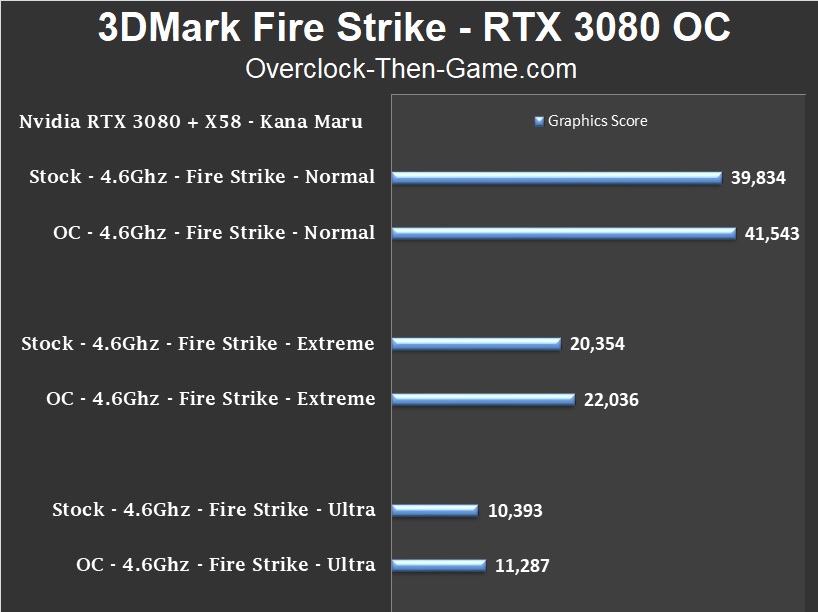

RTX 3080 Overclock - 3DMark Fire Strike

The Physics tests can be ignored since they focus solely on CPU performance. As expected we see very small increases, and in some cases decreases, at 1080p since I am very CPU limited, but at higher resolutions we see some decent increases across the tests. The 1440p breakdown shows an increase of 8.5% in the Graphics Tests, and the Combined score increased by 4%. The 4K (Ultra) results show an increase of about 8.6% in the Graphics Test. So the extra 5% over the stock EVGA XC3 Black Gaming translated into a pretty nice increase so far.

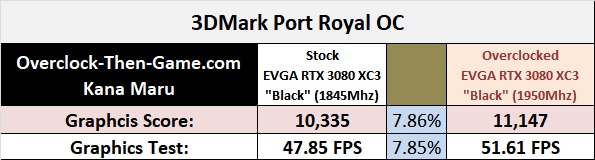

3DMark - Port Royal

It seems like 8% is becoming a normal percentage increase in 3DMark so far.

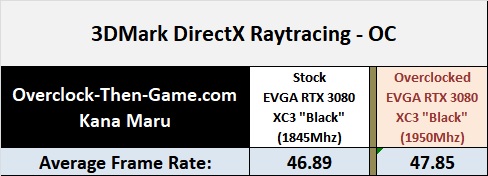

3DMark - DirectX Ray Tracing

One extra frame per second, for a 2% increase. Minor, but an increase is an increase, I guess.

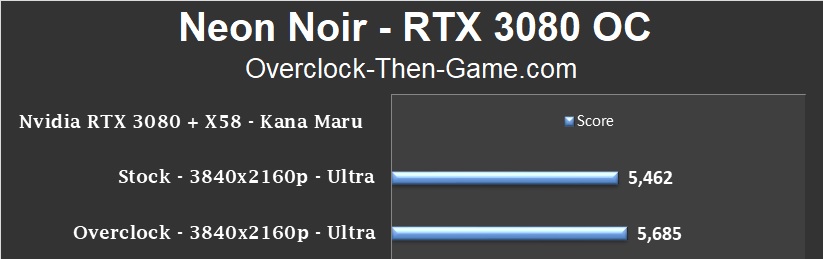

Neon Noir - Ray-Tracing

Neon Noir shows a 4% increase at 4K.

RTX 3080 Overclock - Doom & Shadow of the Tomb Raider

On the previous page we saw a decent performance bump in the Synthetic Benchmarks for the most part. Now we will look at two popular titles at 4K. RTX 3080 prices have increased, and buying one on the aftermarket is a no-go thanks to scalpers. No one is spending upwards of $700-$900+ on a GPU like this for lower resolutions, except for competitive gaming purposes, and even then this GPU would be overkill. I am also far less likely to be CPU bottlenecked at 4K anyway.

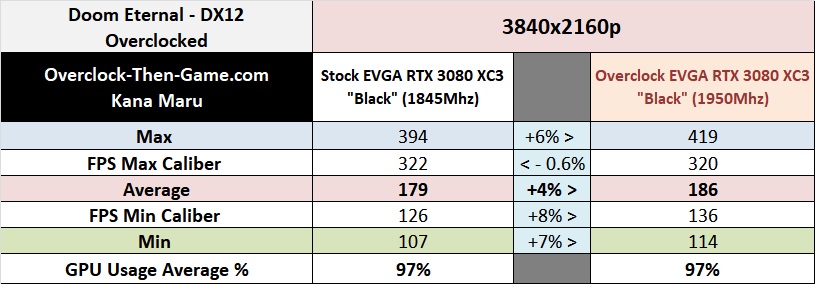

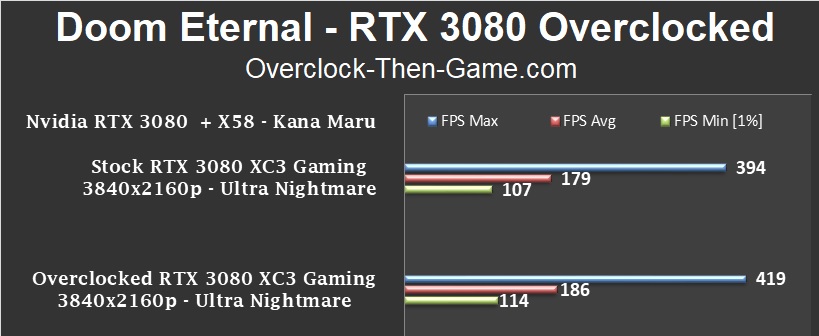

Doom Eternal - RTX 3080 Overclocked

The average FPS increases by 4%, but the minimums increase by roughly 7% to 8%. You’ll never notice it in this game since it runs so well, but it was at least nice to see an increase of 7fps.

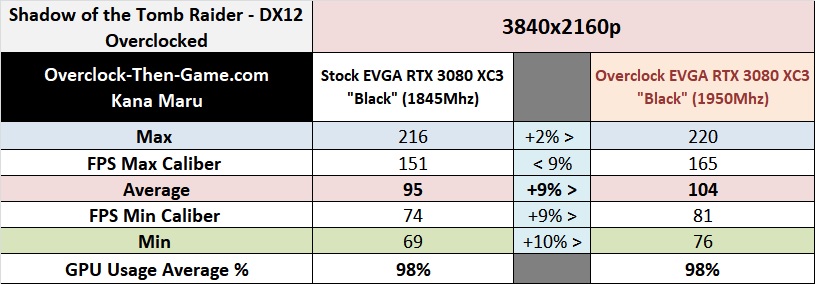

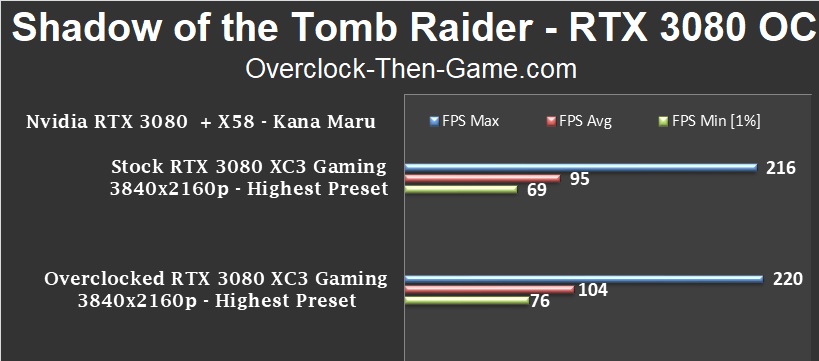

Shadow of the Tomb Raider - RTX 3080 Overclocked

We see an increase of 9FPS on average and 7FPS for the minimum FPS.

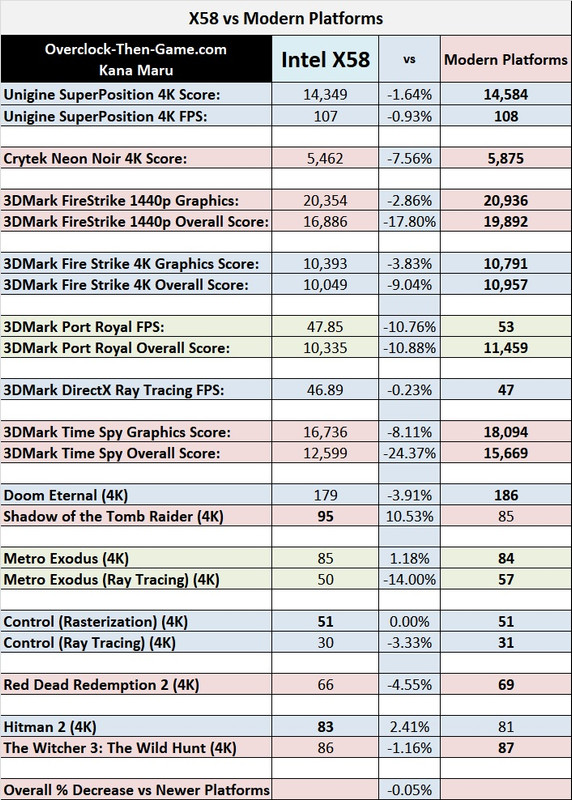

Intel X58 vs Modern Platforms

So from what we have seen, and as most people probably expected, the 2008-era X58 performs very well at higher resolutions such as 4K. Below I have compared the X58 + X5660 against modern machines running anywhere from 8C/16T up to 16C/32T. The comparisons draw on several sources for nearly all of the games and benchmarking apps listed below. Even though PCIe 4.0 delivers roughly 330% more bandwidth than PCIe 2.0 in 3DMark’s PCIe Bandwidth Test, performance at 4K doesn’t change that much. Certain games at 4K that depend more on CPU performance will show higher FPS than the older X58/PCIe 2.0 platform, but in most games the difference is within 1 to 5fps. For example, based on my comparisons against newer platforms, Control (4K) performs identically with rasterization and shows a 1fps difference with Ray Tracing. Other games like Metro Exodus (4K) can show up to a 7fps difference with Ray Tracing, but only a 1fps difference with rasterization. Lower resolutions can and will benefit from a faster CPU, but when does that become a real issue for people running an older X58 platform in 2021? For instance, in Doom Eternal at 1080p I pull over 300fps, which is more than enough for the majority of monitors sold today. A newer platform can pull well over 320-350+fps, but in most cases gamers won’t be buying this GPU for its 1080p or 1440p performance. This is a 4K GPU for sure, but Ray Tracing and the APIs in use, in addition to the GPU itself, will ultimately be the limiting factors in most cases.
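For context, the theoretical link rates alone put PCIe 4.0 at roughly four times PCIe 2.0. The Python sketch below uses the PCI-SIG per-lane data rates and line encodings to compute theoretical one-direction bandwidth; measured results such as 3DMark’s PCIe Bandwidth Test will differ from these ideal figures.

```python
# Per-lane data rate (GT/s) and line-encoding efficiency for each generation.
PCIE_GENS = {
    "PCIe 2.0": (5.0, 8 / 10),      # 8b/10b encoding (X58)
    "PCIe 3.0": (8.0, 128 / 130),   # 128b/130b encoding
    "PCIe 4.0": (16.0, 128 / 130),  # 128b/130b encoding
}


def link_bandwidth_gbs(gen: str, lanes: int = 16) -> float:
    """Theoretical usable bandwidth in GB/s, one direction."""
    rate_gt_s, efficiency = PCIE_GENS[gen]
    return rate_gt_s * efficiency * lanes / 8  # 8 bits per byte


if __name__ == "__main__":
    for gen in PCIE_GENS:
        print(f"{gen} x16: {link_bandwidth_gbs(gen):.2f} GB/s")
    ratio = link_bandwidth_gbs("PCIe 4.0") / link_bandwidth_gbs("PCIe 2.0")
    print(f"PCIe 4.0 vs. PCIe 2.0: {ratio:.2f}x")
```

That works out to about 8GB/s for the X58’s PCIe 2.0 x16 slot versus about 31.5GB/s for PCIe 4.0, yet as the comparisons show, the 4K results barely move.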

Intel X58 vs Modern Platforms Comparisons

Below we can see the comparisons against modern platforms. The list below shows a compiled average of the modern platforms against my X58 + EVGA RTX 3080 XC3 Black Gaming + PCIe 2.0. The “Modern Platforms” results come from various RTX 3080s (Founders Edition and 3rd-party overclocked RTX 3080s). I didn’t use my overclocked RTX 3080 results; I only used my stock EVGA RTX 3080 XC3 Black Gaming results from this review. Some of the percentage differences look worse than the actual FPS differences. The breakdown might be helpful for those wondering how the older PCIe 2.0 X58 architecture performs against the newer platforms from Intel and AMD. When I combine the total performance numbers across the samples listed below, we see an overall decrease of only 0.05% compared to newer platforms. Please note that there are a ton of games out there to benchmark, and I chose the results below based on the amount of information I could find and validate from several sources. Feel free to leave your comments in the comment section on the next page.

Conclusion & Comments

Alright, we have reached the end of my review, and I can honestly say that the RTX 3080 is a very nice GPU. I have to give Nvidia a big compliment for supporting older BIOS/non-UEFI platforms; this will definitely help them make more sales to enthusiasts across several platforms. The RTX 3080 performs very well, and depending on the title 1440p might still be the sweet spot, while 4K is the main selling point. When there is no Ray Tracing involved, the GPU normally dominates the 4K experience in nearly all games. Several games will give the RTX 3080 a hard time even without Ray Tracing, which will make 60fps the standard again, but this time at 3840x2160 (4K). DLSS will definitely be a great aid until the hardware can handle the workload natively. Enabling Ray Tracing shows that Nvidia is nearly there in some games, while certain games seem to be a bit much for the GPU to compute. I’m sure that as more mature drivers release and more games are built around the technology, we will see better implementations. I’m waiting patiently for Vulkan Ray Tracing to take off, but Microsoft’s DirectX Ray Tracing is very popular right now, and most games will use that API to implement RT.

Thank you for reading my “X58 + RTX 3080 Review” article. Feel free to leave a comment below and to share this article. If you would like to see more content like this, please consider donating to my Patreon or PayPal by clicking the images below. Thank you.