Gracemont Core – Architectural Deep Dive

Gracemont = Efficient "Atom" Core

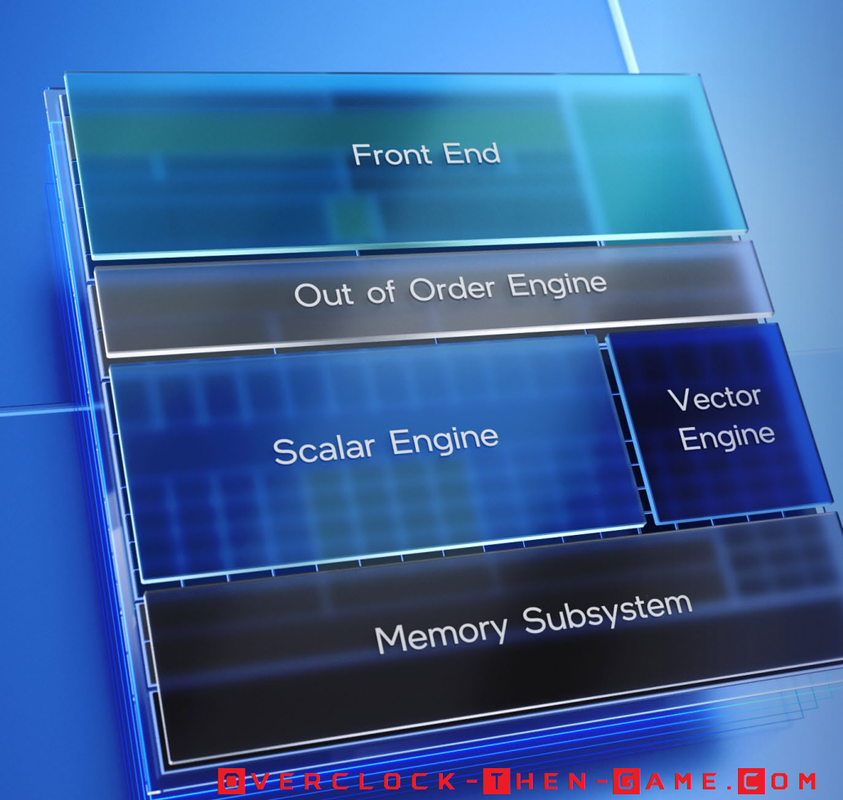

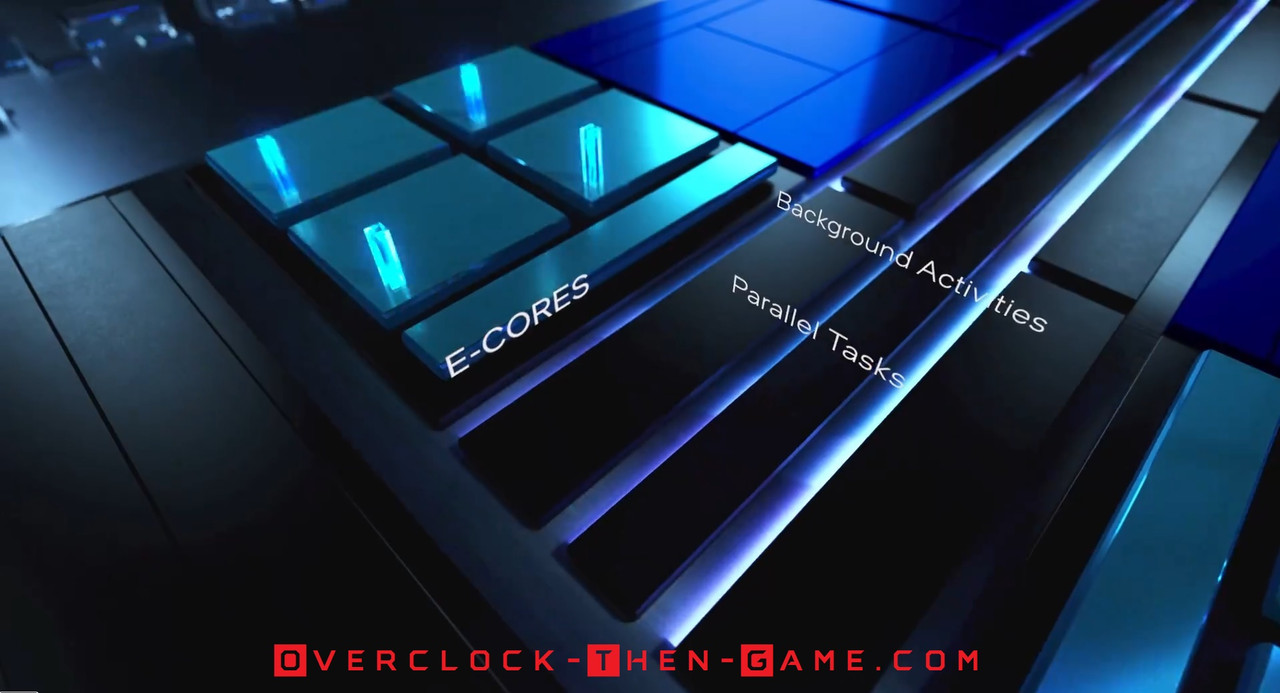

We will start with the Efficient cores, codenamed Gracemont. Gracemont’s focus is throughput, since it will be running the background tasks that don’t require a ton of performance to complete. Those could be basic OS tasks or light workloads such as word processing or web browsing. As I stated previously, these Efficient (E) cores do not include Hyper-Threading, so they are limited to one thread per core. Intel did not want to add more complexity to an already monumental architecture and push Alder Lake past the power budget it had already set. Adding Hyper-Threading to the E-Cores would have done just that, adding both power draw and development time. It is possible that future platforms will bring Hyper-Threading to the efficient cores.

Intel has deliberately decided to compare the Gracemont cores to Skylake cores (6th Gen) for single-threaded performance and throughput. If the numbers hold true then the smaller Gracemont cores could prove to be great for background tasks and efficiency. Intel has broken its Efficient x86 Core microarchitecture into 5 areas: Front End (instructions, branch prediction, decoding etc.), Out of Order Engine (scheduling, allocation etc.), Scalar Engine (execution units), Vector Engine (EUs for complex data) and Memory Subsystem (L1 Data Cache, L2 Cache, TLB, SDB, LLB etc.). We will take a look at these different areas below in the architectural deep dive.

Gracemont vs Skylake

Intel claims that a 4-Core \ 4-Thread Gracemont (E-Core) cluster is faster than a 2-Core \ 4-Thread Skylake configuration while using less power. Some gain should be expected, since Alder Lake E-Cores are being compared to the much older 6th Gen Skylake; however, the bigger story is how much more efficient Gracemont is and how much performance the 4C\4T (no Hyper-Threading) Gracemont cluster gains over the 2C\4T (HT enabled) Skylake cores. In multi-threaded scenarios Intel is claiming 80% more performance while still using less power, or the same throughput at 80% less power. That sounds like a great accomplishment if it holds true. For single-threaded work (1C\1T Gracemont vs 1C\1T Skylake), Intel says Gracemont delivers 40% more performance at the same power, or the same performance at less than 40% of the power, which also sounds great.
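To put efficiency claims like these into a single number, I find it handy to think in performance per watt. The sketch below is only my own arithmetic, not anything published by Intel: `perf_per_watt_gain` is a hypothetical helper, and the two scenarios are assumptions about how the 80% figures could be combined.

```python
def perf_per_watt_gain(perf_ratio: float, power_ratio: float) -> float:
    """Return new perf-per-watt divided by old perf-per-watt.

    perf_ratio  -- new performance / old performance (1.0 = same speed)
    power_ratio -- new power / old power (0.2 = 80% less power)
    """
    if perf_ratio <= 0 or power_ratio <= 0:
        raise ValueError("ratios must be positive")
    return perf_ratio / power_ratio


# Assumption: same multi-threaded throughput at 80% less power.
iso_throughput = perf_per_watt_gain(perf_ratio=1.0, power_ratio=0.20)

# Assumption: 80% more performance AND 80% less power at the same time,
# which is the most optimistic way to read the two numbers together.
both_at_once = perf_per_watt_gain(perf_ratio=1.8, power_ratio=0.20)

print(f"iso-throughput reading: {iso_throughput:.1f}x perf per watt")
print(f"both-at-once reading:   {both_at_once:.1f}x perf per watt")
```

The gap between the two readings (roughly 5x versus 9x) is why it matters whether the performance and power figures apply at the same operating point.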

Front End

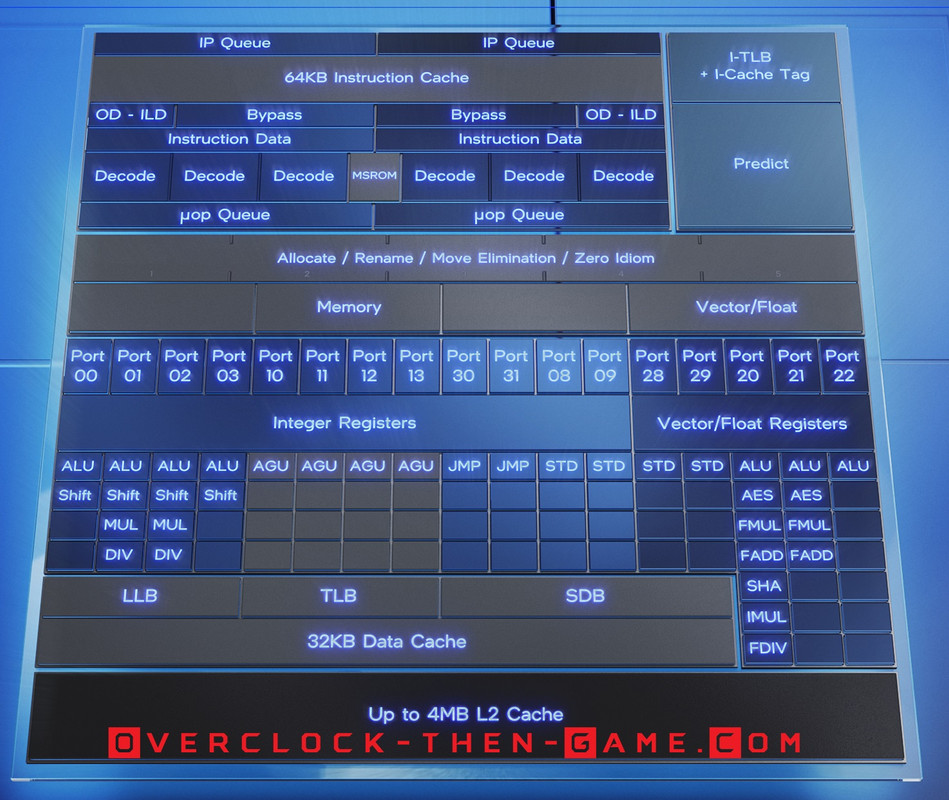

Even without Hyper-Threading at the hardware level, it appears that the Gracemont cores will perform well. We will now dive into the microarchitecture to see how this is possible. While trying to balance power efficiency and performance, Intel decided on a 64KB Instruction Cache, which is double the 32KB Instruction Cache on my 1st Generation Nehalem\Westmere CPU. Also unlike my 1st Generation CPU, the 12th Generation Gracemont core apparently has the Instruction Length Decoder within the Instruction Cache. This gives the decoders in later stages everything they need while removing additional stages from the pipeline. On my 1st Generation Nehalem\Westmere CPU there were multiple stages with the Instruction Decoder and Queues. However, the Gracemont L1 Data Cache remains the same size as on my 1st Gen CPU, which is 32KB. Intel is definitely making the Front End wider to keep the core fed with plenty of instructions. Gracemont can decode up to 6 instructions per cycle.

E-Core Memory Subsystem

I have been learning more about Intel’s memory subsystem and this is what I could gather. The L2 Cache can be 2MB (consumer Alder Lake-S) or up to 4MB (enterprise Xeon) depending on the CPU model. That is an increase over Rocket Lake-S, which had only 512KB, and over my 1st Gen CPU, which contained only 256KB. In essence that is a 300% (2MB) to 700% (4MB) increase in L2 cache over Rocket Lake and a 700% (2MB) to 1,500% (4MB) increase over my 1st Gen Westmere CPU, keeping in mind that the Gracemont L2 is shared by a cluster of cores while those older L2 caches were per core. Since the Gracemont cores have no Hyper-Threading, the larger L2 cache can help greatly within the cluster of cores and hopefully avoid wasting long cycles on system memory reads. The L2 cache holds both instructions and data, unlike the L1 cache, which is split into separate data and instruction caches.

The 2MB L2 cache is shared between the 4 Gracemont cores (i7-12700K). If your CPU model has 8 Gracemont cores (i9-12900K) then there are 2 clusters of Gracemont cores. The first cluster (x4 Gracemont cores) shares one L2 cache and the second cluster (x4 Gracemont cores) shares another. That gives you a total of 4MB of L2 Cache if you have two Gracemont clusters (i9-12900K\KF), which is added to the Performance cores’ total L2 cache discussed later in this article. Alder Lake supports up to 30MB of L3 Cache; I will speak more about the L3 cache later in the article in the “P-Core Memory Subsystem” section.
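The exact percentages depend on whether a megabyte is counted as 1,000KB or 1,024KB. Here is a quick check of the arithmetic, assuming binary units (1MB = 1,024KB); with decimal units the results come out slightly lower (about 290%, 681% and 1,463%). The helper name and dictionary labels are mine.

```python
KB_PER_MB = 1024  # assumption: binary units (1 MB = 1,024 KB)


def percent_increase(old_kb: int, new_kb: int) -> float:
    """Percentage increase going from old_kb to new_kb."""
    return (new_kb - old_kb) / old_kb * 100


# Older per-core L2 sizes mentioned above, in KB.
baselines = {"Rocket Lake-S": 512, "1st Gen Westmere": 256}
# Gracemont cluster L2 sizes mentioned above, in MB.
gracemont_l2_mb = [2, 4]

increases = {
    (name, mb): percent_increase(old_kb, mb * KB_PER_MB)
    for name, old_kb in baselines.items()
    for mb in gracemont_l2_mb
}
for (name, mb), pct in increases.items():
    print(f"{mb}MB vs {name}: +{pct:.0f}%")

# Two 4-core clusters, each with its own 2MB L2 (the i9-12900K case).
total_e_core_l2_mb = 2 * 2
# Average share per core if one 2MB L2 were split evenly across 4 cores.
per_core_share_kb = 2 * KB_PER_MB // 4
print(f"E-core L2 total: {total_e_core_l2_mb}MB, "
      f"average share per core: {per_core_share_kb}KB")
```

Seen per core, an even split of a 2MB cluster cache works out to the same 512KB that Rocket Lake had, although any single Gracemont core can use more than its share when its neighbours are idle.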

Instruction Cache Updates

So far this is pretty exciting. Intel says that Alder Lake will have their first “on-demand” Instruction Length Decoder, which will be stored within the Instruction Cache. That should help performance greatly, since that step has been consolidated and will help the CPU decide early in the pipeline whether it needs to go through the entire decoder stage or not. If the CPU recognizes the data\code then this process can be skipped; if the CPU does not recognize the data\code then it can be processed quickly within the Instruction Cache. This way the CPU won’t necessarily have to wait for decoding that traditionally happened in later stages, as on my older 1st Gen CPU. This wasn’t a big issue for many years, but consolidating work in the pipeline is always a great thing.

To aid these workloads, the branch target buffer has been increased to 5,000 entries, along with more history entries for branch prediction, so the Gracemont core can be as accurate as possible when working with instructions. Accuracy naturally leads to efficiency, since branch prediction hits improve the performance\power efficiency ratio without taking any other microarchitecture improvement into account. A deeper branch prediction cache means that misses can be caught and resolved before reaching crucial stages later in the pipeline, where they could cause stalls and hurt performance and efficiency.
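To show why more branch history can translate into better accuracy, here is a toy predictor. It is a textbook-style table of 2-bit saturating counters indexed by recent outcomes, not Intel’s actual design (which is not public in this detail); the function name and the branch pattern are made up for illustration.

```python
def correct_predictions(outcomes, history_bits):
    """Count correct guesses from a table of 2-bit saturating counters.

    The table is indexed by the last `history_bits` branch outcomes, so
    history_bits=0 means a single counter with no history at all.
    """
    table = [2] * (1 << history_bits)   # 2 = "weakly taken" starting state
    mask = (1 << history_bits) - 1
    history = 0
    correct = 0
    for taken in outcomes:
        counter = table[history]
        predicted_taken = counter >= 2
        if predicted_taken == taken:
            correct += 1
        # Train the counter toward the real outcome, saturating at 0 and 3.
        table[history] = min(counter + 1, 3) if taken else max(counter - 1, 0)
        # Shift the real outcome into the history register.
        history = ((history << 1) | int(taken)) & mask
    return correct


# Hypothetical loop-like branch: taken, taken, not taken, repeated 100 times.
pattern = [True, True, False] * 100

no_history = correct_predictions(pattern, history_bits=0)
two_bits = correct_predictions(pattern, history_bits=2)
print(f"no history: {no_history}/{len(pattern)} correct")
print(f"2 bits:     {two_bits}/{len(pattern)} correct")
```

With no history the single counter keeps guessing "taken" and misses every third branch, while two bits of history are enough to learn this particular pattern after one early miss. Real code has far messier patterns, which is where larger tables and longer histories earn their keep.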

Feeding the Beast & Power Efficiency

Moving down the Gracemont microarchitecture we see a much larger allocation buffer and out-of-order window. The clustered decoder, as Intel calls it, can provide up to six instructions per cycle, while the out-of-order window holds a total of 256 entries (a 100% increase over the 128 entries in my 1st Gen CPU). This feeds the Execution Units via ports, and Gracemont comes packed with plenty of them for many operations per clock. Having such a large number of entries also allows more work to be completed in parallel when there aren’t any dependencies. If all goes well the EUs should always have plenty of work to execute. There are 17 execution ports in each Gracemont core, which should be capable of some serious parallel workloads. So if you have 8 Gracemont cores (i9-12900K) then you could theoretically have 136 execution ports working at once if they are all being utilized for different purposes.
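The idea that a bigger window exposes more independent work can be sketched with a toy model. This is a deliberately simplified scheduler of my own (every instruction takes one cycle, no retirement or memory effects), not a description of Gracemont’s real out-of-order engine; `cycles_to_issue`, `chain` and the example program are all hypothetical.

```python
def cycles_to_issue(deps, window, ports):
    """Cycles needed to issue every instruction in a toy out-of-order core.

    deps[i] lists the earlier instructions that instruction i waits on.
    Each cycle the core looks at the oldest `window` unissued instructions
    and issues up to `ports` of them whose inputs were issued in an earlier
    cycle (every instruction takes exactly one cycle in this model).
    """
    if window < 1 or ports < 1:
        raise ValueError("window and ports must be at least 1")
    for i, producers in enumerate(deps):
        if any(p < 0 or p >= i for p in producers):
            raise ValueError("instructions may only depend on earlier ones")

    issued_in = [None] * len(deps)   # cycle in which each instruction issued
    cycle = 0
    while None in issued_in:
        cycle += 1
        visible = [i for i, c in enumerate(issued_in) if c is None][:window]
        used_ports = 0
        for i in visible:
            if used_ports == ports:
                break
            ready = all(
                issued_in[p] is not None and issued_in[p] < cycle
                for p in deps[i]
            )
            if ready:
                issued_in[i] = cycle
                used_ports += 1
    return cycle


def chain(start, length):
    """A run of instructions where each one depends on the previous one."""
    return [[]] + [[start + k] for k in range(length - 1)]


# Two independent 8-instruction dependency chains, one after the other.
program = chain(0, 8) + chain(8, 8)

small_window = cycles_to_issue(program, window=4, ports=2)
large_window = cycles_to_issue(program, window=16, ports=2)
print(f"window of 4:  {small_window} cycles")
print(f"window of 16: {large_window} cycles")
```

The small window spends its first few cycles staring at one dependency chain, while the large window can see the second chain immediately and run both side by side. The same number of ports finishes sooner simply because more independent work is visible.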

If certain areas within the CPU aren’t being utilized, Intel has included hardware that works alongside software to shut down unused cores, Execution Units and ports within microseconds rather than milliseconds, according to Intel. However, I have also read that this could get down to nanoseconds. If Intel has been updating its older advanced power-saving methods, and we know it has, it is very possible that parts of the L2 cache will drop into a very low power state when those regions haven’t been used for some time. The same should be true for parts of the L3 cache as well.

There are 4 INT ALUs, 2 FP\Vec units and a Vector ALU. Intel packed in 4 total AGUs (x2 Store & x2 Load), which should help calculate addresses effectively for typical workloads. The Load\Store units will be able to handle 32-byte reads. Intel has also included 2 FP\Vec Store ports, which should also be effective for complex workloads. There are also 2 Jump ports and 2 dedicated Store Data EUs. Now we can begin to see how the Gracemont cores are able to compete against Skylake cores, with potentially up to 80% more performance or up to 80% less power, even though there is a lack of Hyper-Threading. Intel’s out-of-order (OoO) scheduling must be top notch for this type of bold marketing. As far as I know the scheduler should be able to send operations to any port as needed. All of this performance explains why Intel needed to increase the Instruction Cache and the out-of-order entries and expand other areas within the core.

The Gracemont E-Cores support the same instruction sets as the Performance cores (Golden Cove). In order to use FP16 instructions the Performance cores must be enabled. In order to use AVX-512 it appears that the Efficient Gracemont cores will need to be disabled, and the motherboard OEM will need to support or allow the feature.
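As a sanity check, the units listed above can be tallied against the 17 execution ports mentioned earlier. The grouping and labels below are my own reading of that list (in particular, counting the FP\Vec units and the Vector ALU as three separate ports is an assumption), so treat it as bookkeeping rather than an official port map.

```python
# Port groups as I read them from the list above (labels are mine).
ports_per_core = {
    "INT ALU": 4,
    "load AGU": 2,
    "store AGU": 2,
    "jump": 2,
    "store data": 2,
    "FP/Vec store": 2,
    "FP/Vec": 2,
    "vector ALU": 1,
}

total_ports = sum(ports_per_core.values())
e_cores = 8  # two clusters of four, as on the i9-12900K

print(f"ports per core: {total_ports}")
print(f"ports across {e_cores} E-cores: {total_ports * e_cores}")
```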

So this tells me that even the Efficient Gracemont cores alone should bring some big updates and performance increases over my archaic Intel X58 build with its 1st Gen 45nm (quad-core) \ 32nm (hexa-core) CPUs. Per core, the 32nm Westmere had a maximum of 6 execution ports: 3 dedicated to compute workloads (INT & FP\Complex) and 3 dedicated to memory operations (1 load AGU, 1 store AGU, 1 Store Data). I am hoping that consumers can eventually have a streamlined way to manually control which cores (P-Core or E-Core) an application can use.
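Until something more streamlined exists, operating systems already expose CPU affinity, which can be used as a blunt manual control. Below is a minimal sketch for Linux using Python’s `os.sched_setaffinity`. The big assumption is the numbering: it guesses that all P-core threads are enumerated first and the E-cores last, which you should verify on your own machine (for example with `lscpu`) before relying on it. Both helper functions are hypothetical names of mine.

```python
import os


def e_core_cpu_ids(p_cores: int, e_cores: int, threads_per_p_core: int = 2) -> set[int]:
    """Guess the logical CPU ids of the E-cores.

    Assumption: the OS numbers every P-core thread first and the
    single-threaded E-cores last. Verify on your own system (e.g. lscpu).
    """
    first_e = p_cores * threads_per_p_core
    return set(range(first_e, first_e + e_cores))


def pin_current_process(cpu_ids: set[int]) -> bool:
    """Restrict this process to cpu_ids; return True if affinity changed."""
    if not hasattr(os, "sched_setaffinity"):   # Linux-only API
        return False
    # Only keep ids this process is actually allowed to run on.
    usable = cpu_ids & os.sched_getaffinity(0)
    if not usable:
        return False
    os.sched_setaffinity(0, usable)
    return True


# Example guess for 8 P-cores (16 threads) plus 8 E-cores.
print(sorted(e_core_cpu_ids(p_cores=8, e_cores=8)))
```

Calling `pin_current_process(e_core_cpu_ids(8, 8))` would then keep a background script on the E-cores of an i9-12900K, provided the numbering assumption holds on that system.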
