Westmere-EP - X5660 Full Review

Real Time Benchmarks™

X5660 + X58 + AMD Fury X 2015 Results Here:

https://overclock-then-game.com/index.php/benchmarks/8-amd-fury-x-review

X5660 + X58 + AMD Fury X 2016 Results Here:

https://overclock-then-game.com/index.php/benchmarks/15-crimson-relive-16-12-1-several-games-benchmarked-4k

OLD GTX 670 SLI results below:

Real Time Benchmarks™ is something I came up with to differentiate standalone benchmark tools from actual gameplay. I play each game for a predetermined time or on a specific online map, depending on the game mode and the level\mission at hand, and I tend to go for the most demanding levels and maps. I capture all of the frames and use 4 different methods to ensure the frame rates are correct for comparison. In rare cases I'm forced to use 5 methods to determine the fps. It takes a while, but it is worth it in the end.
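The review does not say which capture tools or which 4 methods were used, so the following is only a rough sketch of how the stats listed for each run (frames captured, FPS Avg\Max\Min, Frame time Avg) could be derived from a log of per-frame render times. The function name `summarize` and the sample numbers are made up for illustration.

```python
from statistics import mean


def summarize(frame_times_ms):
    """Summarize a capture given each frame's render time in milliseconds."""
    if not frame_times_ms:
        raise ValueError("no frames captured")
    duration_s = sum(frame_times_ms) / 1000.0
    return {
        "frames": len(frame_times_ms),
        "duration_s": duration_s,
        # Average FPS is total frames over total time,
        # not the mean of the per-frame FPS values.
        "fps_avg": len(frame_times_ms) / duration_s,
        "fps_max": 1000.0 / min(frame_times_ms),
        "fps_min": 1000.0 / max(frame_times_ms),
        "frame_time_avg_ms": mean(frame_times_ms),
    }


# Made-up sample, not data from the review.
sample = [10.0, 10.0, 20.0, 40.0, 20.0]
stats = summarize(sample)
for name, value in stats.items():
    print(f"{name}: {value:.2f}")
```

Computing the same numbers from more than one capture source and comparing them would be one way to cross-check a run, which seems to be the spirit of using several methods.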

These tests should give those who have similar high-end setups a good comparison between stock and overclocked CPU performance. For those who don't have a similar setup, these benchmarks will let you see if the X5660 is worth the upgrade. Some games are more CPU dependent than others. I'm only running reference GTX 670 2GB cards in 2-way SLI. I'm hoping to upgrade to a Quad SLI\CrossFireX setup on my X58 platform; I just have to choose between Nvidia and AMD. In the meantime, this is how the X58 is running in 2014+ with some of the latest and greatest high-end games available.

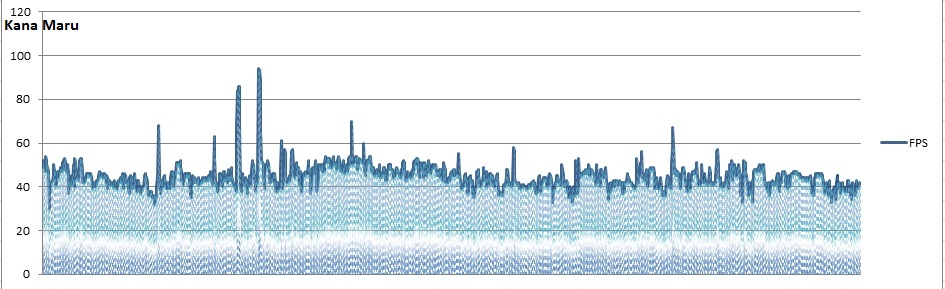

Middle-earth: Shadow of Mordor [Very High + Medium Texture Quality] – 2560x1600p

- Note: Unofficial SLI Support -

GTX 670 2GB 2-Way SLI @ 988Mhz [Boost: 1228Mhz] [344.11 WHQL Drivers]

RAM: DDR3-1600Mhz

Gameplay Duration: 41 minutes 17 seconds

Captured 393,643 frames

FPS Avg: 41fps

FPS Max: 97fps

FPS Min: 15fps

FPS Min Caliber ™: 29fps

Frame time Avg: 22.5ms

-FPS Min Caliber?-

You’ll notice that I added something named “FPS Min Caliber”, if anyone even looks at these charts nowadays. FPS Min Caliber is something I came up with to set it apart from the absolute FPS minimum, which could simply be a data loading point during gameplay. The FPS Min Caliber ™ is my way of letting you know the lowest FPS average you’ll see during gameplay. The minimum fps [FPS Min] can be very misleading. I plan to continue using this in the future as well.
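The exact formula behind FPS Min Caliber isn't given, so the sketch below is only one possible reading of "lowest FPS average": count frames per second of gameplay, then take the lowest average over a short sliding window. The function names, the 3-second window, and the sample capture are all assumptions for illustration, not the method used for the charts.

```python
def per_second_fps(frame_times_ms):
    """Count how many frames started in each whole second of the capture."""
    counts = []
    elapsed_ms = 0.0
    for frame_time in frame_times_ms:
        second = int(elapsed_ms // 1000)
        while len(counts) <= second:
            counts.append(0)
        counts[second] += 1
        elapsed_ms += frame_time
    return counts


def sustained_min_fps(frame_times_ms, window_s=3):
    """Lowest average FPS over any window of `window_s` consecutive seconds."""
    fps = per_second_fps(frame_times_ms)
    if not fps:
        raise ValueError("no frames captured")
    if len(fps) < window_s:
        return sum(fps) / len(fps)
    return min(
        sum(fps[i:i + window_s]) / window_s
        for i in range(len(fps) - window_s + 1)
    )


# Made-up capture: 2s at 40fps, one 500ms loading stall,
# 2.5s at 20fps, then 2s at 40fps again.
sample = [25.0] * 80 + [500.0] + [50.0] * 50 + [25.0] * 80

absolute_min = 1000.0 / max(sample)      # dominated by the single stall
sustained_min = sustained_min_fps(sample)
print(f"FPS Min (absolute): {absolute_min:.1f}")
print(f"Sustained minimum:  {sustained_min:.1f}")
```

In this made-up capture the single stall drags the absolute minimum far below anything felt during play, while the windowed figure reflects the slow stretch that actually lasted a few seconds.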

Now to the results: this is much better in terms of playability. There was no button lag or stuttering and the game was smooth. Very High + Medium Texture Quality still looks amazing. No complaints from a 2GB GPU user. Also remember that this game doesn’t officially support SLI just yet, but I have managed to get it working on my PC.

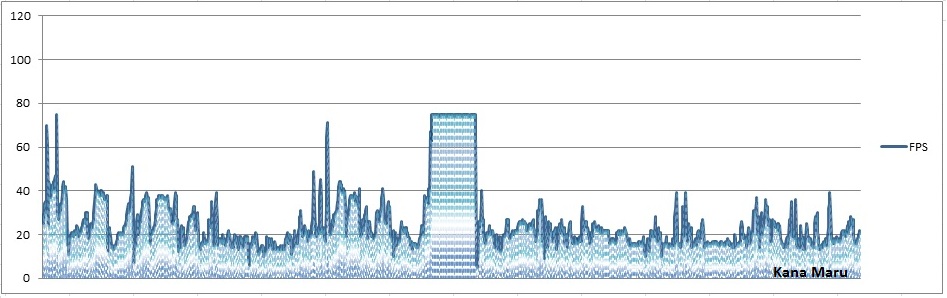

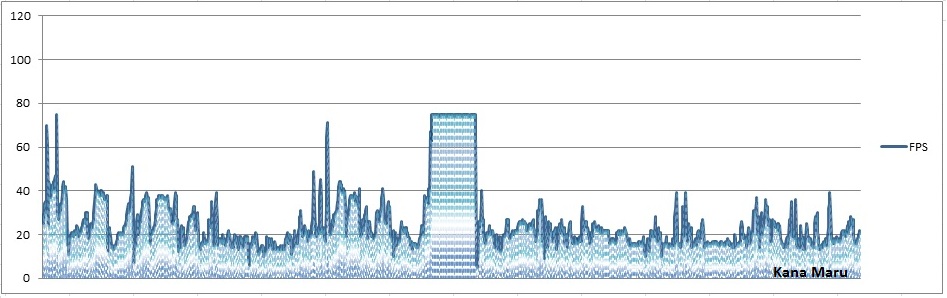

Middle-earth: Shadow of Mordor [Ultra Settings + HD Texture Pack] – 2560x1440p

- Note: Unofficial SLI Support -

GTX 670 2GB 2-Way SLI @ 988Mhz [Boost: 1241Mhz] [344.11 WHQL Drivers]

RAM: DDR3-1600Mhz

Gameplay Duration: 33 minutes 29 seconds

Captured 49,095 frames

FPS Avg: 25fps [24.58fps]

FPS Max: 65fps

FPS Min: 6fps

FPS Min Caliber ™: 12fps

Frame time Avg: 40.5ms

To run this game with the HD Texture Pack you need at least a 6GB GPU. Obviously I have 2GB GPUs, but I ran the test anyway. SLI isn’t currently supported in this game either, though I did find a way to get it working with both GTX 670 cards. As you can see above, the cards just can’t handle this game at 1440p + Ultra. I’ll run another test at 1080p, but since the texture pack requires a 6GB card I’m not sure how much I’ll gain.

The Ultra settings won’t help my cause much either. GTX 670 users will need to stick to “Medium” Texture Quality and “Very High” Graphics Quality settings. The game still looks really good regardless. As far as Ultra Settings & HD Content goes, it’s playable, but the experience is pretty bad. There’s plenty of button lag, random micro lag and stutter, and the random low FPS doesn’t help either. A 40.5ms average frame time is simply unforgiving. 2GB users should stick with Very High and Medium Texture settings for sure.

Watch Dogs [Ultra Settings + E3 Graphic Mods] – 1920x1080p

MSAA x2

GTX 670 2GB 2-Way SLI @ 988Mhz [Boost: 1228Mhz] [337.88 BETA Drivers]

RAM: DDR3-1600Mhz

Gameplay Duration: 14 minutes 5 seconds

Captured 90,355 frames

FPS Avg: 68fps

FPS Max: 131fps

FPS Min: 21fps

Frame time Avg: 9.30ms

Watch Dogs [Ultra Settings + E3 Graphic Mods] – 2560x1440p

MSAA x2

GTX 670 2GB 2-Way SLI @ 988Mhz [337.88 BETA Drivers]

RAM: DDR3-1600Mhz

Gameplay Duration: 22 minutes 59 seconds

Captured 55,939 frames

FPS Avg: 41fps

FPS Max: 55fps

FPS Min: 9fps

Frame time Avg: 24.7ms

Tomb Raider [Ultra] - 3500x1800p

Stock GTX 670 2GB 2-Way SLI @ 988Mhz [337.50 BETA Drivers]

RAM: DDR3-1600Mhz

CPU Average: 35c

CPU Max: 45c

Ambient Temp: 25.5c

CPU Usage Avg: 4.9%

CPU Usage Max: 28.4%

Gameplay Duration: 15 minutes 21 seconds

Captured 49,318 frames

FPS Avg: 54fps [53.51fps]

FPS Max: 70fps

FPS Min: 20fps

Frame time Avg: 18.7ms

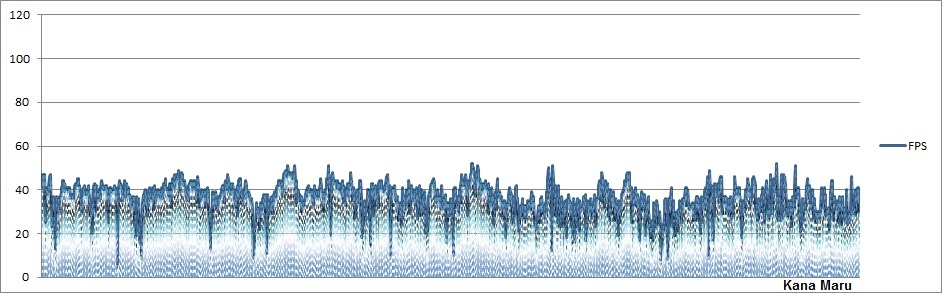

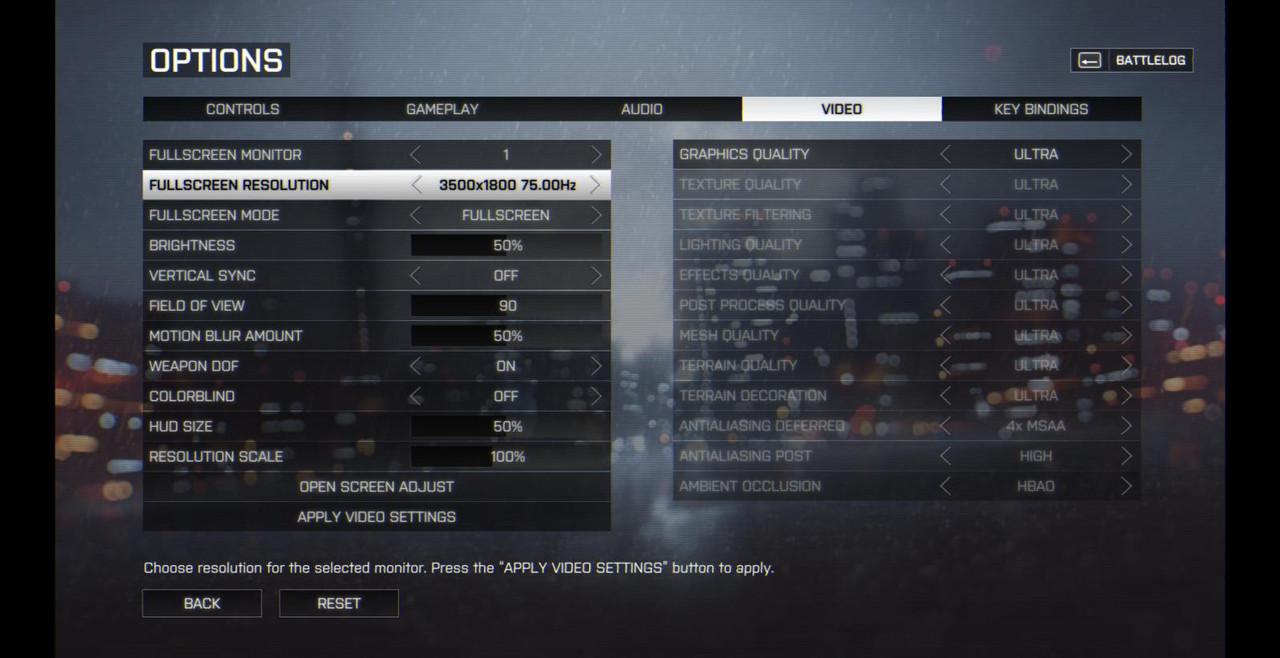

Battlefield 4 100% Maxed [Ultra] - 3500x1800p

Stock GTX 670 2GB 2-Way SLI @ 988Mhz [Boost: 1228Mhz] [337.50 BETA Drivers]

RAM: DDR3-1600Mhz

CPU Average: 48c

CPU Max: 59c

Ambient Temp: 24c

CPU Usage Avg: 17%

CPU Usage Max: 49%

Gameplay Duration: 10 minutes 36 seconds

Captured 17,241 frames

FPS Avg: 43fps [42.97fps]

FPS Max: 116fps

FPS Min: 7fps

Frame time Avg: 22ms

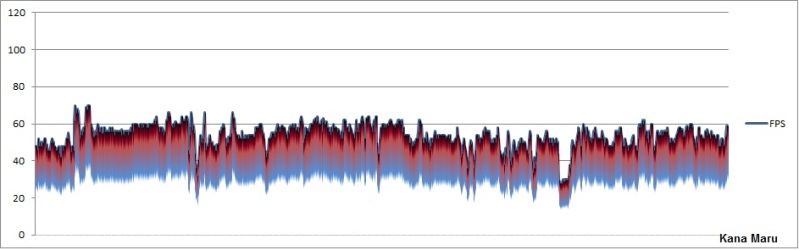

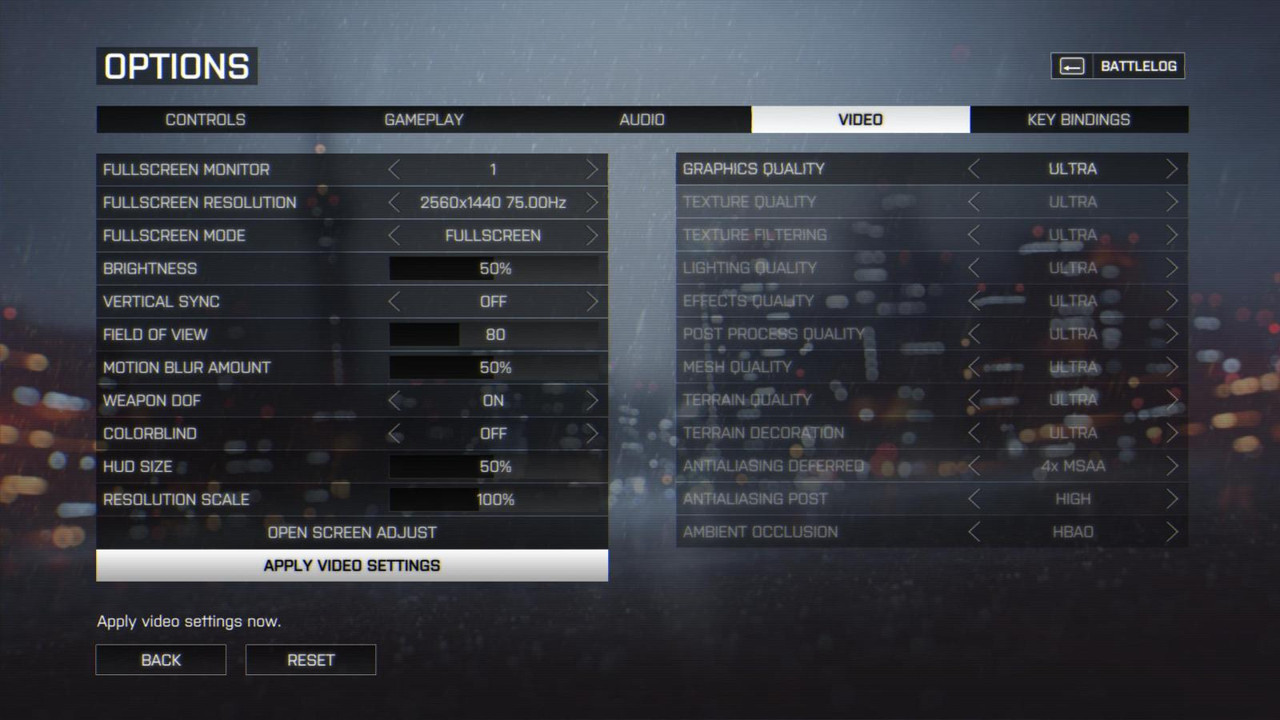

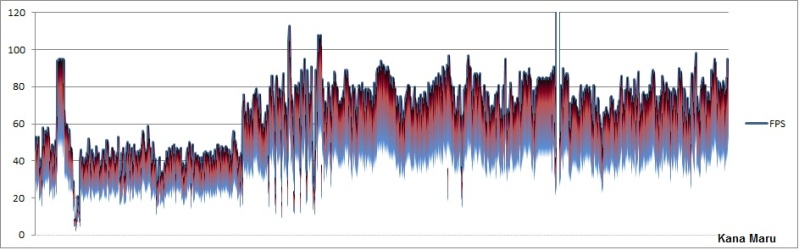

Battlefield 4 100% Maxed [Ultra] - 2560x1440p

Stock GTX 670 2GB 2-Way SLI @ 915Mhz [Boost: 1221Mhz] [337.50 BETA Drivers]

RAM: DDR3-1600Mhz

CPU Average: 44c

CPU Max: 57c

Ambient Temp: 22c

Gameplay Duration: 34 minutes 46 seconds

Captured 141,055 frames

FPS Avg: 69fps

FPS Max: 147fps

FPS Min: 32fps

Frame time Avg: 14.5ms

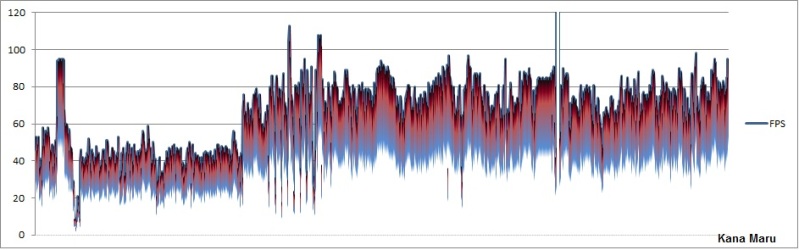

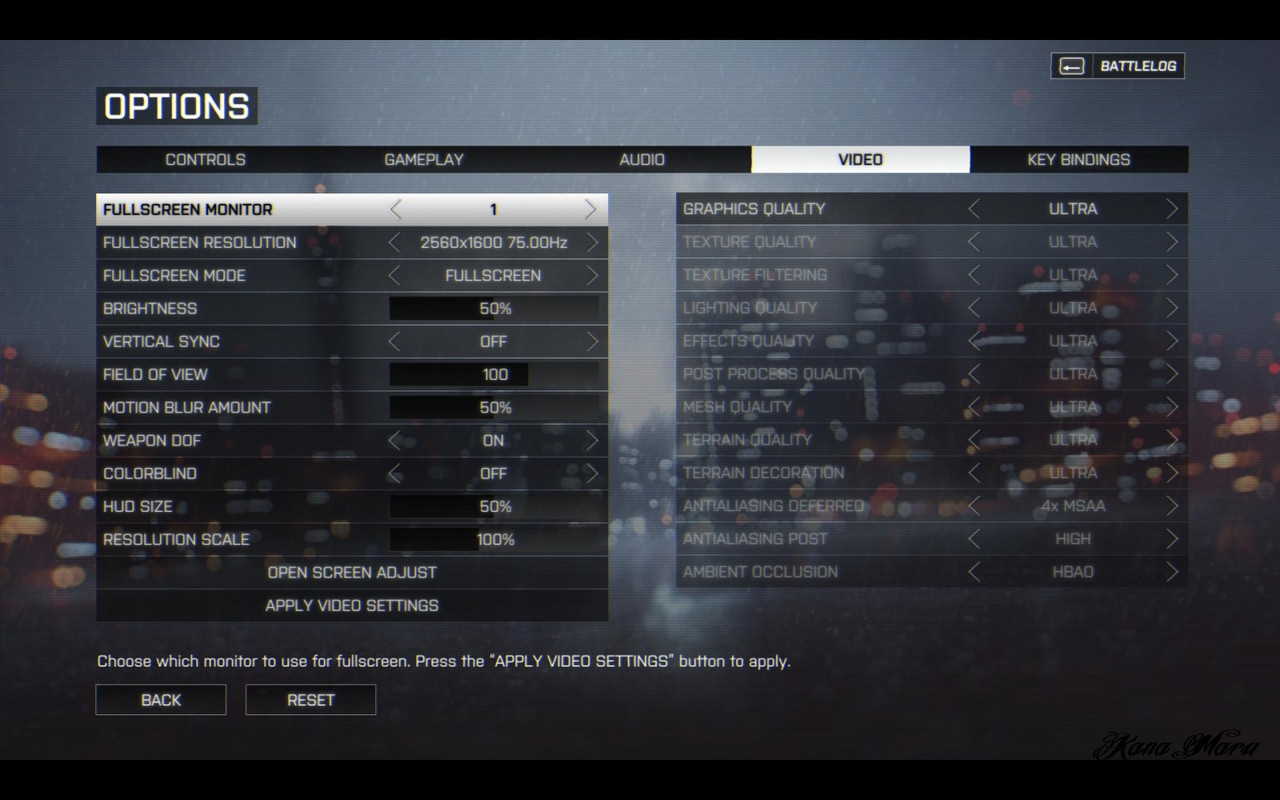

Battlefield 4 100% Maxed [Ultra] - 2560x1600p

Stock GTX 670 2GB 2-Way SLI @ 915Mhz [Boost: 1221Mhz] [337.50 BETA Drivers]

RAM: DDR3-1600Mhz

Gameplay Duration: 1 hour 3 minutes 58 seconds

Captured 235,043 frames

FPS Avg: 62fps [62.25fps]

FPS Max: 139fps

FPS Min: 30fps

Frame time Avg: 15.2ms

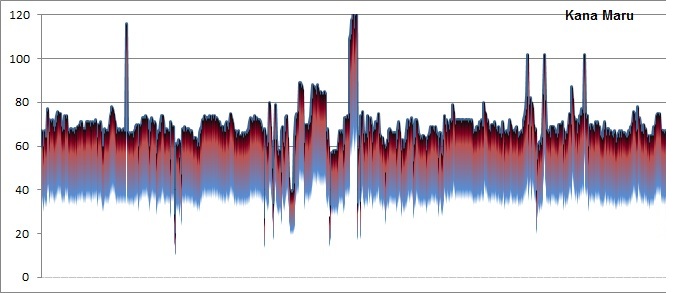

Thief @ 1920x1080 - Very High [100% Maxed] - Stock CPU

Stock GTX 670 2GB 2-Way SLI @ 966Mhz [Boost: 1228Mhz]

X5660 @ 3.2Ghz

RAM: DDR3-1333Mhz

Gameplay Duration: 25 minutes 36 seconds

117,328 Frames Captured

FPS Avg: 76fps

FPS Max: 97fps

FPS Min: 19fps

Frame time Avg: 13.1ms

I’ve been so busy with the new RAM that I haven’t had time to post my Thief Very High results. Running at stock speed gave me some pretty good results. There was minor stuttering during loading, but nothing major. The game was smooth and I had no issues at all. This was expected since the game uses Unreal Engine 3. While the engine is 10 years old, it still looks fantastic. The Xeon handled this game well at stock speeds, but this isn’t its full potential.

Thief - Overclocked Xeon

Thief @ 1920x1080 - Very High [100% Maxed]

Stock GTX 670 2GB 2-Way SLI @ 966Mhz [Boost: 1228Mhz]

X5660 @ 4.6Ghz

RAM: DDR3-1600Mhz

Gameplay Duration: 22 minutes 19 seconds

145,111 Frames Captured

FPS Avg: 108fps

FPS Max: 116fps

FPS Min: 25fps

Frame time Avg: 9.22ms

My overclocked Xeon still proves that hexa-cores are great for gaming. I improved in just about every stat I recorded. This time around I didn’t capture the CPU temp or the CPU usage. I gained 32 frames per second on average and cut my average frame time by 3.88ms. This game is looking very nice. I can’t wait to see what Unreal Engine 4 will bring regarding graphics. Ignore the fps readings that go off the chart; those high FPS readings are from the loading screen.

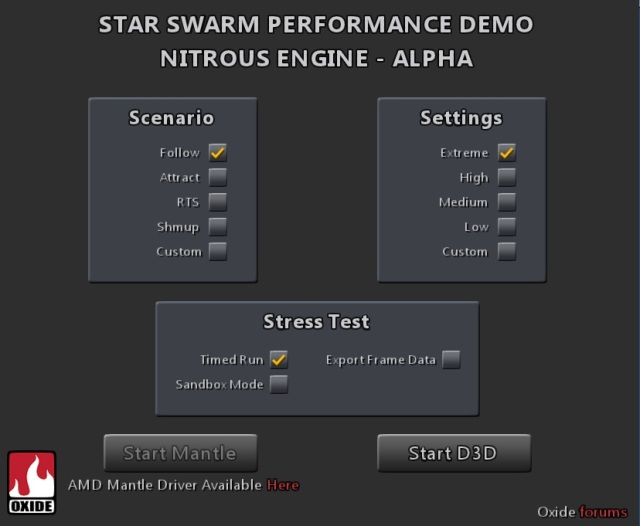

Star Swarm Stress Test [Benchmark Tool v1.0] – Extreme - Attract @ 1920 x 1080

Stock GTX 670 2GB 2-Way SLI @ 966Mhz[Boost: 1241Mhz]

X5660 @ 3.2Ghz

RAM: DDR3-1333Mhz

CPU Avg: 17%

CPU Max: 41%

CPU Temp Avg: 29c

CPU Temp Max: 32c

Room Ambient: 21c

Gameplay Duration: 6 minutes

7494 Frames Captured

FPS Avg: 20fps

FPS Max: N/A [Benchmark Tool]

FPS Min: 3fps

Frame time Avg: N/A [Benchmark Tool]

Star Swarm Stress Test [Benchmark Tool v1.0] – Extreme - Follow @ 1920 x 1080

Stock GTX 670 2GB 2-Way SLI @ 966Mhz[Boost: 1241Mhz]

X5660 @ 3.2Ghz

RAM: DDR3-1333Mhz

CPU Avg: 15%

CPU Max: 32%

CPU Temp Avg: 29c

CPU Temp Max: 33c

Room Ambient: 21c

Gameplay Duration: 6 minutes

9296 Frames Captured

FPS Avg: 26fps

FPS Max: N/A [Benchmark Tool]

FPS Min: 3.5fps

Frame time Avg: N/A [Benchmark Tool]

Star Swarm Stress Test [Real Time Benchmarks] – Extreme - Follow @ 1920 x 1080

Stock GTX 670 2GB 2-Way SLI @ 966Mhz[Boost: 1241Mhz]

X5660 @ 4.6Ghz

RAM: DDR3-1600Mhz

CPU Avg: 12%

CPU Max: 25%

CPU Temp Avg: 46c

CPU Temp Max: 51c

Room Ambient: 21c

Gameplay Duration: 6 minutes

13905 Frames Captured

FPS Avg: 39fps

FPS Max: 124fps

FPS Min: 5fps

Frame time Avg: 25.9ms

Alright, so I’ve run more tests for those wondering if the hex cores are worth it for gaming. In this test I’ve overclocked my GTX 670 2GB 2-Way SLI to 966Mhz, which boosts up to 1241Mhz, to take on the Star Swarm Stress Test, which was recently updated on Steam. So it’s a pretty decent GPU overclock. I’m comparing my X5660 @ 3.2Ghz + DDR3-1333Mhz against 4.6Ghz + DDR3-1600Mhz. The first two tests were run using only the Benchmark Tool\Stress Test, without my personal tests. However, I did perform my Real Time Benchmarks along with the Star Swarm Benchmark Tool in the last test [4.6Ghz-1600Mhz].

3.2Ghz DDR3-1333Mhz Results:

The X5660 @ 3.2Ghz & overclocked GTX 670s with the Extreme preset appears to be playable. When a lot is happening on screen, though, the frame rate drops sharply. You can be at 30-40fps and the next second you are rapidly dropping to 10fps, or even worse, 3fps. It seemed like a slow motion scene in the Matrix. I was only using the benchmark tool, so I have no frame time data, but I’m guessing the frame times were near the 180ms-200ms mark. This could simply be down to the GTX 670’s 2GB limitation and specs. This benchmark is definitely demanding and should bring most cards to their knees.

4.6Ghz DDR3-1600Mhz Results:

Now, to lift any bottlenecking I’ve OC’d my CPU. Anyone running a hex-core at 3.8Ghz or higher should have no major bottlenecks. I run my CPU overclocked to 4.6Ghz because it only requires 1.36 vCore, which is as safe as it gets with higher overclocks. With all of that being said, this Benchmark Tool does rely on a decently clocked Intel CPU or AMD’s APU to even it up with better results.

I’m comparing the Extreme - Follow @ 1920x1080 results. If you look at the comparisons above you’ll see some pretty interesting results. The most obvious is that the Extreme - Follow @ 1920x1080 preset showed an increase of 13fps, from 26fps [3.2Ghz] to 39fps [4.6Ghz]. That’s a 50% increase, which is really good. The frames per second aren’t the only tell-tale. If you take a look at the frames that were actually captured, you’ll see an increase of around 50% as well. The CPU can keep up with the GPU, which allows more frames to be captured during the benchmark, and that means a more pleasant experience while playing. The average frame time was very good as well [25.9ms].

The reference GTX 670 2GB still suffered from extremely high frame times. My highest was 140ms, which knocked my frame rate down to 5fps. So as I said above, anyone running a hex-core at 3.8Ghz or higher shouldn’t really have any bottleneck issues. With that being said, this benchmark is rough on graphics cards. I personally prefer to post results from actual gameplay. However, this will do for now. Feel free to compare your GPU scores and CPU speed.
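As a quick sanity check on the Star Swarm numbers, the frames captured and the 6 minute run length listed above can be turned back into an average frame rate and a percentage gain. This is just a small sketch of the arithmetic; the helper names `avg_fps` and `pct_increase` are made up here.

```python
def avg_fps(frames_captured, duration_s):
    """Average frame rate implied by a frame count and a run length."""
    return frames_captured / duration_s


def pct_increase(old, new):
    """Percentage change going from `old` to `new`."""
    return (new - old) / old * 100.0


DURATION_S = 6 * 60      # both Extreme - Follow runs lasted 6 minutes
frames_stock = 9296      # X5660 @ 3.2Ghz, DDR3-1333Mhz
frames_oc = 13905        # X5660 @ 4.6Ghz, DDR3-1600Mhz

fps_stock = avg_fps(frames_stock, DURATION_S)
fps_oc = avg_fps(frames_oc, DURATION_S)

print(f"Stock: {fps_stock:.1f}fps from {frames_stock} frames")
print(f"OC:    {fps_oc:.1f}fps from {frames_oc} frames")
print(f"Reported FPS gain (26 -> 39): {pct_increase(26, 39):.1f}%")
print(f"Frames captured gain:         {pct_increase(frames_stock, frames_oc):.1f}%")
```

The frame counts round to the same 26fps and 39fps shown in the results, so the two ways of measuring the gain agree with each other.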

Total War: Rome II

Prologue - The Siege of Capua @1920 x 1080:

Stock GTX 670 2GB 2-Way SLI @ 915Mhz [Boost: 1228Mhz]

X5660 @ 3.2Ghz

RAM: DDR3-1333Mhz

CPU Avg: 28%

CPU Max: 54%

Gameplay Duration: 19 minutes 46secs

23,885 Frames Captured

FPS Avg: 20fps

FPS Max: 55fps

FPS Min: 10fps

Frame time Avg: 49.6ms

I added the CPU usage info for anyone who wants to know the difference between stock and overclocked usage. It was pretty late when I performed this benchmark, so I forgot to log the CPU temperatures.

In the past I found that most games don’t rely heavily on the CPU. Recent high-end games like BF3, for example, can run fine without massive CPU overclocks, as I’ve posted. Sometimes the gains aren’t worth the power and CPU voltage. Well, that isn’t the case with Total War: Rome II. This game depends on the CPU a lot. A decently clocked CPU can make your experience pleasant. If your CPU is slow, things won’t go so smoothly for your eyes or your key input. As you can see from my benchmark above, I didn’t have the best experience.

My Xeon is clocked @ 3.2Ghz, which then down clocks to 3.0Ghz once two cores are in use. Although I played through the Prologue with what were minor issues for my taste [or maybe that was just hype for the game], some things can’t be ignored: random stutter, random input lag, low FPS, and difficulty with the controls due to micro-stutter-like hitching. What makes the “Prologue” different from the “Campaign” is that there is no overworld menu. The game places you directly in a huge battle with hundreds, if not thousands, of soldiers on screen at any given time. The level is pretty large as well. Not to mention that I’m playing the game 100% MAXED, as I showed in the picture above. The game is gorgeous, but the gameplay wasn’t the best. 20fps isn’t that bad when everything is moving at a steady pace. However, once a lot is happening on screen you can expect anywhere from 17-27fps. The frame time was all over the place and was spiking rapidly during my benchmark test. My two highest spikes were 88.8ms and 104ms! That is unacceptable; however, spikes that high only happened twice. The experience was passable, but far from decent. Also remember that I’m running reference 2GB GTX 670s for testing to give a fair CPU comparison. Read below for my overclocked settings.

Prologue - The Siege of Capua @1920 x 1080: Overclock 4.6Ghz+1600Mhz RAM

Stock GTX 670 2GB 2-Way SLI @ 915Mhz [Boost: 1228Mhz]

X5660 @ 4.6Ghz

RAM: DDR3-1600Mhz

CPU Usage Avg: 27%

CPU Usage Max: 38%

CPU Temp Avg: 49C

CPU Temp Max: 54C

Room Ambient: Approx. 22C

Gameplay Duration: 17 minutes 29secs

35,112 Frames Captured

FPS Avg: 33fps

FPS Max: 77fps

FPS Min: 18fps

Frame time Avg: 29.9ms

Now that I’ve overclocked my CPU to 4.6Ghz and increased my DRAM to 1600Mhz, everything is much better. I gained 13 much-needed frames per second. To make things even better, the average frame time dropped 19.7ms, from 49.6ms to 29.9ms, which is very good. The experience was much better. I instantly noticed the increase in fps and the smoother gameplay. There was also no input delay. Everything was smooth and the 33fps appears to be constant. The frame rate usually stayed above 40fps throughout the benchmark test.

There was no rapid spiking in the frame rates or frame times during the benchmark. In the last test my frame time spiked to ridiculous numbers, over 100ms. Although that only happened once, my overclocked settings were obviously much better. My two highest frame times were 55.8ms and 74.7ms. What matters most is the average fps and frame time overall. The Prologue was very enjoyable. I had no slowdowns, just steady frame times and frame rates. In my next test I’m going to use my 4.2Ghz OC settings instead of 4.6Ghz to see if there’s a big difference between the two. 4.2Ghz will be easy for most overclockers to hit. 100% MAXED in a massive game like this is fine with me. I could always downgrade the graphics to Ultra instead of Extreme. However, I’m sticking with the Extreme + 100% max settings.
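For anyone wondering how the frame time and fps figures in the two Prologue runs relate, frame time in milliseconds is just 1000 divided by the frame rate, and the other way around. The short sketch below only illustrates that conversion with the Prologue averages; the helper names are made up, and the small gaps between the converted and reported values presumably come from rounding in the logs.

```python
def frame_time_ms(fps):
    """Average time per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def fps_from_frame_time(ms):
    """Frame rate implied by an average frame time in milliseconds."""
    return 1000.0 / ms


# Reported averages for the Prologue: (FPS Avg, Frame time Avg in ms)
stock = (20, 49.6)   # X5660 @ 3.2Ghz, DDR3-1333Mhz
oc = (33, 29.9)      # X5660 @ 4.6Ghz, DDR3-1600Mhz

for label, (fps, ms) in (("Stock", stock), ("OC", oc)):
    print(f"{label}: {fps}fps -> {frame_time_ms(fps):.1f}ms, "
          f"{ms}ms -> {fps_from_frame_time(ms):.1f}fps")

print(f"Frame time drop: {stock[1] - oc[1]:.1f}ms")
```

A 13fps gain at these low frame rates removes almost 20ms per frame, which is why the overclocked run feels so much smoother than the raw fps number suggests.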

Campaign Mode @1920 x 1080:

Campaign – First 2 hours @1920 x 1080:

Stock GTX 670 2GB 2-Way SLI @ 915Mhz [Boost: 1228Mhz]

X5660 @ 3.2Ghz

RAM: DDR3-1333Mhz

CPU Avg: 24%

CPU Max: 100%

CPU Temp Avg: 30c

CPU Temp Max: 37c

Ambient Temp: 19C

Gameplay Duration: 1 hour 57 secs

136,535 Frames Captured

FPS Avg: 37fps

FPS Max: 80fps

FPS Min: 13fps

Frame time Avg: 26.8ms

I figured that I’d benchmark this game as I played it. I really like this game. It’s pretty deep, like other RTS games. Despite all of the fun I had playing, there were some major issues. Before I jump into them I need to distinguish the Prologue from the Campaign. The Campaign is the “story”. The Prologue obviously leads into the Campaign\story, but what makes it different is that the Prologue has no overworld map. The Prologue places you directly in battle, while the Campaign lets you control everything through different menus. The reason I’m explaining this is that the frame rate is up 17fps in the Campaign [37fps] compared with 20fps in the Prologue. The Campaign doesn't focus solely on decisive battles. However, the overworld view is pretty demanding. Scrolling fast across the map caused my frame rate to drop extremely low [13 - 19fps]. The drop was sharp and unexpected as well. There was a bit of stutter while playing, which ultimately leads to input delay.

Overall the game played much better than the Prologue. This was due to the areas being much smaller and having fewer enemies on screen, so battles ran a lot better. The average frame times were a lot better too, but that could not prevent the micro stutter on the overworld map. The CPU usage average was low, but the CPU obviously wasn’t moving data quickly enough. CPU usage actually hit 100% during my playthrough. This could be an error, or it could have happened while the overworld map was stuttering. That’s definitely not good, but overall the game was decent and very playable. There was some stutter that I could not ignore on the overworld map.

Campaign – 1 hour 41 minutes @1920 x 1080: Overclock 4.6Ghz+1600Mhz RAM

Stock GTX 670 2GB 2-Way SLI @ 915Mhz [Boost: 1228Mhz]

X5660 @ 4.6Ghz

RAM: DDR3-1600Mhz

CPU Usage Avg: 22%

CPU Usage Max: 37%

CPU Temp Avg: 45c

CPU Temp Max: 60c

Ambient Temp: 22c

Gameplay Duration: 1 hour 10 mins

189,477 Frames Captured

FPS Avg: 47fps

FPS Max: 89fps

FPS Min: 19fps

Frame time Avg: 21.4ms

I gained 10fps after overclocking the CPU and RAM. The frame time was much better as well. Overall the gameplay was much better. 4.2Ghz will get you 42fps. 4.6Ghz does make a difference, but 4Ghz-4.2Ghz will be fine for playing this game if you have a GTX 670 or anything near the 2GB reference specs.

Hopefully this helps those X58 users who are still wondering if the X5660 or L5639 will be worth it for gaming. Well, the answer is yes, it will be.

Crysis 3:

The Real Time Benchmarks are games that I have played while capturing real time data. Instead of relying on the benchmark tools found in a lot of games nowadays, I play through the levels looking for micro stutter and\or delays. The L5639 handled maxed-out high-end games like Crysis 3, Tomb Raider and Metro: LL. Let's see how the X5660 handles Crysis 3.

Welcome to the Jungle - 1920x1080p

CPU Max Temp: 58c

Stock GTX 670 2-Way SLI @ 915Mhz [Boost: 1228Mhz]

GPU 1: 83c

GPU 2: 71c

Those temps were pretty much steady throughout the benchmark and gameplay. Due to heat concerns I had to run the GPU cores @ stock\915Mhz.

FPS:

Avg: 53

Max: 136

Min: 18

As you can see, not much has changed in this category from the L5639 review. I actually gained 3 frames per second and the frame rate was well above 60fps throughout the level. I’m guessing it’s safe to say that 4Ghz-4.2Ghz will be fine for high-end gaming with the hex cores. I personally run at 4.6Ghz for high-end games. With no more CPU bottlenecking I’ve finally hit the max on my graphics cards. I was only getting 25fps to 35fps with my […]. Two extra cores make a huge difference. The L5639 and X5660 are fine for high-end gaming.

Average Frame time: 19ms

My frame times were slightly better. The game played fine on both CPUs. The higher-clocked X5660 gave it an edge over the L5639. However, I’m sure that if both CPUs were clocked at the same speed the difference wouldn’t matter, just as it doesn’t now. So i7-920 to i7-960 users, and pretty much all Bloomfield users, will see a tremendous upgrade. Gaming-wise there is no comparison.

Battlefield 3

BF3 is one of the most gorgeous FPS games I’ve played. There are a lot of great looking games. EA isn’t my favorite company, but they definitely invest in their studios. This game was requested for benchmarking, and I have to thank PontiacGTX for allowing me to benchmark it on Origin. It took me 2 hours to download 20.3GB of data, and it was worth it. I ran the benchmarks at 1600p, 1080p and 720p. There are way too many charts to post, so I’m not going to post them.

All benchmarks were tested with Max Settings. My GPUs were at stock settings.

GTX 670 2GB 2-Way SLI @ 915Mhz.[Boost: 1228Mhz]

While playing @ 1600p

X5660 @ 4.6Ghz

CPU: Max: 52c

GPU 1: Avg. 70c - Max: 76c

GPU 2: Avg. 65c - Max: 72c

Semper Fidelis [Campaign] @2560 x 1600p:

Gameplay Duration: 3 minutes 21 secs

Captured 14,690 frames

FPS Avg: 73fps

FPS Max: 110fps

FPS Min: 30fps

Frame time Avg: 13.7ms

The game plays great at 1600p. No micro stuttering at all. Input response and everything else was smooth. My highest frame time was 33.0ms, which is no problem at all to me. The average was 13.7ms, which is great. The game is still gorgeous and will be for a very long time. This level was short, so make your judgment from the other benchmarks.

Operation Swordbreaker [Campaign] @ 2560 x 1600p:

Gameplay Duration: 26 minutes 25 secs

Captured 123,237 frames

FPS Avg: 78fps

FPS Max: 120fps

FPS Min: 34fps

Frame time Avg: 12.9ms

Caspian Border [Multiplayer – Conquest 32v32] @ 2560 x 1600p:

Gameplay Duration: 23 minutes 29 secs

Captured 95,475 frames

FPS Avg: 66fps

FPS Max: 106fps

FPS Min: 34fps

Frame time Avg: 15.2ms

Operation Metro [Multiplayer – Conquest 32v32] @ 2560 x 1600p:

Gameplay Duration: 13 minutes 1 secs

Captured 58,831 frames

FPS Avg: 75fps

FPS Max: 112fps

FPS Min: 41fps

Frame time Avg: 13.3ms

Noshahr Canals [Multiplayer TDM 32v32] @ 1920x1080p:

Gameplay Duration: 21 minutes 49 secs

Captured 185,190 frames

FPS Avg: 141fps

FPS Max: 201fps

FPS Min: 76fps

Frame time Avg: 7.07ms

Operation Metro [Multiplayer – Conquest 32v32] @ 1280x720:

Gameplay Duration: 25 minutes 50 secs

Captured 265,517 frames

FPS Avg: 171fps

FPS Max: 224fps

FPS Min: 90fps

Frame time Avg: 5.86ms

Now as you can see, the GTX 670 2GB SLI manhandles the Frostbite 2 engine. I’ll probably get around to testing the Frostbite 3 engine if I ever get my hands on the game. I will be posting more Real Time Benchmarks soon.

----

I found something interesting while playing BF3 @ stock clocks with DDR3-1333Mhz.

Operation Swordbreaker [Campaign] @ 2560 x 1600p

[Stock Clocks+ DDR3-1333Mhz]:

CPU: Max: 36C

Gameplay Duration: 22 minutes 57 secs

100,692 frames captured

FPS Avg: 73fps

FPS Max: 115fps

FPS Min: 42fps

Frame time Avg: 13.7ms

Running with stock CPU settings (3.0Ghz [x23] - 3.2Ghz [x24] + DDR3-1333Mhz), there are only minor differences compared with running my PC @ 4.6Ghz + 1600Mhz. From my stock test it appears that most games won’t require a 3.8Ghz+ overclock in order to enjoy them at ultra-high resolutions [16:10 - 2560x1600p]. I had no micro stutter or issues at all. The game played like a champ at 2560x1600p with 100% maxed-out Ultra settings. The X5660 continues to impress me. I only lost approximately 5fps compared with the 4.6Ghz + DDR3-1600Mhz overclock. The raw numbers don’t lie. My average frame time only increased 0.8ms, which is no problem at all. My CPU max was only 36C and the room ambient temp was 24C. This is very good to know, and I will continue to test stock clocks vs overclocked settings during my real time gaming benchmarks. Not for every benchmark, but for high-end games like Crysis 3 100% maxed @ 1080p. I have yet to play multiplayer with stock settings. There isn't a huge difference in the Campaign thus far.

GTA: IV

The Cousins Bellic + It's Your Call Missions @2560x1600p:

Stock GTX 670 2GB 2-Way SLI @ 915Mhz [Boost: 1228Mhz]

X5660 @ 3.2Ghz

RAM: DDR3-1333Mhz

Gameplay Duration: 27 minutes 4 secs

Captured 78,263 frames

FPS Avg: 48fps

FPS Max: 72fps

FPS Min: 30fps

Frame time Avg: 20.8ms

Using the latest high-end mods makes GTA IV look fantastic. I’m running the game maxed out + mods. I’m also running all of the unrestricted command lines. The frame rate is decent and the frame time was great. The game was a little choppy at first, but got better as I continued to play, and it was very playable. It all depends on the area. Some areas will be a bit choppy, with some input delay. Other areas are perfectly fine. The fps weren’t as steady as I would personally like, but that isn’t a bad thing. I did not use the V-sync option, but I’m sure it would help. The CPU usage average was 30%. However, this all changed when I overclocked my CPU [read below].

Three's a Crowd + First Date Missions @ 2560x1600p:

Stock GTX 670 2GB 2-Way SLI @ 915Mhz [Boost: 1228Mhz]

RAM: DDR3-1600Mhz

Gameplay Duration: 23 minutes 4 secs

Captured 76,908 frames

FPS Avg: 55fps

FPS Max: 94fps

FPS Min: 25fps

Frame time Avg: 18.6ms

After applying my overclock settings and increasing my RAM from 1333Mhz to 1600Mhz, GTA IV with mods is much more playable. I gained 7 frames per second and lowered my average frame time to 18.6ms. The frame rate was steady. I never benchmark with V-sync for obvious reasons, but with my overclocked X5660 I didn’t need V-sync to keep the frames steady. If I were to overclock my GPUs to boost to 1241Mhz, I’m sure I could get well over 60fps. I’m just running stock clocks to keep the heat low and to give everyone an example of stock speeds. There was no input lag and no latency issues. I believe a minor overclock to 3.8Ghz-4.2Ghz would be fine. The CPU usage average was only 15% while playing.
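To put the stock vs overclock results from this page side by side, the FPS averages reported above can be dropped into a small table. This is only a rough sketch: the labels are shortened by me, every pair changes the CPU clock and RAM speed together, and the GTA IV runs used different missions, so treat that row loosely.

```python
# (label, FPS Avg at 3.2Ghz + DDR3-1333, FPS Avg at 4.6Ghz + DDR3-1600),
# all taken from the results listed on this page.
results = [
    ("Thief 1080p", 76, 108),
    ("Star Swarm Extreme - Follow 1080p", 26, 39),
    ("Total War: Rome II Prologue 1080p", 20, 33),
    ("Total War: Rome II Campaign 1080p", 37, 47),
    ("BF3 Operation Swordbreaker 1600p", 73, 78),
    ("GTA IV 1600p (different missions)", 48, 55),
]

# Map each label to (fps gained, percentage gained over stock).
gains = {
    name: (oc - stock, (oc - stock) / stock * 100.0)
    for name, stock, oc in results
}

# Print the biggest relative gains first.
for name, (delta, pct) in sorted(
    gains.items(), key=lambda item: item[1][1], reverse=True
):
    print(f"{name:36s} +{delta:2d}fps  {pct:5.1f}%")
```

Sorted this way, the CPU-heavy titles like Total War: Rome II and Star Swarm sit at the top, while BF3 at 1600p barely moves, which lines up with the comments above about some games being more CPU dependent than others.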