Rise Of The Tomb Raider DirectX 11 & 12 Benchmarks

DirectX 12 is still in its early stages, and I decided to pick up Rise of the Tomb Raider to test the newest API. It’s not only about DX12, though: I’m a Tomb Raider fanatic. I can still remember playing the Tomb Raider games back in the 90s and 2000s. For the record, I own every Tomb Raider game released across several platforms, so I’m serious when I say that I’m a TR fan. I’m still excited about DX12 nonetheless, and I’ve run tons of DX11 and DX12 benchmark tests, along with some frame time tests. Unfortunately, we still haven’t received a “full” DirectX 12 title yet. So far we have Gears of War: UE, Hitman and now Rise of the Tomb Raider. Hitman did a great job with DX12, but there are still a few issues that need to be ironed out by the developers, Nvidia and AMD. Gears of War: UE, Hitman and TR were all built with more than one API in mind. That’s expected, since a lot of gamers still have DX11-only GPUs, although many older GPUs can run DirectX 12 to some extent.

I’m sure things will get much better once developers start focusing solely on either Vulkan or DirectX 12. We’ll take what they give us for now. You can check out my Hitman review here:

Now let’s get to the specs and benchmarks.

Gaming Rig Specs:

Motherboard:ASUS Sabertooth X58

CPU:Xeon X5660 @ 4.6Ghz

or

CPU:Xeon X5660 @ 4.8Ghz

CPU Cooler:Antec Kuhler 620 Watercooled - Pull

GPU:AMD Radeon R9 Fury X Watercooled - Push

RAM:24GB DDR3-1600Mhz [6x4GB]

or

RAM:24GB DDR3-2088Mhz [6x4GB]

SSD:Kingston Predator 600MB/s Write – 1400MB/s Read

PSU:EVGA SuperNOVA G2 1300W 80+ GOLD

Monitor:Dual 24inch 3D Ready – Resolution - 1080p, 1440p, 1600p, 4K [3840x2160] and higher.

OS:Windows 10 Pro 64-bit

GPU Drivers:

AMD R9 Fury X @ StockSettings – Core 1050Mhz

All benchmarks use SMAA for anti-aliasing, and ambient occlusion is the built-in AO unless noted otherwise.

First, I’d like to start with the fact that this is an Nvidia “The Way It’s Meant To Be Played” title. From here on out I’ll use the label “TWIMTBP”. With that being said, the game shockingly does feature some AMD tech: TR uses AMD’s “Pure Hair” technology. Pure Hair is TressFX’s big sister and it looks even better. TressFX was featured in the 2013 Tomb Raider release, and AMD’s tech is open source. The Nvidia tech available is HBAO+, which is an upgrade from the 2008 HBAO version, and, added most recently alongside the DX12 update, VXAO [Voxel-Based Ambient Occlusion]. Both HBAO+ and VXAO are closed source, locked behind Nvidia’s proprietary GameWorks. What’s weird about VXAO is that it’s only compatible with the DX11 API; Nvidia has been a little slow updating their tech to DX12. VXAO is different in two other ways as well: first, it’s only available on Nvidia GPUs, so AMD users won’t find the setting in the options; second, it’s optimized for and only available on Maxwell GPUs. Ouch. Nvidia pushes that knife deeper into the Kepler 600\700 series GPUs.

I also have a few things to get off my chest, just for the sake of being “fair”. I believe in fair treatment, so I normally mix up the settings depending on the situation. Lately, however, I’ve been seeing a trend in a lot of benchmarks across the web. Everyone knows that Pure Hair is AMD tech and HBAO+ is Nvidia tech; if you didn’t read what I’ve written above, now you know. So you would think that journalists would give gamers separate benchmark results with certain tech [HBAO+\Pure Hair] disabled. NOPE! It seems many of them just set the graphics settings to “Very High” and call it a day. There are two issues with this: the first is that Pure Hair is optimized for AMD GPUs; the second, obviously, is that HBAO+ is closed source and optimized for Nvidia GPUs.

So with those two facts out of the way, you would “really” think that journalists would give gamers more options than the standard “Very High” graphical setting. “Very High” doesn’t max the game 100%, so it’s just a preset. This did not happen, and obviously it gave AMD a bad look initially. What’s really messed up about the situation is that a journalist will acknowledge the HBAO+ hit on AMD GPUs in the comment section or in the forums, but will not make a chart in the actual review showing that AMD’s performance increases are higher than Nvidia’s when HBAO+ is disabled. AMD optimized their drivers, but it doesn’t matter if no one updates their benchmark results or tests more than one setting.

One company [Nvidia] can easily optimize their drivers, while the other [AMD] cannot optimize their drivers for closed proprietary tech [Nvidia GameWorks]. So obviously AMD is losing both battles here. Nvidia easily has access to AMD’s open-source Pure Hair, and unlike the TressFX issues in 2013, Nvidia was prepared this time. Still, AMD has been optimizing their drivers and working on TR performance with the latest Crimson 16.3 Beta Drivers. AMD has really been cranking out drivers rapidly over the past year or so.

So initially I was going to 100% max the game as I normally do and benchmark. Instead I think I’ll do something different this time: I’ll use the SAME settings several websites used by simply setting the “Graphical Settings” to “Very High”. Afterwards I’ll disable HBAO+ to show you the penalty it has on AMD GPUs. Since I only have an AMD GPU to benchmark, I’ll disable the Nvidia tech. If I had an Nvidia GPU, I would disable the AMD tech for comparison, and then run both cards with neither company’s tech enabled for a fair comparison.

DirectX 11 Benchmarks

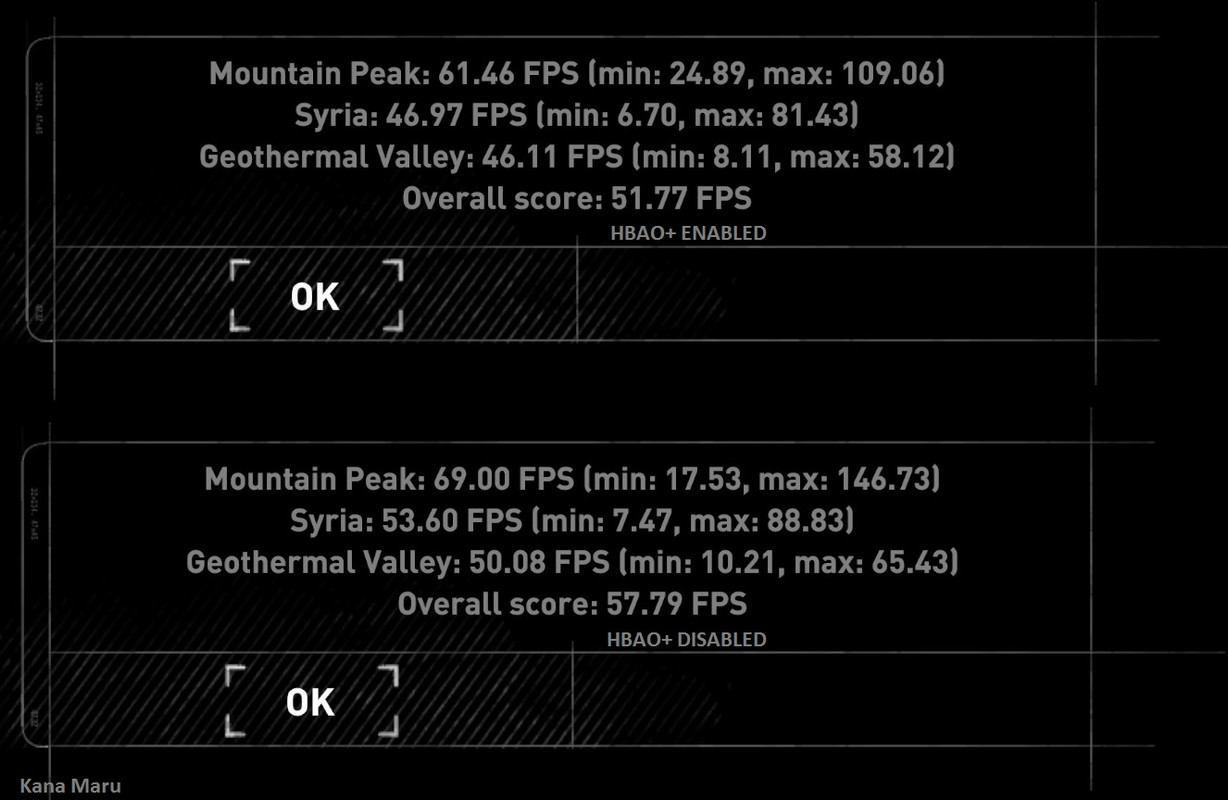

2560x1440 - Internal Benchmark

Very High preset: HBAO+ ENABLED vs. HBAO+ DISABLED (using the built-in AO)

| Scene | HBAO+ ENABLED: FPS Average | FPS Min | FPS Max | Performance Difference | HBAO+ DISABLED: FPS Average | FPS Min | FPS Max |
|---|---|---|---|---|---|---|---|
| Mountain Peak | 61.46 | 24.89 | 109.06 | 12.26% | 69.00 | 17.53 | 146.73 |
| Syria | 46.97 | 6.70 | 81.43 | 14.11% | 53.60 | 7.47 | 88.83 |
| Geothermal Valley | 46.11 | 8.11 | 58.12 | 8.61% | 50.08 | 10.21 | 65.43 |
| Overall Score | 51.77FPS | | | 11.63% | 57.79FPS | | |

While running the newly built-in benchmark, you can clearly see the hit from Nvidia’s HBAO+ GameWorks tech: my overall Fury X score is 11.63% higher with it disabled. Notice that my score increases when I use the built-in “AO” that Crystal Dynamics provided. Could this be why several benchmarking sites didn’t disable HBAO+? Who knows. I understand that it takes a while to benchmark and get all of the data ready for readers like you. However, this is a built-in benchmarking tool and it takes less than 15 seconds to change the settings. I think this shows why we need to see more than the one blanket “graphical” setting that the developers give us.
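For anyone who wants to double check the “Performance Difference” column, here is a minimal Python sketch that recomputes it from the average fps figures in the 2560x1440 table above. It assumes the column is the gain of the HBAO+ disabled run relative to the HBAO+ enabled run; the names `RESULTS_1440P` and `percent_gain` are just mine for illustration.

```python
# Average fps from the 2560x1440 internal benchmark table above.
# Each entry is (HBAO+ enabled, HBAO+ disabled with the built-in AO).
RESULTS_1440P = {
    "Mountain Peak": (61.46, 69.00),
    "Syria": (46.97, 53.60),
    "Geothermal Valley": (46.11, 50.08),
    "Overall Score": (51.77, 57.79),
}


def percent_gain(baseline_fps: float, new_fps: float) -> float:
    """Return the gain of new_fps over baseline_fps as a percentage."""
    if baseline_fps <= 0:
        raise ValueError("baseline_fps must be positive")
    return (new_fps - baseline_fps) / baseline_fps * 100.0


if __name__ == "__main__":
    for scene, (hbao_on, hbao_off) in RESULTS_1440P.items():
        gain = percent_gain(hbao_on, hbao_off)
        print(f"{scene}: {hbao_on:.2f} -> {hbao_off:.2f} fps ({gain:+.2f}%)")
```

The results land within a hundredth of a percent of the table, so the small differences are most likely just rounding.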

3840x2160 - Internal Benchmark

Very High preset: HBAO+ ENABLED vs. HBAO+ DISABLED (using the built-in AO)

| Scene | HBAO+ ENABLED: FPS Average | FPS Min | FPS Max | Performance Difference | HBAO+ DISABLED: FPS Average | FPS Min | FPS Max |
|---|---|---|---|---|---|---|---|
| Mountain Peak | 34.30 | 15.66 | 78.48 | 16.59% | 39.99 | 14.57 | 90.11 |
| Syria | 20.46 | 5.22 | 47.21 | 35.04% | 27.63 | 5.29 | 55.95 |
| Geothermal Valley | 23.09 | 4.56 | 36.12 | 14.29% | 26.39 | 5.28 | 37.55 |
| Overall Score | 26.29FPS | | | 20.04% | 31.56FPS | | |

Moving on to the DX11 4K benchmarks, the same issues show up here as well. HBAO+ causes issues with AMD GPUs and perhaps some Nvidia GPUs as well. With HBAO+ disabled my overall fps @ 4K increased 20%, and the average fps for each benchmark level increased by roughly 3-7fps.

So if you are running an AMD Radeon GPU you should DISABLE HBAO+ no matter which settings you decide to use. With that being said, let’s see how well the game performs in-game.

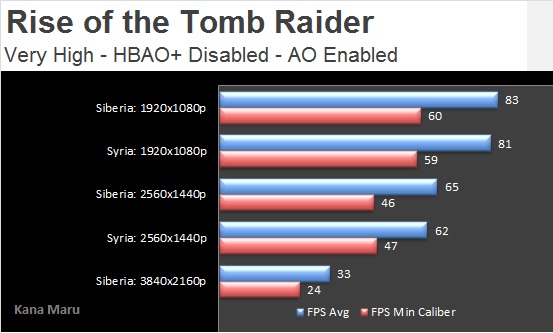

DirectX 11 - Real Time Benchmarks ™

Siberia

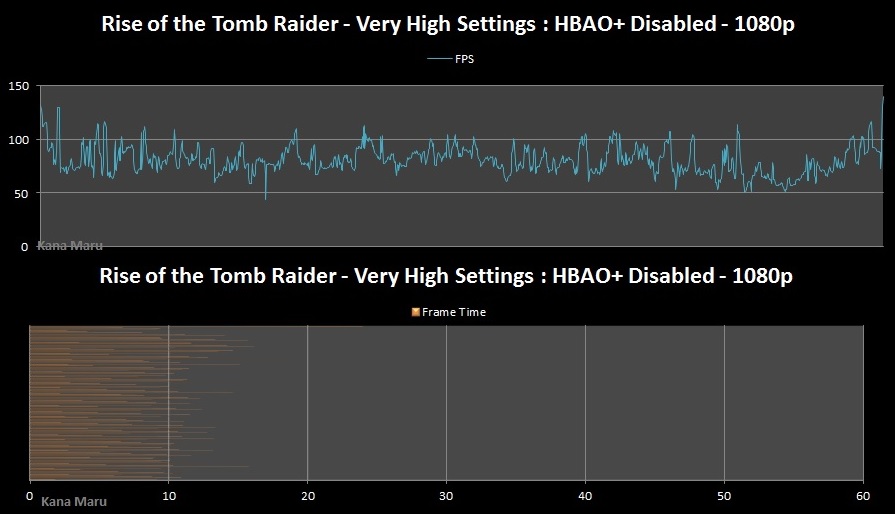

Rise of the Tomb Raider - [ DX11 + Very High Settings - HBAO+ DISABLED] – 1920x1080p

AMD R9 Fury X @ Stock Settings [Crimson 16.3 Beta Drivers]

RAM: DDR3-1600Mhz

FPS Avg: 83fps

FPS Max: 172fps

FPS Min: 42fps

FPS Min Caliber ™: 59.79fps

Frame time Avg: 12ms

Fury X Info:

GPU Temp Avg: 40c

GPU Temp Max: 42c

GPU Temp Min: 34c

CPU info:

CPU Temp Avg: 49c

CPU Temp Max: 61c

CPU Temp Min: 42c

CPU Usage Avg: 20%

CPU Usage Max: 42%

Syria

Rise of the Tomb Raider - [ DX11 + Very High Settings - HBAO+ DISABLED] – 1920x1080p

AMD R9 Fury X @ Stock Settings [Crimson 16.3 Beta Drivers]

RAM: DDR3-1600Mhz

FPS Avg: 81fps

FPS Max: 139fps

FPS Min: 44fps

FPS Min Caliber ™: 58.67fps

Frame time Avg: 12.4ms

Fury X Info:

GPU Temp Avg: 40c

GPU Temp Max: 43c

GPU Temp Min: 35c

CPU info:

CPU Temp Avg: 47c

CPU Temp Max: 58c

CPU Temp Min: 39c

CPU Usage Avg: 19.31%

CPU Usage Max: 38%

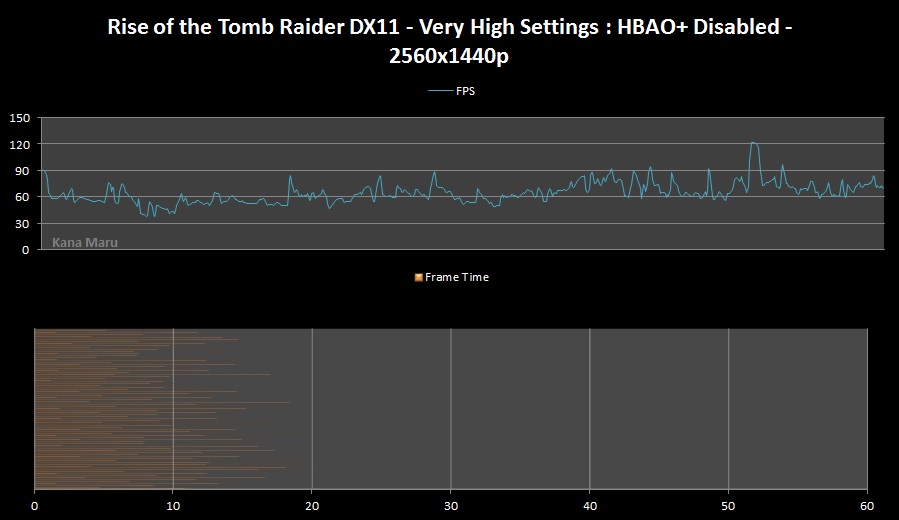

Siberia

Rise of the Tomb Raider - [ DX11 + Very High Settings - HBAO+ DISABLED ] – 2560x1440p

AMD R9 Fury X @ 1100Mhz OC [Crimson 16.3 Beta Drivers] - Overclocked

RAM: DDR3-2088Mhz

FPS Avg: 65fps

FPS Max: 122fps

FPS Min: 38.2fps

FPS Min Caliber ™: 46.25fps

Frame time Avg: 15.5ms

Fury X Info:

GPU Temp Avg: 41c

GPU Temp Max: 43c

GPU Temp Min: 34c

CPU info:

CPU Temp Avg: 40c

CPU Temp Max: 61c

CPU Temp Min: 40c

CPU Usage Avg: 16%

CPU Usage Max: 42%

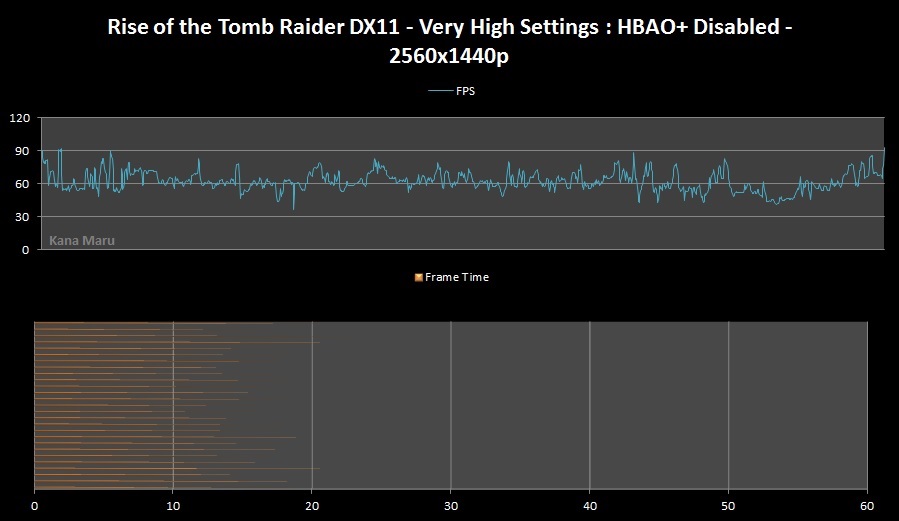

Syria

Rise of the Tomb Raider - [ DX11 + Very High Settings - HBAO+ DISABLED ] – 2560x1440p

AMD R9 Fury X @ 1100Mhz OC [Crimson 16.3 Beta Drivers] - Overclocked

RAM: DDR3-2088Mhz

FPS Avg: 62fps

FPS Max: 92fps

FPS Min: 37fps

FPS Min Caliber ™: 47fps

Frame time Avg: 16ms

Fury X Info:

GPU Temp Avg: 41c

GPU Temp Max: 43c

GPU Temp Min: 37c

CPU info:

CPU Temp Avg: 47c

CPU Temp Max: 63c

CPU Temp Min: 39c

CPU Usage Avg: 14%

CPU Usage Max: 44%

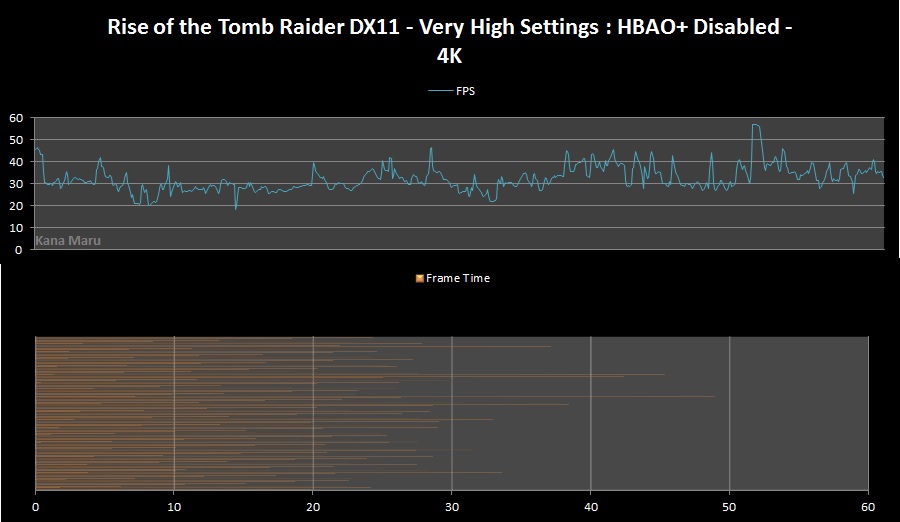

Siberia

Rise of the Tomb Raider - [ DX11 + Very High Settings - HBAO+ DISABLED ] – 3840x2160p- 4K

AMD R9 Fury X @ Stock Settings [Crimson 16.3 Beta Drivers]

RAM: DDR3-1600Mhz

FPS Avg: 33fps

FPS Max: 57fps

FPS Min: 18.4fps

FPS Min Caliber ™: 24fps

Frame time Avg: 31ms

Fury X Info:

GPU Temp Avg: 37c

GPU Temp Max: 39c

GPU Temp Min: 31c

CPU info:

CPU Temp Avg: 35c

CPU Temp Max: 47c

CPU Temp Min: 30c

CPU Usage Avg: 10%

CPU Usage Max: 32%

Rise of the Tomb Raider - Fury X - AMD 4GB GPU Optimization

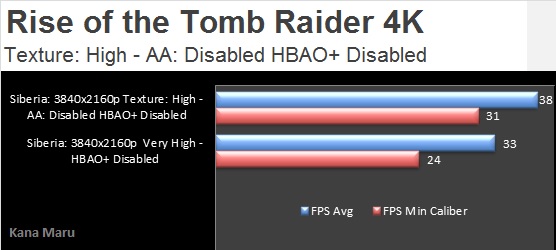

Although the Fury X handles Rise of the Tomb Raider [Very High Preset: HBAO+ Disabled] very well, with only 4GB of vRAM a few changes can improve your gameplay experience & frame times. The "Texture Quality" setting requires a ton of vRAM, and the game warns you that you'll want a GPU with more than 4GB of vRAM. All of the tests I've performed on the previous pages did include AA and the Very High preset's textures, but it's hard to notice a difference above 1080p. There's also really no reason to use the Anti-Aliasing settings at 4K or 1440p anyway.

After setting the Very High settings in the options you'll need to lower 3 settings to gain around 6 or more fps @ 4K.

- Disable Anti-Aliasing

- Lower the Texture Quality to "High"

- Disable Nvidia HBAO+ and use the built-in Ambient Occlusion

All other settings in the "Very High" preset can remain the same. I have run another Real Time Benchmark™ to show the performance increase, and I also ran the Internal Benchmark.

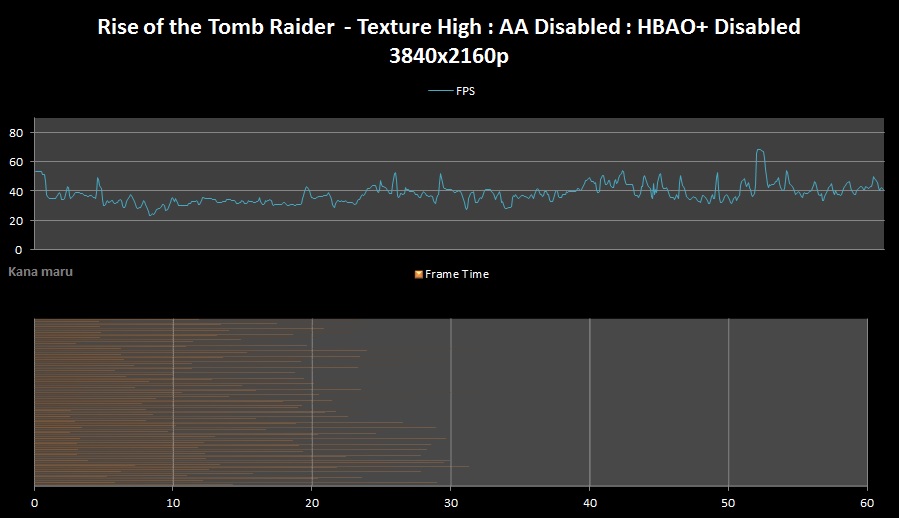

Siberia

Rise of the Tomb Raider - [DX11 + Texture High + AA Disabled + No HBAO+] – 3840x2160p- 4K

AMD R9 Fury X @ Stock Settings [Crimson 16.3 Beta Drivers]

RAM: DDR3-2088Mhz

FPS Avg: 38fps

FPS Max: 69fps

FPS Min: 23fps

FPS Min Caliber ™: 31fps

Frame time Avg: 26.33ms

Fury X Info:

GPU Temp Avg: 42c

GPU Temp Max: 44c

GPU Temp Min: 34c

CPU info:

CPU Temp Avg: 42c

CPU Temp Max: 64c

CPU Temp Min: 38c

CPU Usage Avg: 9%

CPU Usage Max: 33%

2560x1440 & 3840x2160 - Internal Benchmark

| 2560x1440p | FPS Average | FPS Min | FPS Max |
|---|---|---|---|
| Mountain Peak | 84.54 | 36.10 | 157.72 |
| Syria | 63.20 | 10.51 | 81.35 |
| Geothermal Valley | 59.69 | 13.10 | 75.99 |
| Overall Score | 69.49fps | | |

| 3840x2160p | FPS Average | FPS Min | FPS Max |
|---|---|---|---|
| Mountain Peak | 47.89 | 20.13 | 117.70 |
| Syria | 35.56 | 10.83 | 61.51 |
| Geothermal Valley | 35.45 | 10.03 | 39.88 |
| Overall Score | 39.88fps | | |

As you can see above, the performance increases are worth it. Although the Fury X performs well with the Very High settings, the optimized settings bring the 4K frame rate closer to 40fps than 30fps. You can also lower other graphical settings that you find insignificant to the overall Image Quality. No matter what you decide to do, just remember to disable HBAO+. I discussed the implications of enabling HBAO+ a few pages back. I've been playing this game a lot lately and I've run tons of benchmarks during gameplay. After lowering the settings I didn't notice a difference while playing. The Image Quality is still great.
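To put a number on "worth it", here is a small Python sketch that compares the Internal Benchmark overall scores from the earlier Very High, HBAO+ disabled runs (57.79fps at 1440p, 31.56fps at 4K) against the optimized runs in the tables above. It assumes the runs are otherwise comparable, which I can't guarantee since clocks and RAM speed varied between some of my tests. The names `OVERALL_SCORES` and `uplift` are only for illustration.

```python
# Overall scores (fps) from the Internal Benchmark.
# Each entry is (Very High with HBAO+ disabled, optimized settings).
OVERALL_SCORES = {
    "2560x1440": (57.79, 69.49),
    "3840x2160": (31.56, 39.88),
}


def uplift(before_fps: float, after_fps: float) -> tuple[float, float]:
    """Return (absolute fps gain, percentage gain) going from before to after."""
    if before_fps <= 0:
        raise ValueError("before_fps must be positive")
    delta = after_fps - before_fps
    return delta, delta / before_fps * 100.0


if __name__ == "__main__":
    for resolution, (before, after) in OVERALL_SCORES.items():
        delta, pct = uplift(before, after)
        print(f"{resolution}: +{delta:.2f} fps ({pct:+.1f}%)")
```

On these figures the 4K overall score gains a little over 8fps, which lines up with the "6 or more fps" estimate.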

DirectX 12 Benchmarks

Alright, there are some serious issues with DirectX 12. Rise of the Tomb Raider is now the second game to implement DX12 poorly. Gears of War: UE was the first, and it features old, archaic source code with DX12 tacked on. By the way, AMD has addressed their issues in GOW:UE with the latest patch, and performance has increased by 60% with the Fury X. Rise of the Tomb Raider will definitely need more patches from the developers soon, and AMD will have to work with Crystal Dynamics to get DX12 running well. People across the web are getting worse results under DX12 with AMD cards, which is laughable because DX12 is based heavily on AMD's Mantle API and AMD GPUs are built to benefit from low-level concurrent operations.

While running Rise of the Tomb Raider at 4K resolution with DirectX 12, the experience is horrible. Seriously, it's atrocious. When my Fury X runs out of vRAM, weird artifacts pop up on the screen, along with black spots where the 3D assets and textures are still loading. The frame times are horrible. However, using the same settings @ 4K with DirectX 11 shows very different results. DirectX 11 performed very well, and you can tell that AMD has been working hard on lowering their DX11 overhead. This was unexpected behavior for DirectX 12, and it will more than likely get patched in the coming weeks. I've run my 1440p benchmarks using both DX11 and DX12 so that we can compare them.

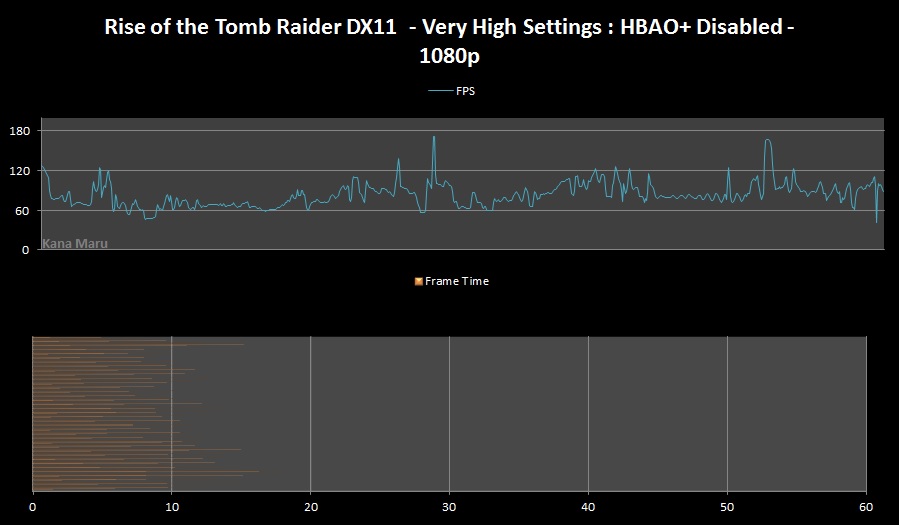

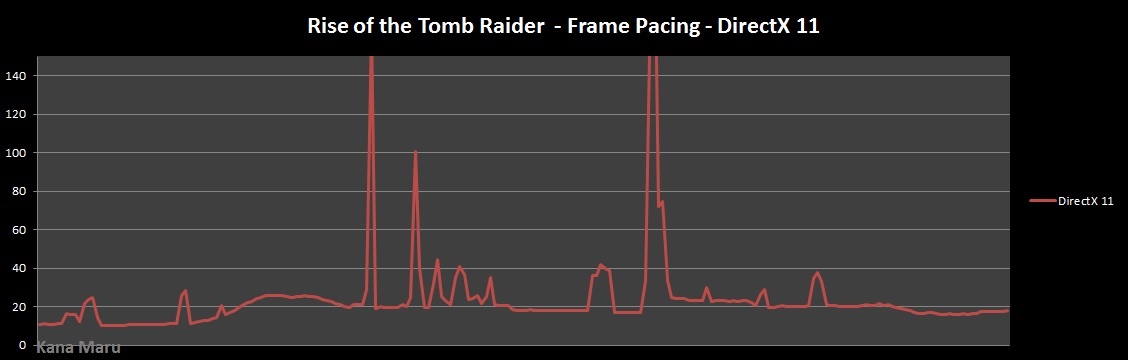

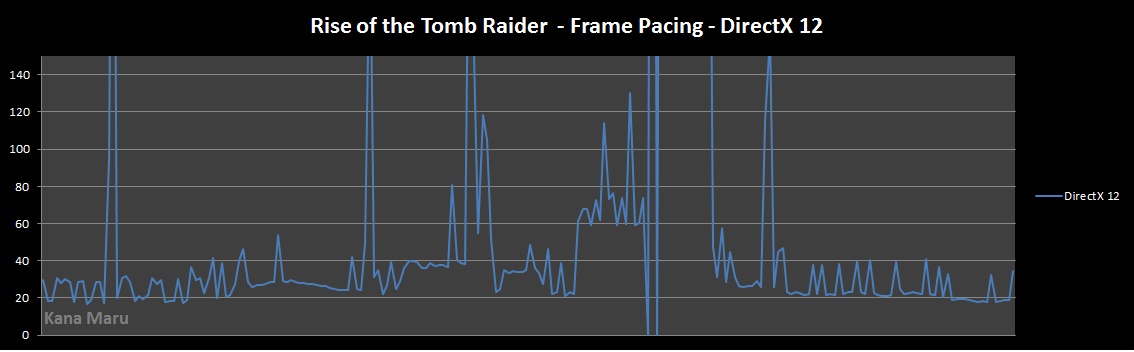

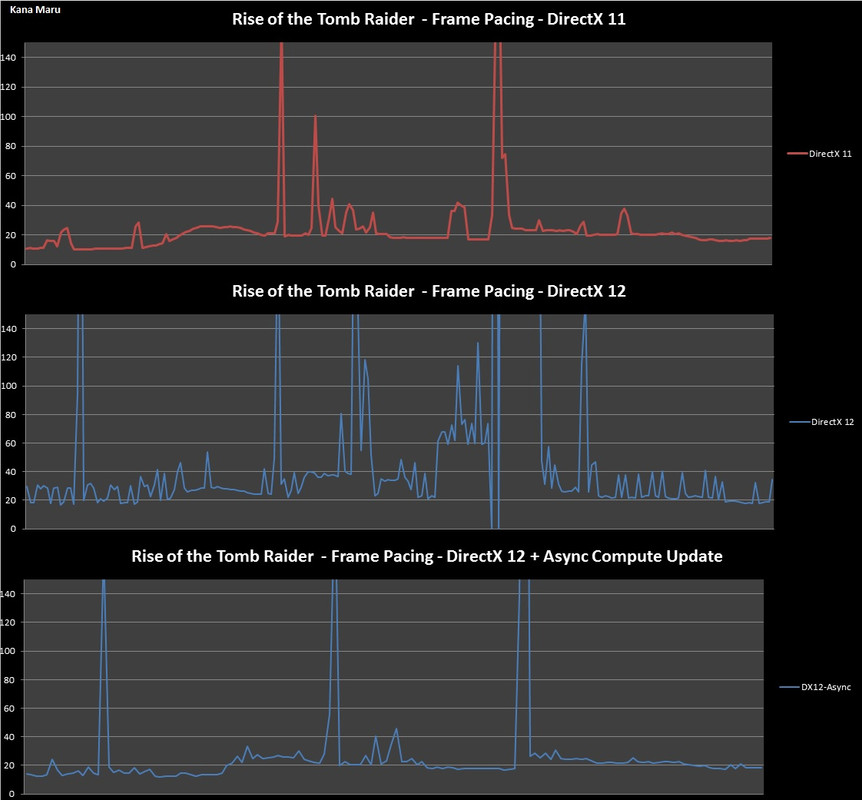

DirectX 11 vs DirectX 12 - Frame Pacing

First, I'll let it be known that the massive spikes in DX11 did not affect the FPS; they occurred during loading periods. I'm showing the RAW data here, so some rotten data points shouldn't be taken seriously. The main point is the massive "spiking" that occurs while running DX12.

Notice how smooth the DX11 experience is compared to the DX12 experience. The internal benchmark runs 3 different areas, so the spikes you see in the DX11 frame time chart occur while the next area is loading. In the DX12 chart you'll notice spiking during the first benchmark, but the majority of it stays below 33ms, which means you won't really notice it. However, you will notice stutters near the end of the first benchmark and onward. The second area in the benchmark shows how screwed up DX12 is in this game. The frame times are all over the place, but no such problems are noticeable in DX11 during the benchmark. The PC developers and AMD will definitely need to get together to address this issue.

Another issue I've noticed is that DX12 benchmarks aren't reliable for comparison at the moment. In other words, you cannot perform a proper DirectX 11 vs DirectX 12 comparison. From what I've noticed, Rise of the Tomb Raider will not allow DX12 to go above 60fps. I will continue to look into this and hopefully I can find a way around the limitation. The main issue is that the frame times are spiking all over the place in DX12, and this is why DX11 performs better.
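If you log your own frame times, a rough way to apply the 33ms rule of thumb is to count how many frames take longer than that (33.3ms per frame works out to 30fps). The Python sketch below is only an illustration: `SAMPLE_FRAME_TIMES_MS` is made-up data, not my raw capture, and `summarize_spikes` is a hypothetical helper name.

```python
from typing import Sequence

# 1000 ms / 30 fps, roughly 33.3 ms: frames slower than this fall below 30fps.
THRESHOLD_MS = 1000.0 / 30.0


def summarize_spikes(frame_times_ms: Sequence[float],
                     threshold_ms: float = THRESHOLD_MS) -> dict:
    """Count the frames whose frame time exceeds threshold_ms."""
    if not frame_times_ms:
        raise ValueError("frame_times_ms must not be empty")
    spikes = [t for t in frame_times_ms if t > threshold_ms]
    return {
        "frames": len(frame_times_ms),
        "spikes": len(spikes),
        "spike_share_pct": len(spikes) * 100.0 / len(frame_times_ms),
        "worst_ms": max(frame_times_ms),
    }


# Hypothetical sample, in milliseconds, purely for demonstration.
SAMPLE_FRAME_TIMES_MS = [16.2, 17.1, 15.9, 28.4, 16.5, 41.7, 16.8, 30.2, 55.3, 16.4]

if __name__ == "__main__":
    print(summarize_spikes(SAMPLE_FRAME_TIMES_MS))
```

Loading spikes between benchmark areas would need to be trimmed out first, otherwise they inflate the count for both APIs.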

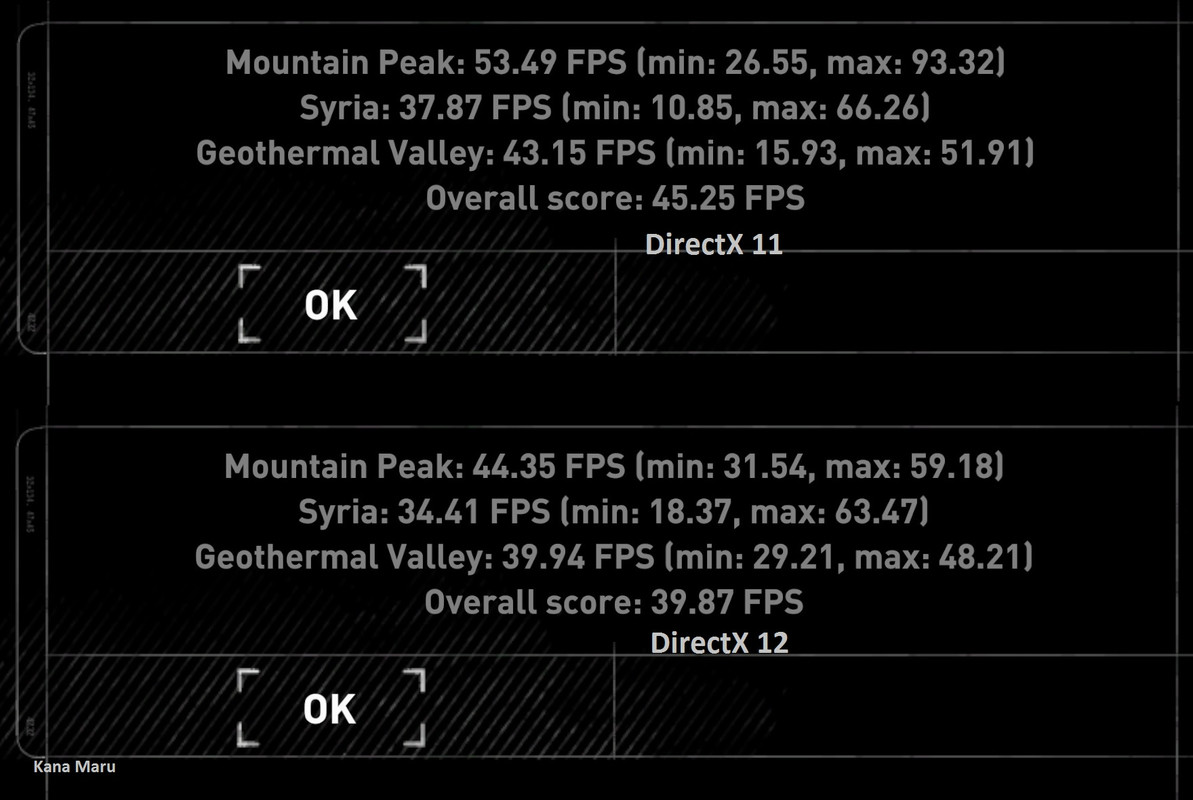

Just to ensure that I'm not hitting the Fury X's limited vRAM at high resolutions, I ran the internal benchmark @ 2560x1440 using the "Medium" graphical settings. I wanted to make sure I wasn't experiencing the same problems found with limited vRAM and throttling. Let's see how crap the DX12 implementation is now.

As you can see above, the DX11 API is running 13.50% better than the DX12 API. This should not be happening and is definitely a major issue. What's weird is that the internal benchmark shows "63.47fps" as the max for one of the DX12 benchmarks, yet I couldn't see anything past 60fps during my testing. It wouldn't really matter at this point anyway, since the DX12 implementation is horrid at the moment. So we can eliminate vRAM bottlenecking as the cause. Hitman runs DX12 much better. Both games come from Square Enix, with different devs of course.

Update: A patch was recently released for this game. I've tested it, and DX12 is still broken for AMD cards. The performance is actually worse in DX12. I guess we will have to wait even longer for the devs to fix the DX12 issues.

DirectX 12 Benchmarks Results with Async Compute

Nearly 3 months later, we are still watching Crystal Dynamics attempt to "learn and implement" DX12. Rise of the Tomb Raider was developed with the DirectX 11 API, and the DX12 API was implemented later through an update. Previous DX12 results have been abysmal, but now they have included Async Compute for AMD's GCN 1.1 architecture. My Fury X is GCN 1.2, so there shouldn't be any issues. I have run several benchmarks to see whether Crystal Dynamics has improved their DX12 implementation. Anything should be better than what we received nearly 3 months ago. Originally I ran the benchmarks using the latest drivers from AMD [Crimson 16.7.2 Beta] and had poor performance with the DX12 API with Async Compute. I decided to double check my results, so I removed and reinstalled the drivers. It appears my driver issue has been resolved.

Let's take a look at the Frame Pacing when comparing DX11, DX12 and DX12 with Async Compute:

The raw data in the charts does show that the developers have fixed the constant stutter. There are still some issues that need to be addressed, but overall the game is very playable at 1440p and 4K. You can ignore the massive spikes that go off the charts, since those only occurred while the game was loading the next area. The developers now have DX12 working properly with Fullscreen and Exclusive Fullscreen. DX12 with Async Compute pacing is identical to the DX11 API. Hopefully more developers build their games around DX12 instead of implementing wrappers and late implementations. It took a while, but DX12 is finally playable and offers a better experience according to the chart above. The internal benchmark has been pretty accurate compared with my Real Time Benchmarks™ so far. Let's see how DX12 with Async Compute translates to actual gameplay. Remember that all of my benchmarks are using SMAA, even at 4K. I like to max all settings when benchmarking.

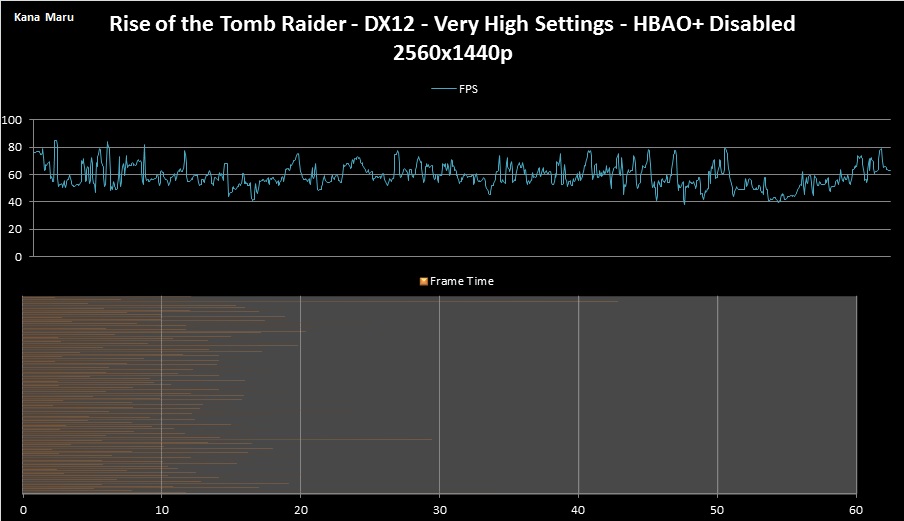

Rise of the Tomb Raider [DX12 + Very High Settings - HBAO+ DISABLED] – 2560x1440p

AMD R9 Fury X @ Stock Settings [Crimson 16.7.2 Beta Drivers]

RAM: DDR3-1600Mhz

FPS Avg: 59fps

FPS Max: 85fps

FPS Min Caliber™: 43.9fps

Frame time Avg: 16.94ms

Fury X Info:

GPU Temp Avg: 42c

GPU Temp Max: 43c

GPU Temp Min: 31c

CPU info:

CPU Temp Avg: 43c

CPU Temp Max: 57c

CPU Temp Min: 36c

CPU Usage Avg: 16%

CPU Usage Max: 45%

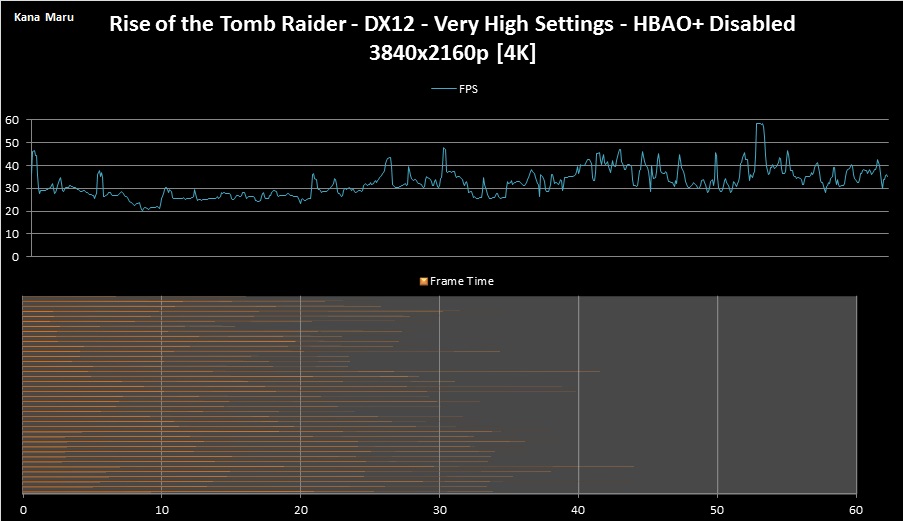

Rise of the Tomb Raider [DX12 + Very High Settings - HBAO+ DISABLED] - 3840x2160 – 4K

AMD R9 Fury X @ Stock Settings [Crimson 16.7.2 Beta Drivers]

RAM: DDR3-1600Mhz

FPS Avg: 32.32fps

FPS Max: 58.3fps

FPS Min Caliber™: 23.35fps

Frame time Avg: 30.94ms

Fury X Info:

GPU Temp Avg: 42.31c

GPU Temp Max: 45c

GPU Temp Min: 31c

CPU info:

CPU Temp Avg: 40c

CPU Temp Max: 60c

CPU Temp Min: 37c

CPU Usage Avg: 9.1%

CPU Usage Max: 55%
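As a quick sanity check on the two DX12 runs above, the average frame time should simply be 1000ms divided by the average fps. Here is a minimal Python sketch of that conversion; `frame_time_ms` and `DX12_FPS_AVG` are names I made up for the example.

```python
def frame_time_ms(fps: float) -> float:
    """Convert an average frame rate (fps) into an average frame time (ms)."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# Average fps from the two DX12 Real Time Benchmarks above.
DX12_FPS_AVG = {
    "2560x1440": 59.0,
    "3840x2160": 32.32,
}

if __name__ == "__main__":
    for resolution, fps in DX12_FPS_AVG.items():
        print(f"{resolution}: {fps} fps -> {frame_time_ms(fps):.2f} ms per frame")
```

Both results agree with the reported frame time averages (16.94ms and 30.94ms) to within rounding.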

The game performs well at 1440p and 4K. There was no micro-stutter and there were no issues running the game in Exclusive Fullscreen Mode, a mode that causes issues in some games when it comes to DX12. Let's see how well DX11 compares to DX12 with Async.

DX12 with Async Compute increases the FPS Min by 33% at 1440p and 27% at 4K. The actual average frame rate is basically the same regardless of which API you use. Usually DX12 offers better frame rate averages, as seen in games such as Hitman and Ashes of the Singularity, but that is not the case in Rise of the Tomb Raider. However, DX12 would be the go-to API, since its minimum frame rates are much higher than the DX11 minimums. I'll tip my hat to Crystal Dynamics for sure, since they have fixed their previous DX12 issues on AMD GPUs, but they must learn how to get more out of the DX12 API. Rise of the Tomb Raider was built around DX11, so DX12 is like on-the-job training for the developers at this point.

If you want more frames per second @ 4K with a Fury X or another 4K capable 4GB GPU, you can disable AA [FXAA\SMAA] and lower the Texture Quality from Very High to the High setting. All other settings can use the Very High preset. I'll probably get around to running tests with the optimized settings above when I get more time. Check back for updates and be sure to check out this game if you haven't yet. It's a great title and one of the best looking games to release this year.

Upcoming Updates:

- Add Internal DX12 Results Images

- Add Real Time Benchmarks™ for DX11 and DX12

- Add CPU Usage Chart for DX12

- Add 4K, 1440p, 3200x1800 and possibly 1080p Real Time Benchmarks™

- Add HQ Gameplay Images

- Wait for DX12 to be fixed and update the results.

- Play and enjoy the game