DirectX 12 Benchmarks

Alright, there are some serious issues with DirectX 12. Rise of the Tomb Raider is now the second game to implement DX12 poorly. Gears of War: Ultimate Edition was the first, featuring old, archaic source code with DX12 tacked on. AMD has addressed its issues in GOW:UE with the latest patch, by the way, and performance has increased by 60% on the Fury X. Rise of the Tomb Raider will definitely need more patches from the developers soon, and AMD will have to work with Crystal Dynamics to get DX12 running well. People across the web are getting worse results under DX12 with AMD cards, which is laughable because DX12 is based heavily on AMD's Mantle API and AMD GPUs are designed to benefit from low-level concurrent operations.

Running Rise of the Tomb Raider at 4K resolution with DirectX 12 is a horrible experience. Seriously, it's atrocious. When my Fury X runs out of vRAM, weird artifacts pop up on the screen, along with black spots where the 3D assets and textures are loading. The frame times are horrible. However, using the same settings at 4K with DirectX 11 shows very different results. DirectX 11 performed very well, and you can tell that AMD has been working hard on lowering its DX11 overhead. This was unexpected behavior for DirectX 12. DX12 will more than likely get patched in the coming weeks. I've run my 1440p benchmarks under both DX11 and DX12 so that we can compare them.

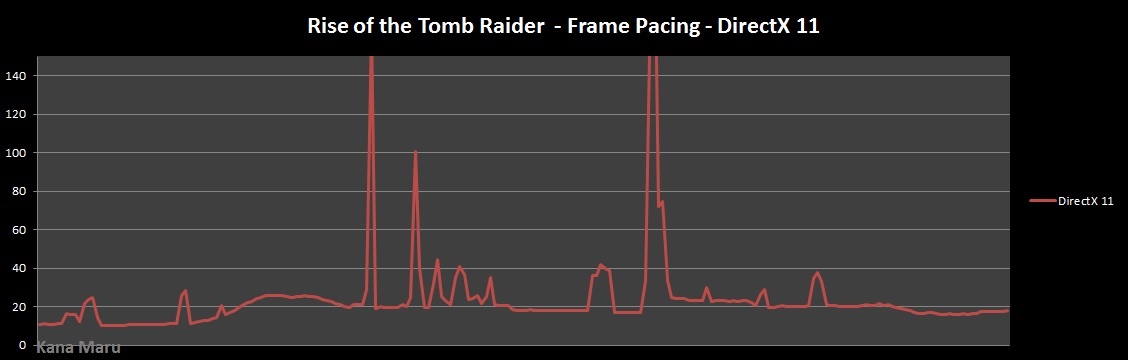

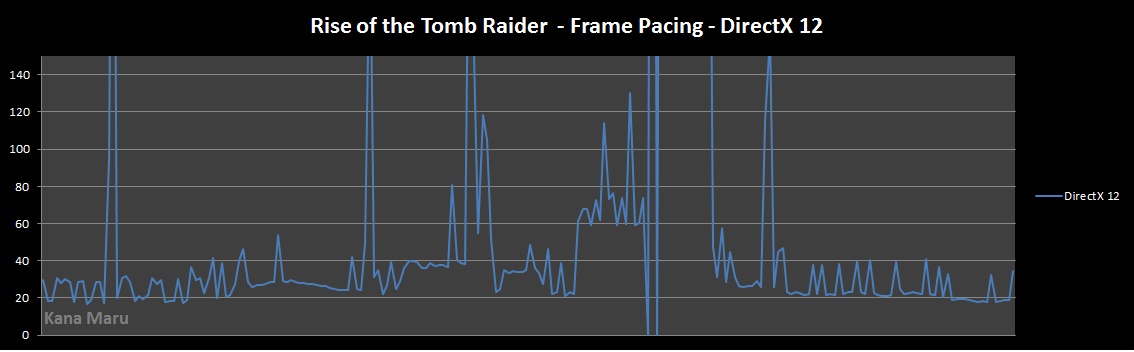

DirectX 11 vs DirectX 12 - Frame Pacing

First, I'll let it be known that the massive spikes in DX11 did not affect the FPS; they occurred during loading periods. I'm showing the RAW data here, so some of the rotten data shouldn't be taken seriously. The main point is the massive "spiking" that occurs while running DX12.

Notice how smooth the DX11 experience is compared to the DX12 experience. The internal benchmark runs through 3 different areas, so the spikes you see in the DX11 frame time chart occur while those areas are loading. In the DX12 chart you'll notice spiking during the first benchmark, but the majority of it stays below 33ms (roughly 30fps), which means you won't really notice it. However, you will notice stutters near the end of the first benchmark and beyond. The second area in the benchmark shows how screwed up DX12 is in this game. The frame times are all over the place, but no such problems are noticeable in DX11 during the benchmark. The PC developers and AMD will definitely need to get together to address this issue.
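The 33ms figure above corresponds to roughly 30fps (1000 / 33.3). As a rough sketch of how a raw frame-time log could be summarized, the snippet below counts the frames that go over that threshold and reports the average FPS. The function name and the sample values are hypothetical and are not taken from my captures.

```python
from statistics import mean

# Frames slower than this (about 30 fps) tend to be felt as stutter.
STUTTER_THRESHOLD_MS = 1000.0 / 30.0


def summarize_frame_times(frame_times_ms, threshold_ms=STUTTER_THRESHOLD_MS):
    """Summarize a list of per-frame render times given in milliseconds."""
    if not frame_times_ms:
        raise ValueError("frame_times_ms must not be empty")
    # Frames that took longer than the threshold count as "slow".
    slow = [t for t in frame_times_ms if t > threshold_ms]
    avg_ms = mean(frame_times_ms)
    return {
        "frames": len(frame_times_ms),
        "avg_fps": 1000.0 / avg_ms,
        "worst_ms": max(frame_times_ms),
        "slow_frames": len(slow),
        "slow_share_pct": 100.0 * len(slow) / len(frame_times_ms),
    }


# Made-up sample: mostly ~16.7 ms frames with two spikes.
sample = [16.7, 16.9, 16.5, 45.0, 16.8, 17.0, 60.2, 16.6]
print(summarize_frame_times(sample))
```

Looking at the share of slow frames alongside the average is the point here: two runs can post a similar average FPS while one of them stutters far more often.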

Another issue I've noticed is that DX12 benchmarks aren't reliable for comparison at the moment. In other words, you cannot perform a fair DirectX 11 vs DirectX 12 comparison. From what I've seen, Rise of the Tomb Raider will not allow DX12 to go above 60fps. I will keep digging into this, and hopefully I can find a way around the limitation. The main issue is that the frame times are spiking all over the place in DX12, which leaves DX11 performing better.

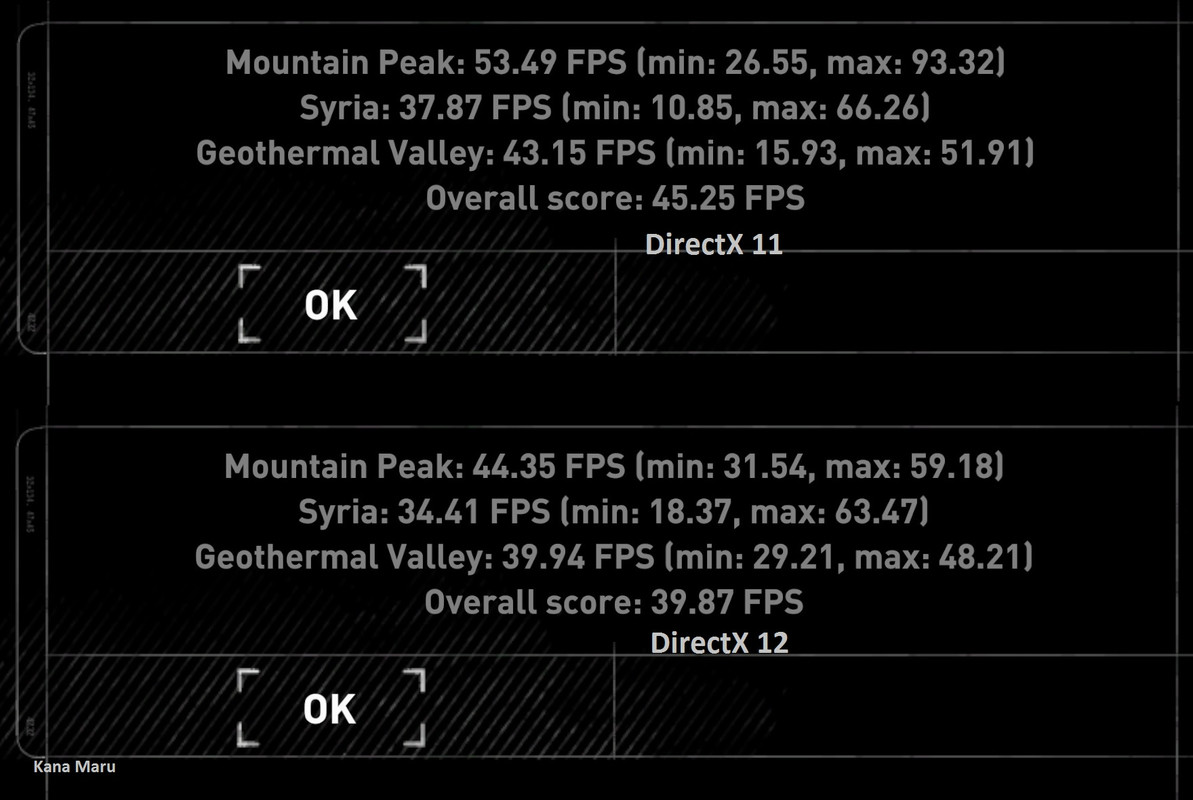

Just to ensure that I'm not hitting the Fury X's limited vRAM at high resolutions, I ran a benchmark using the internal tool at 2560x1440 with the "Medium" graphical settings. I wanted to make sure I wasn't experiencing the same problems caused by limited vRAM and throttling. Let's see how crap the DX12 implementation is now.

As you can see above, the DX11 API is running 13.50% better than the DX12 API. This should not be happening and is definitely a major issue. What's weird is that the internal benchmark shows "63.47fps" as the max for one of the DX12 benchmarks, yet I couldn't see anything past 60fps during my testing. It wouldn't really matter at this point anyway, since the DX12 implementation is horrid at the moment. So we can rule out vRAM bottlenecking. Hitman runs DX12 much better. Both games come from Square Enix, with different devs of course.
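For reference, a percentage like the one above is just the relative difference between two average frame rates. Here is a minimal sketch of that arithmetic. The helper name is hypothetical and the FPS values are placeholders, not my measured numbers.

```python
def percent_faster(fps_a, fps_b):
    """Return how much faster fps_a is than fps_b, as a percentage of fps_b."""
    if fps_b <= 0:
        raise ValueError("fps_b must be positive")
    return (fps_a - fps_b) * 100.0 / fps_b


# Placeholder values chosen only to illustrate the arithmetic.
dx11_avg_fps = 113.5
dx12_avg_fps = 100.0
print(f"DX11 is {percent_faster(dx11_avg_fps, dx12_avg_fps):.2f}% faster than DX12")
```

Note that the baseline matters: dividing by the DX12 result gives "DX11 is X% faster", while dividing by the DX11 result gives the smaller "DX12 is Y% slower" figure.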

Update: A patch was recently released for this game. I've retested, and DX12 is still broken for AMD cards. The performance is actually worse in DX12. I guess we will have to wait even longer for the devs to fix the DX12 issues.