Why AMD and HBM Matter

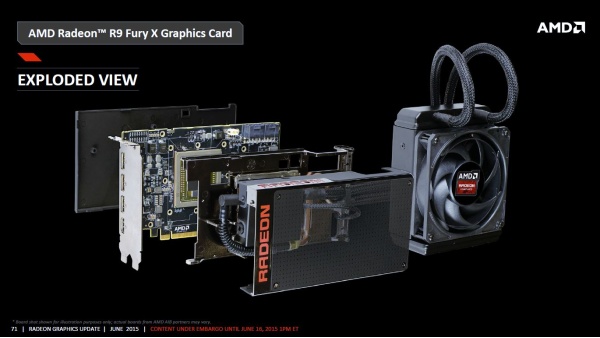

I’ve finally purchased one of the hottest selling cards on the market (no pun intended), the watercooled AMD Radeon R9 Fury X. I couldn’t wait to get my hands on this card and benchmark it myself. Now what would make AMD bring back the Fury brand from 1999, when ATI was a separate company from AMD? There must be something exciting, right? Well, for starters, all you ever hear anyone talk about now is “HBM”, or High Bandwidth Memory. HBM is indeed a big deal since it eliminates a lot of the issues that were once faced with GDDR5. HBM relieves memory bandwidth bottlenecks and allows data to be moved quickly and efficiently. Speaking of “efficient”, the power efficiency is much better than GDDR5 as well, since HBM runs at a lower voltage.

HBM works by communicating with the GPU via an interposer. HBM RAM is stacked and passes data between the layers via “TSVs”, or through-silicon vias. HBM also has a much wider bus, reaching 1024 bits per stack compared to 32 bits per GDDR5 chip. This allows HBM to move more than 100GB/s per stack. Yes, more than 100GB/s! AMD, along with SK Hynix, has definitely created revolutionary technology that technologists and PC gamers will love.
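To put the per-stack figure in context, here is a rough back-of-the-envelope sketch of peak bandwidth as bus width times per-pin data rate. The 1 Gbps per pin for first-generation HBM (500MHz, double data rate) and the 7 Gbps per pin for a fast GDDR5 chip are assumptions on my part, and the function name is made up for illustration.

```python
def peak_bandwidth_gbs(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s: pins * Gbit/s per pin / 8 bits per byte."""
    return bus_width_bits * gbps_per_pin / 8

# Assumed data rates: HBM1 at 500MHz DDR = 1 Gbps per pin, GDDR5 at 7 Gbps per pin.
hbm_stack = peak_bandwidth_gbs(1024, 1.0)   # one HBM stack
gddr5_chip = peak_bandwidth_gbs(32, 7.0)    # one GDDR5 chip
fury_x_total = 4 * hbm_stack                # four stacks make up the 4096-bit interface

print(f"HBM stack:  {hbm_stack:.0f} GB/s")
print(f"GDDR5 chip: {gddr5_chip:.0f} GB/s")
print(f"Fury X (4 stacks): {fury_x_total:.0f} GB/s")
```

Under those assumptions a single stack lands at 128 GB/s, and four stacks add up to the 512 GB/s on the Fury X spec sheet.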

You can say what you want about AMD, but there is one thing you must admit: they have been innovating for a very long time. Just to name a few other innovations that AMD has been a part of: x86-64, IMC (Integrated Memory Controllers), on-die GPUs, multicore CPUs [consumer side], WOL/Magic Packet [along with HP], GDDR and Mantle. Speaking of the last two innovations, AMD had a lot to do with the creation of GDDR as a standard. Let’s also remember that AMD was the first company to release a GPU with GDDR memory. Many people think that Mantle is dead. I beg to differ. Microsoft and The Khronos Group have taken the best parts of Mantle and incorporated its low-level, low-overhead API design into DirectX 12 and OpenGL/Vulkan respectively.

As a consumer and a gamer, especially a PC gamer, I must thank AMD. DX11 was nice, but without DX12’s low overhead there would be virtually no hype for a lot of Windows 7 & 8 gamers. AMD Mantle has had a lot of support from some of the biggest AAA games on the market. Vulkan will also allow developers to use an open API that won’t be limited to specific platforms the way DX12 is. Vulkan is also getting a lot of press and support from some of the biggest companies in the world. This is great for programmers and gamers who avoid Windows or simply don’t use the Windows OS.

Why AMD's Radeon R9 Fury X Matters

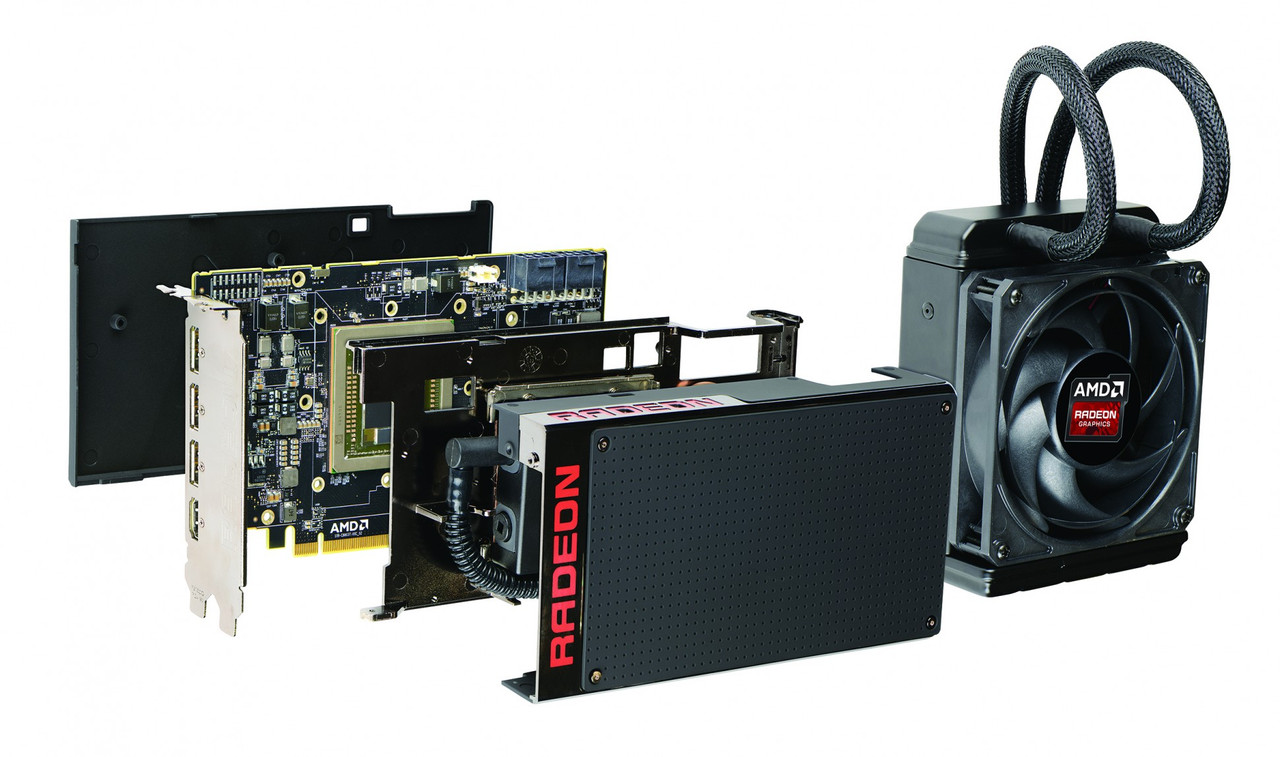

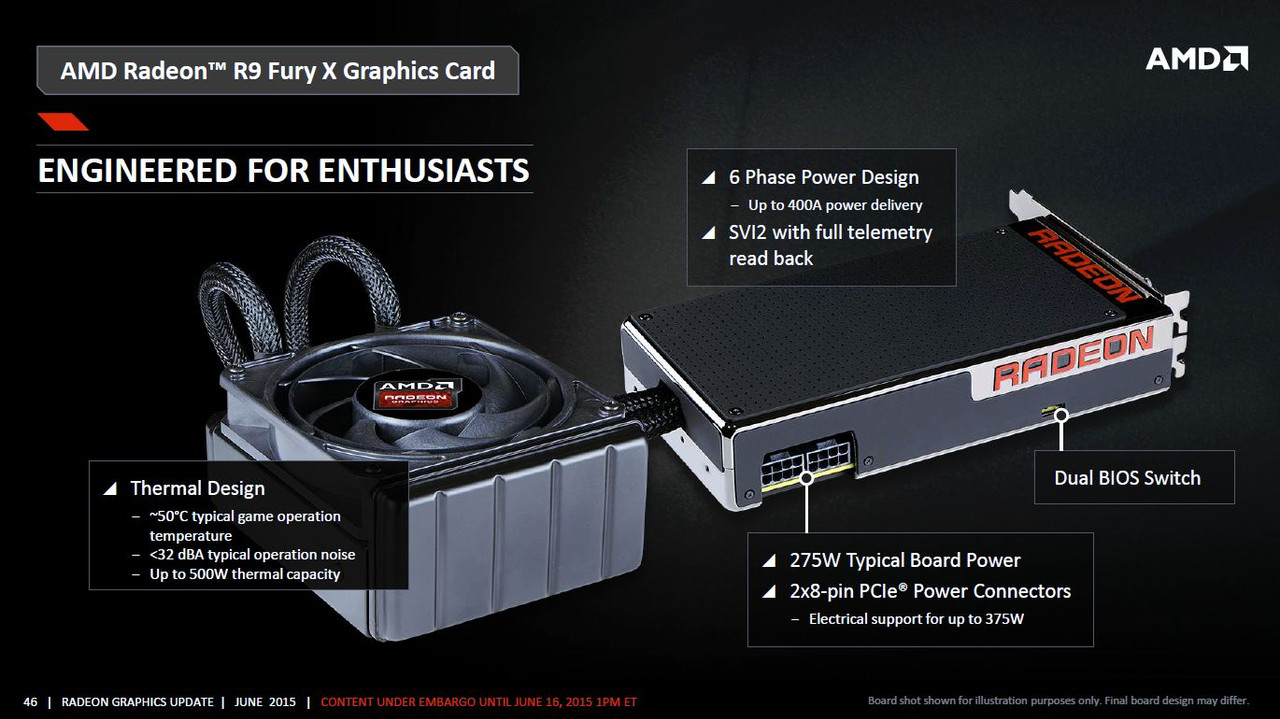

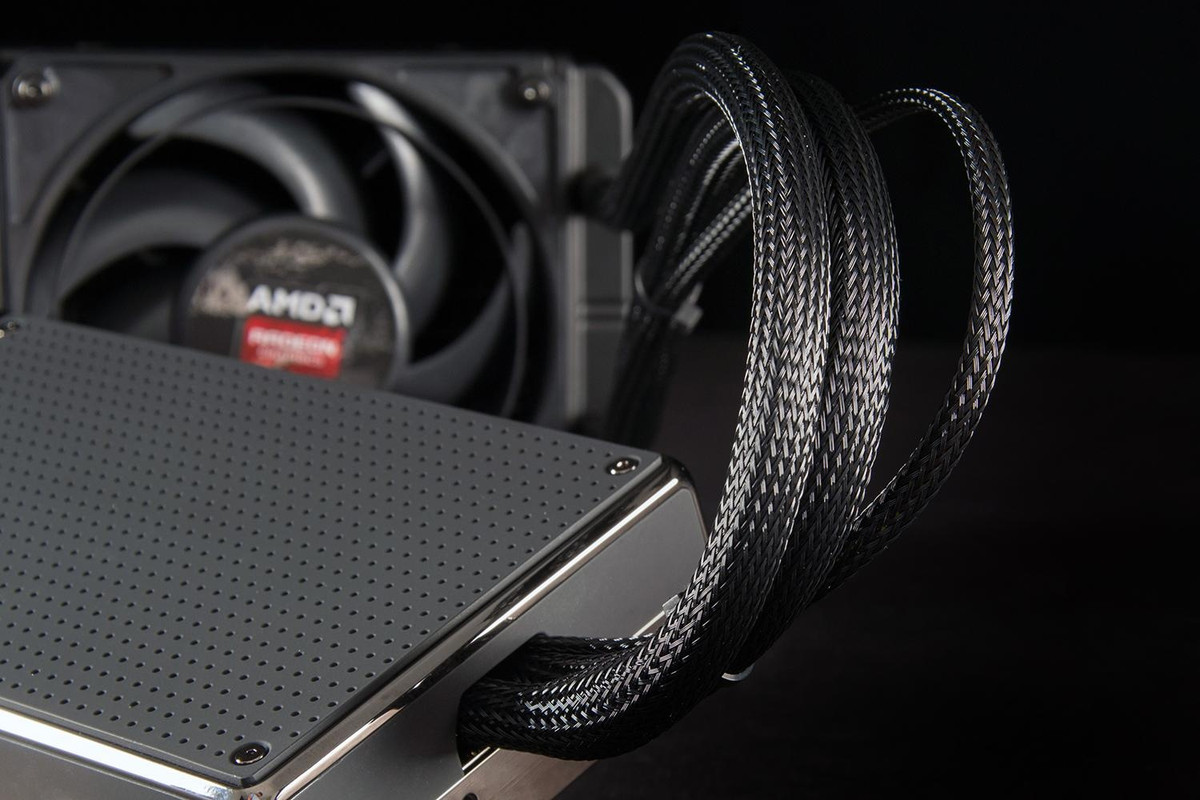

The Fury X matters because this is AMD’s latest and greatest modern GPU. The competition is fierce in the GPU market at the moment. AMD had a lot of success with their dual-GPU 7990 and 200 series. AMD’s GPU Bitcoin performance made it very hard to locate a 7990. AMD did face some criticism from gamers and reviewers. Long story short, there were many jokes and memes about volcanoes. The heat output and power usage were an issue. Those issues have been eliminated with the Fury X. I absolutely love the fact that AMD has given us a water-cooled solution. HBM allows the card to use less power and it also allows AMD and 3rd-party companies to manufacture smaller PCBs. Less power consumption usually means less heat, and when you add a water cooler it only gets better. Tessellation performance has also been increased.

The Fury X is priced at an MSRP of $649.99, but the price varies since the card is hard to find and everyone is trying to make a profit nowadays. Therefore the Fury X is in direct competition with the Nvidia GTX 980 Ti, which can be priced anywhere from its $649.99 MSRP up to $1049.99. Nvidia’s plan was to undercut the Fury X hype [and sales] by releasing the GTX 980 Ti before AMD’s E3 presentation & Fury X release date. It’s a hard race and AMD needs to recapture more of the GPU market share. At least that is their goal. I can tell that AMD really tried to impress gamers with this card. Competition is a good thing and it’s looking a bit one-sided now.

AMD’s Fury X has all of the latest and greatest technology within it. It is 4K ready and supports DirectX 12, Vulkan, Mantle, OpenGL 4.5 and of course HBM. GCN has also been updated for a better visual experience and power saving features. The 4096-bit high-bandwidth memory interface should definitely allow for some extreme graphical settings. Fury X supports a dual BIOS and cool LED lights which can be changed to different colors. I have the ASUS version of the Fury X. All versions of the Fury X look and perform the same anyway.

Gaming Rig & Fury X Specifications

What makes my review a bit more unique than other Fury X reviews on the web is that I’m still using the X58. Yes, the 2008 platform that hosts Intel’s 1st-generation Nehalem-based CPUs and technology. Intel is now moving into their 5th generation. That means I’m sporting PCIe 2.0/2.1; however, I am running a highly overclocked X5660. I needed to eliminate as many bottlenecks as I could to squeeze out as much performance as I could. Fury X is built for PCIe 3.0, but PCIe is backwards compatible so there are no issues here. Fury X is a beast with 4096 stream processors and 8.9 billion transistors, and it can reach up to 8.6 TFLOPS. Let’s see what my X58 beast & Fury X can accomplish together. To be honest, I'm sick and tired of seeing 1080p benchmarks with high-end cards. Seriously, this isn't the mid-range GPU area. If you are spending more than $300 on a GPU you must be looking to max out games at resolutions higher than 1080p. We are literally getting hundreds of frames @ 1080p at this point. All of my benchmarks will use 1440p & 4K resolutions.
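Since the card is built for PCIe 3.0 but my board tops out at PCIe 2.0, here is a rough sketch of the theoretical x16 link bandwidth for each generation. The transfer rates and encoding overheads are the published PCIe figures rather than anything I measured, and the helper name is just illustrative.

```python
def pcie_x16_gbs(gt_per_s: float, payload_bits: int, total_bits: int, lanes: int = 16) -> float:
    """Theoretical one-direction bandwidth in GB/s after line-encoding overhead."""
    return gt_per_s * lanes * (payload_bits / total_bits) / 8

pcie2 = pcie_x16_gbs(5.0, 8, 10)      # PCIe 2.0: 5 GT/s per lane, 8b/10b encoding
pcie3 = pcie_x16_gbs(8.0, 128, 130)   # PCIe 3.0: 8 GT/s per lane, 128b/130b encoding

print(f"PCIe 2.0 x16: {pcie2:.2f} GB/s")
print(f"PCIe 3.0 x16: {pcie3:.2f} GB/s")
```

On paper the X58 slot offers roughly half the link bandwidth of a PCIe 3.0 slot, which is exactly why I wanted to test this card on the older platform.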

Fury X Specs:

GPU: 28nm "Fiji"

GPU Core Clock: 1050MHz

Stream Processing Units: 4096 - 64 Compute Units

Memory: 4GB HBM @ 500MHz

Memory Bandwidth: 512 GB/s

Memory Interface: 4096-bit HBM

Form Factor: Full Height & Dual Slot

API: DirectX 12, Mantle, OpenGL 4.5, Vulkan & OpenCL 2.0

PCIe: 3.0
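The 8.6 TFLOPS figure falls straight out of the specs above. Here is a minimal sketch of the arithmetic, assuming each stream processor retires one fused multiply-add (counted as 2 floating-point operations) per clock and each GCN compute unit holds 64 stream processors. The function name is hypothetical.

```python
def peak_fp32_tflops(stream_processors: int, core_mhz: float, flops_per_clock: int = 2) -> float:
    """Peak single-precision TFLOPS, assuming one FMA (2 FLOPs) per processor per clock."""
    return stream_processors * flops_per_clock * core_mhz * 1e6 / 1e12

compute_units = 64
processors_per_cu = 64  # assumed GCN layout: 64 stream processors per compute unit
stream_processors = compute_units * processors_per_cu

print(stream_processors)                              # 4096
print(f"{peak_fp32_tflops(stream_processors, 1050):.1f} TFLOPS")
```

This is a theoretical peak; real games never keep every stream processor busy on every clock.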

Gaming Rig Specs:

Motherboard: ASUS Sabertooth X58

CPU: Xeon X5660 @ 4.8GHz

CPU Cooler: Antec Kuhler 620 watercooled - Pull

GPU: AMD Radeon R9 Fury X Watercooled - Push

RAM: 12GB DDR3-1675MHz [3x4GB]

SSD: x2 128GB RAID 0

HDD: x4 Seagate Barracuda 7,200rpm High Performance Drives [x2 RAID 0 setup]

PSU: EVGA SuperNOVA G2 1300W 80+ GOLD

Monitor: Dual 24inch 3D Ready – Resolution - 1080p, 1440p, 1600p, 4K [3840x2160] and higher.

OS: Windows 10 Pro 64-bit

GPU Drivers: Catalyst 15.7.1 [7/29/2015]

GPU Speed: AMD R9 Fury X @ Stock Settings

Real Time Benchmarks™

Real Time Benchmarks™ is something I came up with to differentiate actual gameplay from standalone benchmark tools. Sometimes in-game benchmark tools don't provide enough information. I basically play the games for a predetermined time or a specific online map. It all depends on the game mode and the level/mission at hand. I tend to go for the most demanding levels and maps. I capture all of the frames and I use 4 different methods to ensure the frame rates are correct for comparison. In rare cases I'm forced to use 5 methods to determine the fps. It takes a while, but it is worth it in the end. This is the least I can do for the gaming community and X58 users who are wondering if our platform can use high-end GPUs in 2015.

-FPS Min Caliber?-

You’ll notice that I added something named “FPS Min Caliber”. Basically, FPS Min Caliber is something I came up with to set it apart from the absolute minimum FPS, which could simply be a data loading point during gameplay. The FPS Min Caliber™ is my way of letting you know the lowest FPS you’ll see during the worst 1% of gameplay. The minimum fps [FPS min] can be very misleading: FPS min is what you'll encounter only 0.1% of the time. Obviously the average FPS and Frame Time are what you'll encounter 99% of your playtime. I think it's a feature you'll learn to love. I plan to continue using this in the future as well.
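As a rough illustration of the idea (not necessarily the exact tooling behind the numbers in this review), the sketch below derives these metrics from a list of per-frame times in milliseconds. It assumes FPS Min Caliber is read as the frame rate at the 1% mark of the slowest frames and FPS Min as the 0.1% mark. The function name and the sample capture are made up.

```python
def fps_summary(frame_times_ms):
    """Summarize a capture given per-frame render times in milliseconds."""
    fps_sorted = sorted(1000.0 / t for t in frame_times_ms)  # slowest frames first

    def percentile_low(pct):
        # Frame rate at the edge of the slowest pct% of frames (nearest rank).
        index = max(0, round(len(fps_sorted) * pct / 100.0) - 1)
        return fps_sorted[index]

    total_seconds = sum(frame_times_ms) / 1000.0
    return {
        "fps_avg": len(frame_times_ms) / total_seconds,
        "fps_max": fps_sorted[-1],
        "fps_min": percentile_low(0.1),        # assumed: 0.1% low
        "fps_min_caliber": percentile_low(1),  # assumed: 1% low
        "frame_time_avg_ms": sum(frame_times_ms) / len(frame_times_ms),
    }

# Made-up capture: 989 smooth frames, 10 slow frames and one loading hitch.
sample = [20.0] * 989 + [40.0] * 10 + [100.0]
stats = fps_summary(sample)
for name, value in stats.items():
    print(f"{name}: {value:.1f}")
```

In this made-up capture the single 100ms hitch drags FPS Min down to 10fps, while FPS Min Caliber sits at 25fps, which is a fairer picture of the worst stretches you would actually notice.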

I feel that no GPU review is complete unless you include the Crysis series. These games can make GPUs burn to the core. Crysis 2 with DX11 and mods requires much more GPU horsepower than you think. Crysis 3 is a gorgeous title as well. No mods are needed in Crysis 3 to stress test any GPU currently on the market. I can also benchmark Crysis 1 if you guys want me to.

Crysis 2 [100% Maxed + MaLDoHD 4.0 Graphic Mod] – 3840×2160 [4K]

Gameplay Duration: 14 minutes 8 seconds

Captured 25,225 frames

FPS Avg: 30fps

FPS Max: 55fps

FPS Min: 20fps

FPS Min Caliber ™: 22fps

Frame time Avg: 33.6ms
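If you want to sanity check any of these results yourself, the average FPS and average frame time both follow from the captured frame count and the gameplay duration. A minimal sketch using the Crysis 2 4K numbers above (the helper name is hypothetical):

```python
def cross_check(frames: int, minutes: int, seconds: int):
    """Return (average fps, average frame time in ms) implied by a capture."""
    duration_s = minutes * 60 + seconds
    fps_avg = frames / duration_s
    return fps_avg, 1000.0 / fps_avg

# Crysis 2 at 4K: 25,225 frames captured in 14 minutes 8 seconds.
fps_avg, frame_time_ms = cross_check(25_225, 14, 8)
print(f"{fps_avg:.1f} fps, {frame_time_ms:.1f} ms")
```

That works out to just under 30fps and about 33.6ms per frame, which lines up with the figures listed.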

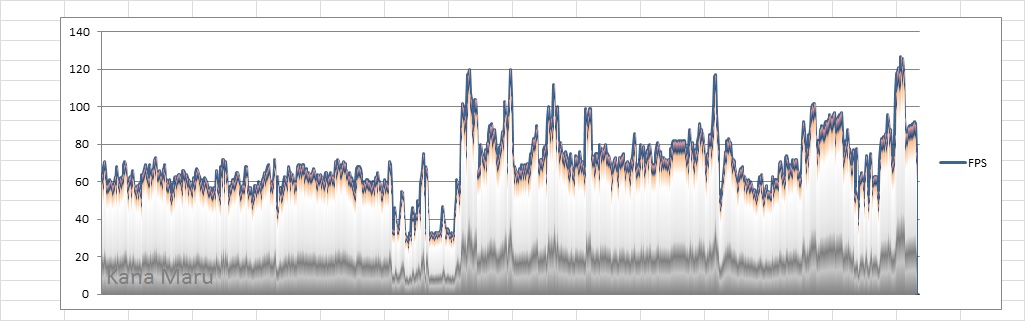

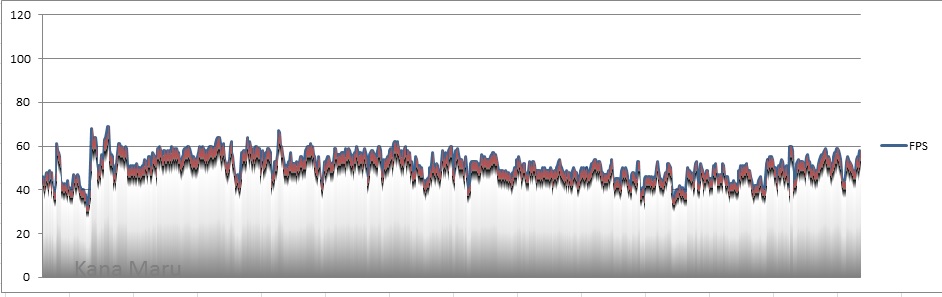

Crysis 2 [100% Maxed + MaLDoHD 4.0 Graphic Mod] – 2560×1440

Gameplay Duration: 8 minutes 11 seconds

Captured 33,198 frames

FPS Avg: 68fps

FPS Max: 101fps

FPS Min: 35fps

FPS Min Caliber ™: 49fps

Frame time Avg: 14.8ms

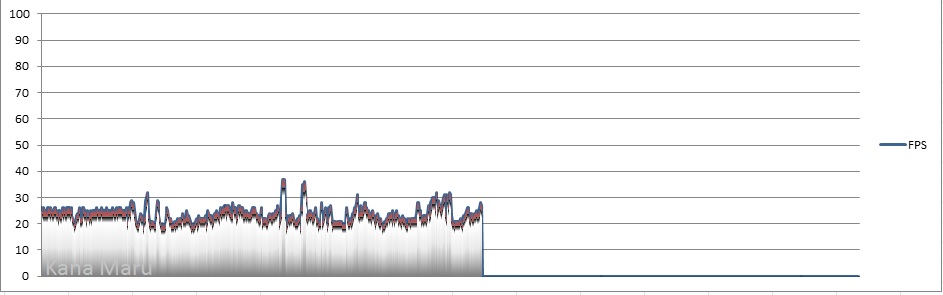

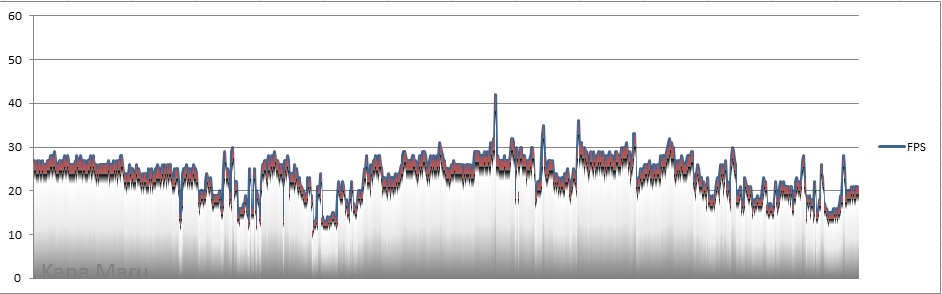

Crysis 3 [Very High Settings + MSAA x4] – 3840×2160 [4K] – Level: “Safeties Off”

Gameplay Duration: 8 minutes 16 secs

Captured 11,860 frames

FPS Avg: 24fps

FPS Max: 38fps

FPS Min: 13fps

FPS Min Caliber ™: 19fps

Frame time Avg: 41.8ms

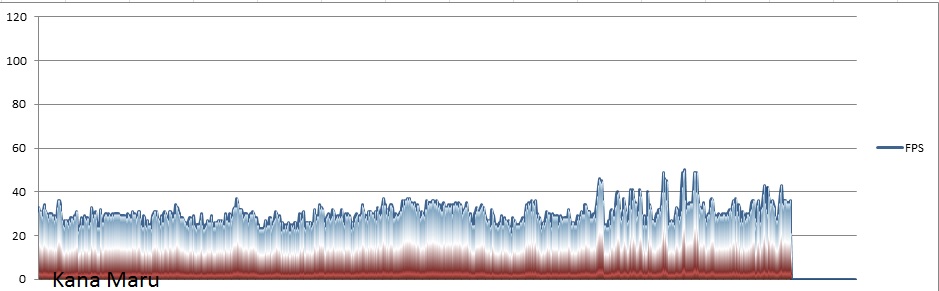

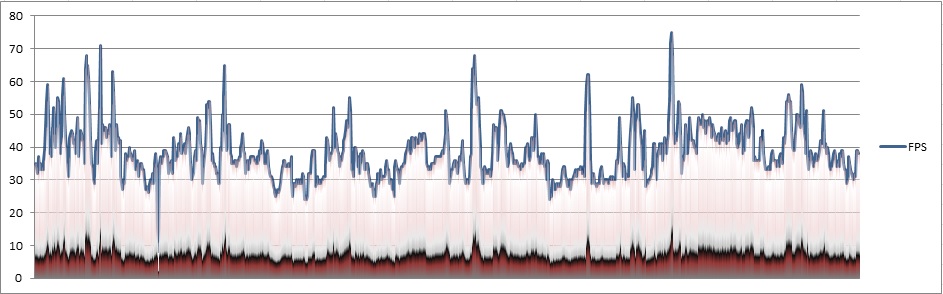

Despite the low frame rate average the Fury X gave a pleasant gameplay experience. MSAA x4 @ 4K does push the 4GB HBM and Fiji to their limits. MSAA x8 is simply unplayable, but you really wouldn’t need to use that much AA at 4K anyway. Yes, that last sentence did rhyme pretty well. Since my 1080p Blu-ray movies run @ 24fps, playing Crysis 3 @ 3840x2160-24p felt very cinematic. There was no button lag or micro stutter. The frame rate chart tells us a lot. Notice that the frames per second are steady. The FPS only goes up and never really drops below 24fps for 99% of the time I played. I literally have 5 different Crysis 3 benchmarks, so 8 minutes of “Safeties Off” should give you a good idea of what to expect with MSAA x4.

Crysis 3 [Very High Settings + MSAA x2] – 3840×2160 [4K]

Gameplay Duration: 22 minutes 57 secs

Captured 36,992 frames

FPS Avg: 27fps

FPS Max: 43fps

FPS Min: 16fps

FPS Min Caliber ™: 19fps

Frame time Avg: 37.2ms

Crysis 3 [Very High Settings + MSAA Disabled] – 3840×2160 [4K]

Gameplay Duration: 22 minutes 57 secs

Captured 35,242 frames

FPS Avg: 33fps

FPS Max: 44fps

FPS Min: 14fps

FPS Min Caliber ™: 26fps

Frame time Avg: 30.3ms

Crysis 3 [Very High Settings + MSAA x8] – 2560x1440

Gameplay Duration: 21 minutes 11 seconds

Captured 49,878 frames

FPS Avg: 39fps

FPS Max: 77fps

FPS Min: 15fps

FPS Min Caliber ™: 24fps

Frame time Avg: 25.5ms

Crysis 3 [Very High Settings + MSAA Disabled] – 2560x1440

Gameplay Duration: 15 minutes 19 seconds

Captured 62,687 frames

FPS Avg: 68fps

FPS Max: 128fps

FPS Min: 28fps

FPS Min Caliber ™: 32fps

Frame time Avg: 14.7ms

It's obvious that Fury X is 4K ready and was made for 4K gaming. I tried to max out the game when possible. Crysis 3 @ 4K + MSAAx8 was unplayable. This could be because of the HBM1 limitation [4GBs]. Anti-Aliasing really eats up the RAM. Overall I must say that I am impressed with the performance. Gameplay was smooth. I tried to play the most intense levels in both games.

Real Time Benchmarks™

Hitman: Absolution [Ultra Settings + MSAA x2] – 3840×2160 [4K]

Gameplay Duration: 7 minutes 20 seconds

Captured 35,067 frames

FPS Avg: 80fps [79.55]

FPS Max: 111fps

FPS Min: 46fps

FPS Min Caliber ™: 48fps

Frame time Avg: 12.6ms

Hitman: Absolution [Ultra Settings + MSAA x4] – 3840×2160 [4K]

Gameplay Duration: 6 minutes 3 seconds

Captured 16,341 frames

FPS Avg: 45fps

FPS Max: 63fps

FPS Min: 32fps

FPS Min Caliber ™: 34fps

Frame time Avg: 22.2ms

Hitman: Absolution was tough to run when it released a few years back. Even Crossfire X and SLI setups had issues handling this game. IO Interactive also stated that the engine was built for next gen and was being held back by consoles. On the PC the game looks great, but it is no match for the Fury X. These benchmarks were done while playing the Chinatown level.

Tomb Raider [Ultimate Setting] – 3840×2160 [4K]

Gameplay Duration: 28 minutes 9 secs

Captured 83,732 frames

FPS Avg: 51fps [50.76]

FPS Max: 69fps

FPS Min: 35fps

FPS Min Caliber ™: 36fps

Frame time Avg: 19.5ms

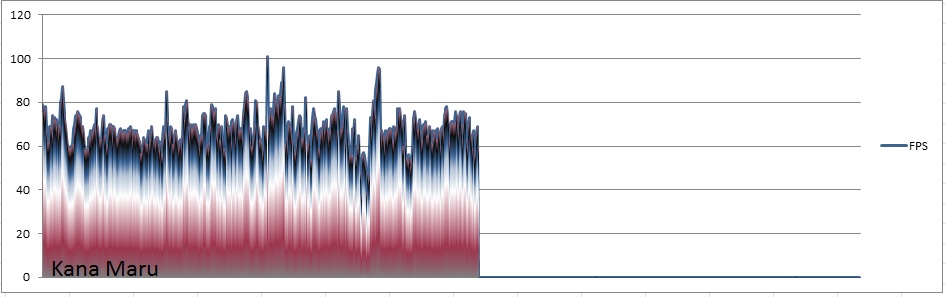

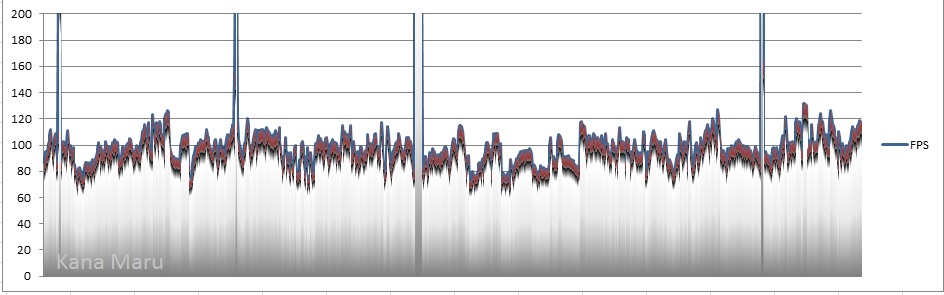

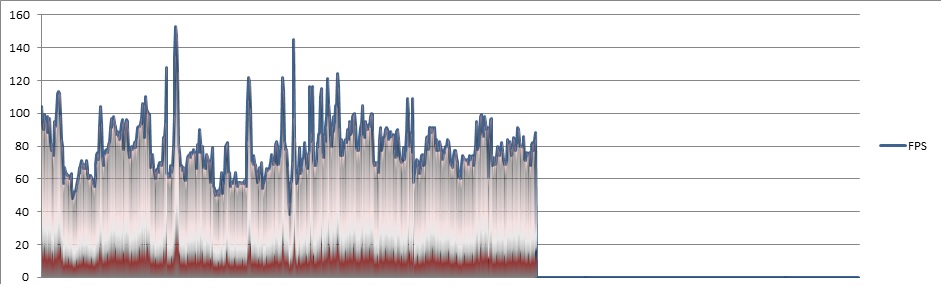

Tomb Raider [Ultimate Setting] – 2560x1440

Gameplay Duration: 27 minutes 41 secs

Captured 165,711 frames

FPS Avg: 100fps [99.75]

FPS Max: 291fps

FPS Min: 47fps

FPS Min Caliber ™: 71fps

Frame time Avg: 10.5ms

Yes your eyes are correct. 4K gave me 51fps. This game is definitely optimized. 1080p must be laughable considering that I benched 100fps @ 1440p. I wish more games were optimized like this title.

Real Time Benchmarks™

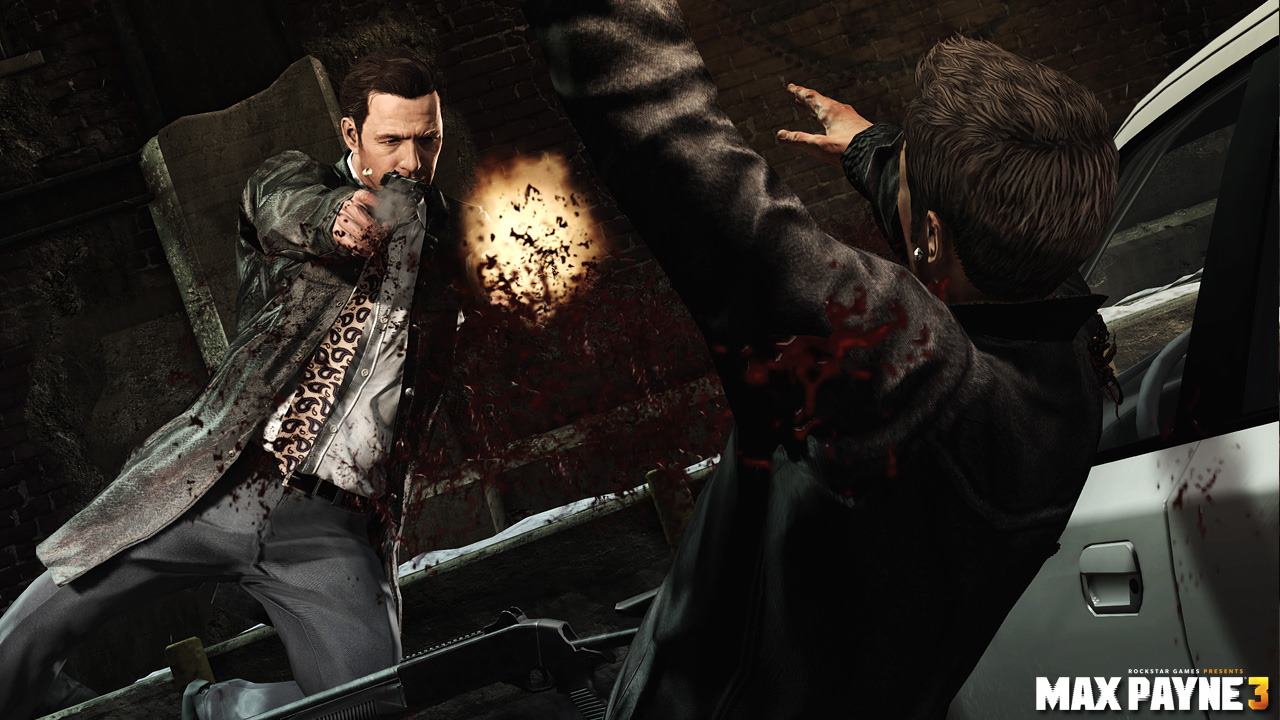

Max Payne 3 [Max Settings + SSAO + MSAA x4] – 3840×2160 [4K]

Gameplay Duration: 13 minutes 58 seconds

Captured 41,360 frames

FPS Avg: 49fps [49.35]

FPS Max: 55fps

FPS Min: 25fps

FPS Min Caliber ™: 32fps

Frame time Avg: 20.3ms

Instead of performing the same old "Bioshock: Infinite" benchmark that we've seen in nearly every review, I decided to test out Max Payne 3. I believe that this game should be used in GPU reviews more often. The game has a ton of graphical settings. MSAA x8 isn't possible to test since it requires 5777MB of VRAM and the game refuses to let you exceed your GPU's VRAM. Therefore I was stuck with max settings and MSAA x4. Fury X doesn't max the game at 60fps, but it sure runs smoothly. Turn down MSAA or turn it off and you get well over 60fps.

Metro: Last Light [Max Settings + SSAA x4] – 3840×2160 [4K]

Gameplay Duration: 19 minutes 25 seconds

Captured 26,740 frames

FPS Avg: 23fps

FPS Max: 43fps

FPS Min: 11fps

FPS Min Caliber ™: 13fps

Frame time Avg: 43.6ms

Metro: Last Light [Max Settings + SSAA x2] – 3840×2160 [4K]

Gameplay Duration: 15 minutes 21 seconds

Captured 42,121 frames

FPS Avg: 46fps

FPS Max: 65fps

FPS Min: 19fps

FPS Min Caliber ™: 24fps

Frame time Avg: 21.9ms

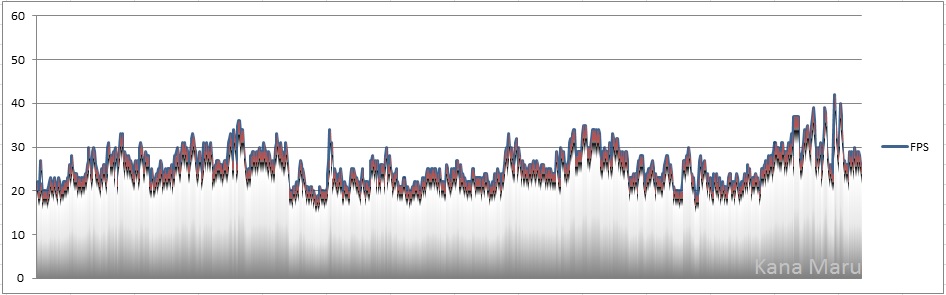

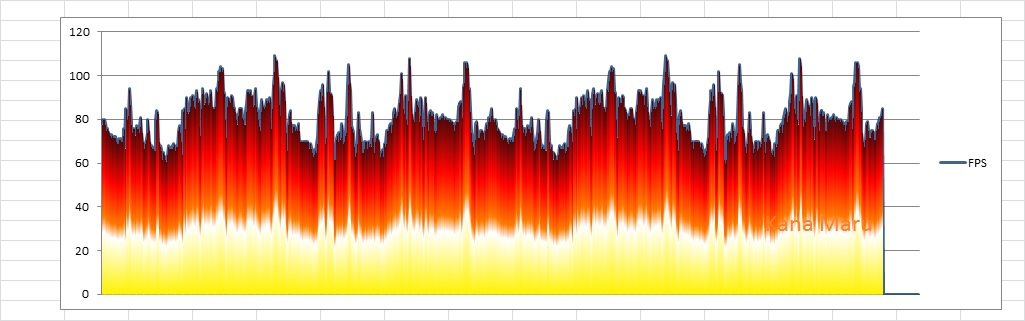

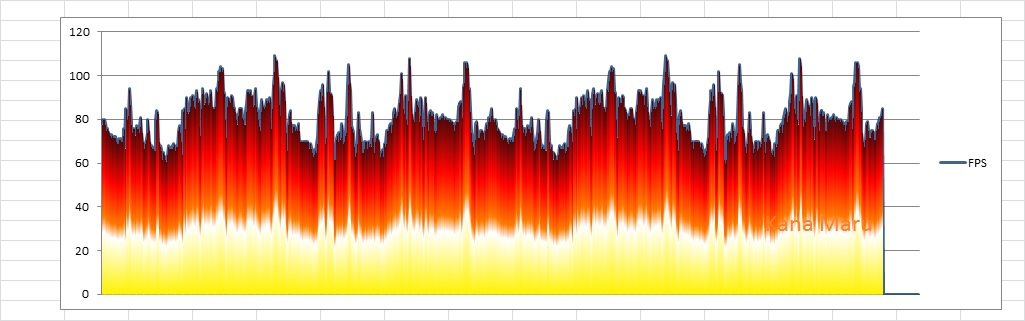

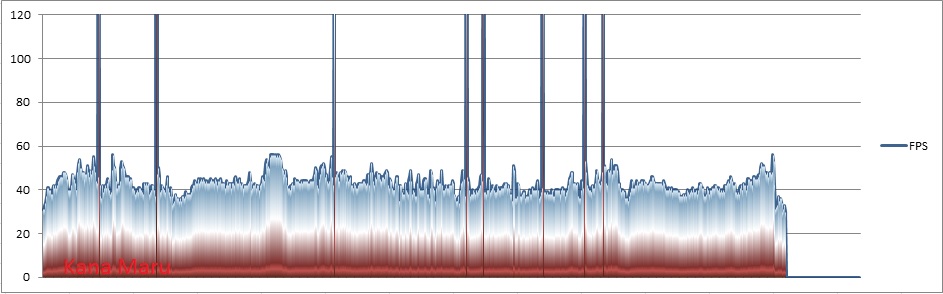

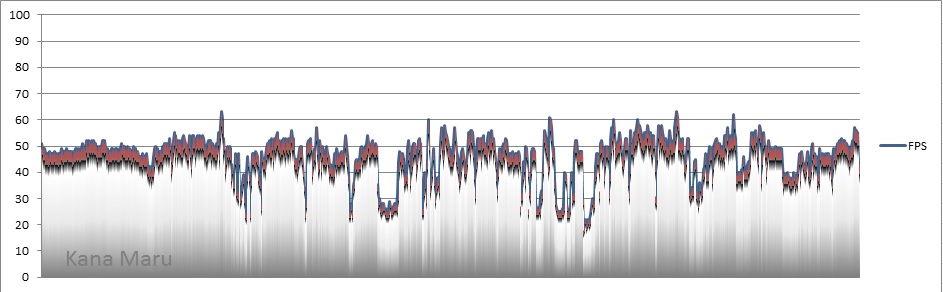

Metro: Last Light [Max Settings + SSAA x2] – 2560x1440

Gameplay Duration: 15 minutes 21 seconds

Captured 64,038 frames

FPS Avg: 47fps

FPS Max: 70fps

FPS Min: 16fps

FPS Min Caliber ™: 16fps

Frame time Avg: 21.2ms

There seem to be some optimization issues. The Fury X can handle the game, but the game randomly dips in frame rate. In one particular room, half of the room runs in the high 50s [fps] @ 4K SSAA x4. However, if I start to turn, the fps will dip to 10-15 for no reason at all. This is an Nvidia "The way it's meant to be played" title, so maybe that could have something to do with it. It could also be that Fury X needs an optimization patch. Fury X has done wonders with some of the most graphically harsh titles on the market. That isn't the case with Metro: Last Light. There's definitely spiking in the FPS and frame time.

Notice how the 1440p SSAA x2 frame rate matches the 4K SSAA x2 frame rate. The Fury X running @ 1440p also managed to process nearly 22,000 more frames than it did running at 4K. The play time is about the same. Also, if you check the charts above you'll see the obvious FPS spiking. There are definitely some issues that need to be addressed. The game runs much smoother with SSAA off. You'll get many more stable frames as well.

Real Time Benchmarks™

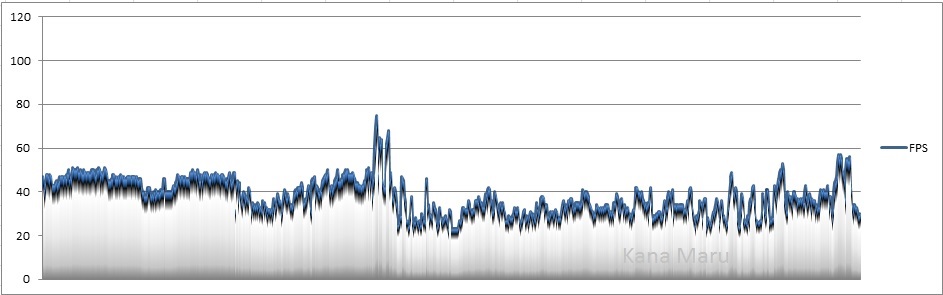

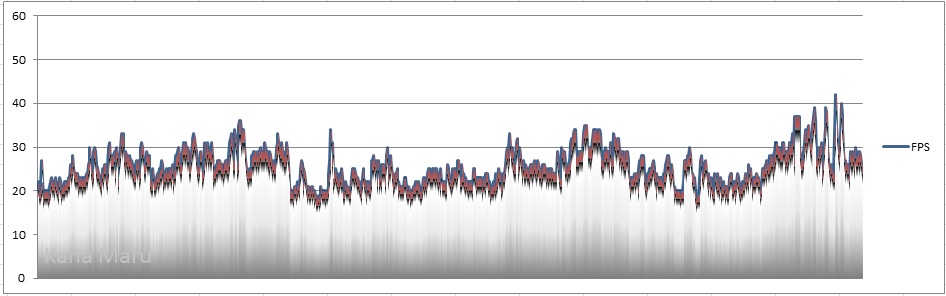

Middle-earth: Shadow of Mordor [Ultra Setting + 6GB Texture Pack] – 3840×2160 [4K]

Gameplay Duration: 25 minutes

Captured 71,944 frames

FPS Avg: 48fps [47.84]

FPS Max: 73fps

FPS Min: 16fps

FPS Min Caliber ™: 23fps

Frame time Avg: 20.9ms

I thought I’d replay some SoM to see how the Fury X’s 4GB of HBM handles the texture pack that calls for 6GB of VRAM. At 4K the game is very playable. The experience was smooth and I noticed no input lag or issues while playing. There was no micro stutter. Even though this game requires a lot of horsepower to play @ 1440p & 1600p, the Fury X temperatures were great: well below 60C throughout my Real Time Benchmark. The built-in benchmark was spot on, giving me an average of 48.73fps. Compared to my 2GB GTX 670s the experience was much better since I didn’t have to lower any graphical settings to enjoy this beautiful game. I’m running the Fury X with stock settings. I can only imagine how much better it will be once the OC limitations are removed.

Batman: Arkham Knight [100% Maxed + Nvidia GameWorks Enabled] – 3840×2160

Gameplay Duration: 22 minutes 2 secs

Captured 57,723 frames

FPS Avg: 38fps [38.42]

FPS Max: 79fps

FPS Min Caliber ™: 25fps

Frame time Avg: 26.0ms

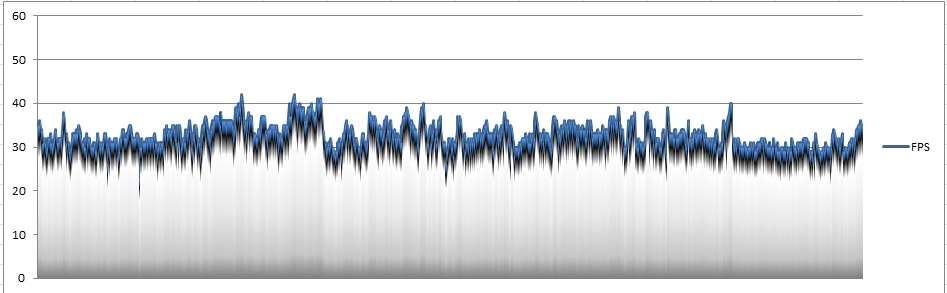

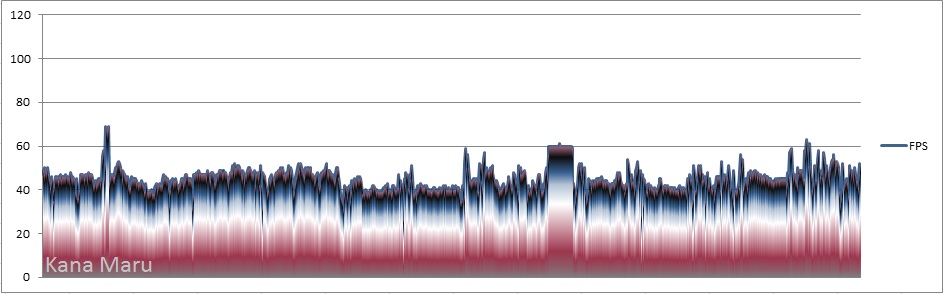

Batman: Arkham Knight [100% Maxed + Nvidia GameWorks Enabled] – 2560x1440

Gameplay Duration: 9 minutes 18 secs

Captured 43,792 frames

FPS Avg: 79fps

FPS Max: 155fps

FPS Min Caliber ™: 49fps

Frame time Avg: 12.7ms

Batman: Arkham Knight was patched on September 3rd, 2015. There are many more options to select and the “Texture Resolution” setting has been unlocked to “High”. Initially you couldn’t max out the settings and were stuck with medium. The only thing I had to change was the Max FPS. 90fps was fine for 4K, but I set it much higher for 1440p. The performance at 4K and 1440p was great. The framerate was steady. I was definitely surprised at the 4K performance. The game is simply gorgeous and plays very well. My GPU temps never went above 45C and stayed around 40C-42C. Although I exceeded the Fury X’s 4GB I still had no issues running 4K. The patch has definitely improved the Batman: AK experience. I just hate that it took them so long to fix the game. This is just another reason to never pre-order. I actually purchased this game on Day 1.

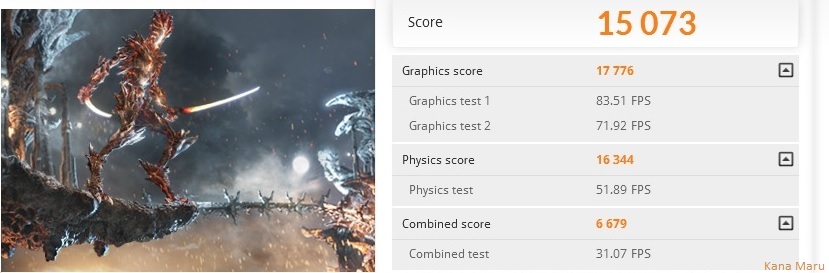

Synthetic Benchmarks

3DMark Fire Strike

Score: 15,073

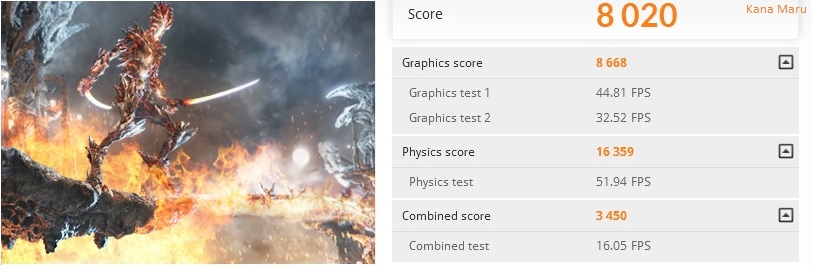

3DMark Fire Strike Extreme

Score: 8,020

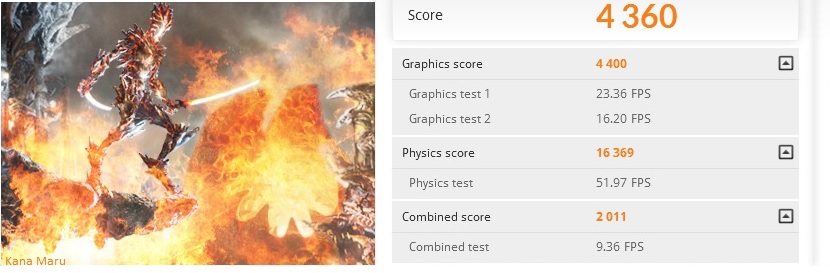

3DMark Fire Strike Ultra

Score: 4,360

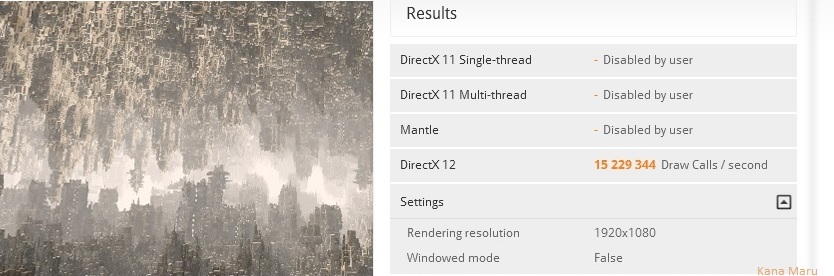

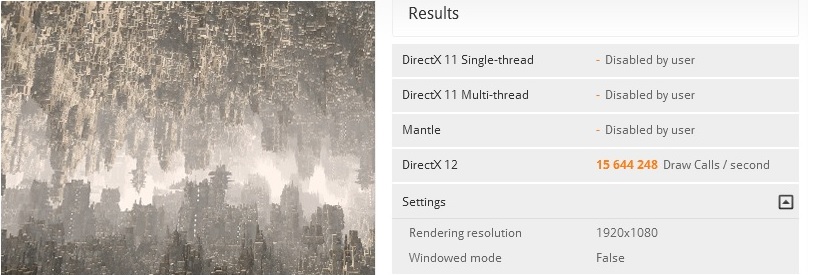

3DMark Feature Test - API Overhead

Score: 15,229,344

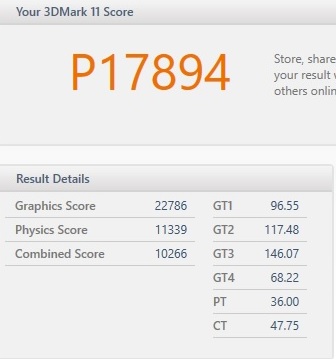

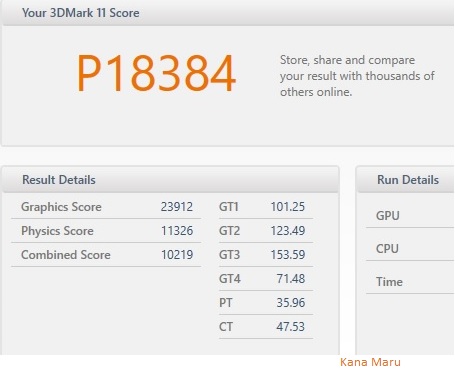

3DMark 11 - Performance

P17894


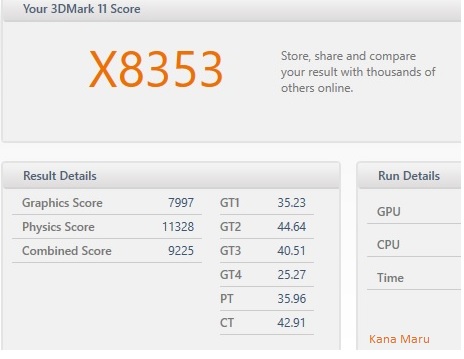

3DMark 11 - Extreme

X7974


I will be adding more benchmarks soon. I'll also add my in-game benchmarks/benchmark tools here as well.

FURY X OC

Let's see how a limited Fury X core overclock performs

I’ve finally had more time to overclock and run a few synthetic benchmarks. There isn’t much room for overclocking since AMD has these cards locked down pretty tight. HBM is currently locked and can’t be overclocked, but some gamers have found ways to bypass this limitation. The core voltage is also locked and the power target can only be increased up to 50%.

I’m hoping AMD unlocks the Fury X soon and I’m sure they locked it down so people wouldn’t start overclocking the heck out of these cards on day 1. That could lead to a lot of complaints and damage. Overclocking is just one issue that people talk about when comparing the Fury X to the 980 Ti. I will show the Fury X overclock results and then compare the OC Fury X results to the 980 Ti overclock results with another X58 user. I will also focus on the Graphic and Combined score.

So despite the Fury X OC limitations, I was able to overclock to 1125MHz on the core. That’s a 7.14% increase over the stock settings. I’m not sure how stable the overclock is, but I’m simply running synthetic benchmarks, so hopefully everything stays stable in the future. I'm used to overclocking much higher with previous GPUs. However, the Fury X can maintain its max core clock easily without performance issues or downclocking due to heat.

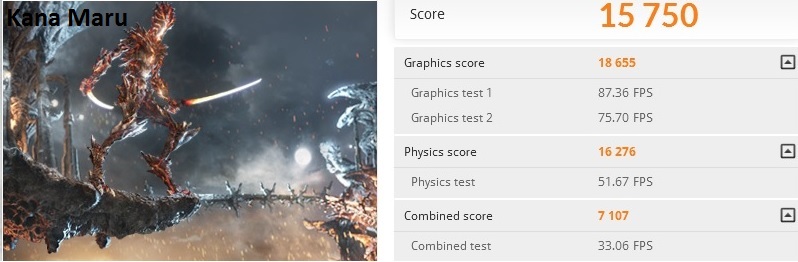

3DMark Fire Strike Performance

Performance [Core 1125Mhz]: 15,750

This is a 4.5% increase over the Fury X stock results. That's a 677 point increase for a minor core clock increase.

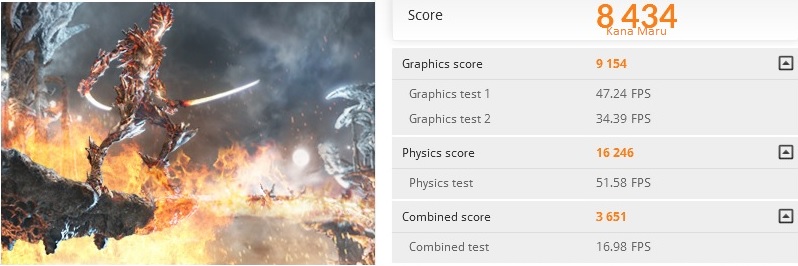

3DMark Fire Strike Extreme

Extreme: 8,434

This is a 5.2% increase over the Fury X stock results.

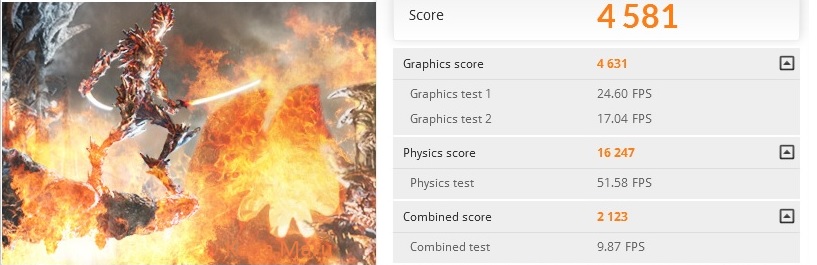

3DMark Fire Strike Ultra

Ultra: 4,581

This is a 5% increase over the Fury X stock results.

3DMark Feature Test - API Overhead DX12

DX12 Feature Test: 15,644,248

This is a 3% increase over the Fury X stock results.

3DMark 11 - Performance

3DMark 11 Performance: 18,384

This is a 3% increase over the Fury X stock results.

3DMark 11 - Extreme

3DMark 11 Extreme: 8,353

This is a 5% increase over the Fury X stock results.

I only slightly bumped up the core frequency and left everything else at default settings. The increase was decent. On the next page I will be comparing stock and overclocked X58-Fury X vs X99-980 Ti.
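For anyone who wants to double check the percentages, here is a small sketch that recomputes each gain from the stock and overclocked results listed in this review. The function name is just illustrative.

```python
def pct_increase(stock: float, overclocked: float) -> float:
    """Percentage gain of the overclocked result over the stock result."""
    return (overclocked - stock) / stock * 100.0

# (stock, overclocked) pairs taken from the results in this review.
results = {
    "Core clock (MHz)": (1050, 1125),
    "Fire Strike": (15_073, 15_750),
    "Fire Strike Extreme": (8_020, 8_434),
    "Fire Strike Ultra": (4_360, 4_581),
    "API Overhead DX12": (15_229_344, 15_644_248),
    "3DMark 11 Performance": (17_894, 18_384),
    "3DMark 11 Extreme": (7_974, 8_353),
}

for name, (stock, oc) in results.items():
    print(f"{name}: +{pct_increase(stock, oc):.1f}%")
```

Every benchmark scales a bit less than the 7.14% core clock bump, which isn't surprising with the memory clock and voltage left untouched.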

Fury X vs 980 Ti [Stock Settings]

Nvidia's latest affordable flagship vs AMD's Fiji & HBM combo

Nvidia has had a comfortable lead for a while now. AMD has been on the offensive and is hoping to gain more market share. With the release of the Fury, Fury X, incoming Fury Nano and boosted rebrands, AMD is hoping to spark excitement. Nvidia has had better optimized DX11 drivers for some time now. AMD has stayed consistent over time. Nvidia has much more money so they can hire the best talent and spend more on R&D. Both GPU makers have different architecture standards.

Despite AMD’s DX11 issues they have always been competitive, keeping Nvidia moving forward while bringing good prices to the market. This definitely benefits the consumer. Nvidia released the 980 Ti to rival the Fury X. Shortly after, there were many 3rd-party options that sold out quickly. AMD seemed unfazed and continued their marketing and release. It is apparent at this point that AMD is banking hard on HBM and DirectX 12, which is based heavily on Mantle. I will be comparing my reference Fury X to a reference 980 Ti, both stock and overclocked.

My Gaming Rig Specs:

Motherboard: ASUS Sabertooth X58

CPU: Xeon X5660 @ 4.8GHz

CPU Cooler: Antec Kuhler 620 watercooled - Pull

GPU: AMD Radeon R9 Fury X 4GB HBM Watercooled - Push

RAM: 12GB DDR3-1675MHz [3x4GB]

SSD: x2 128GB RAID 0

HDD: x4 Seagate Barracuda 7,200rpm High Performance Drives [x2 RAID 0 setup]

PSU: EVGA SuperNOVA G2 1300W 80+ GOLD

Monitor: Dual 24inch 3D Ready – Resolution - 1080p, 1440p, 1600p, 4K [3840x2160] and higher.

OS: Windows 10 Pro 64-bit

PCIe: 2.0

Comparison GTX 980 Ti Rig:

Motherboard: MSI X99S XPower AC

CPU: Core i7 5960X @ 4.4GHz 8 Core

GPU: GTX 980 Ti 6GB GDDR5

RAM: 16GB DDR4-2133MHz [4x4GB]

PSU: 1200 Watts Platinum Certified Corsair AX1200i

Monitor: ASUS PQ321 native Ultra HD Monitor at 3840 x 2160

OS: Windows 8.1 64-bit

PCIe: 3.0

Note: The Fire Strike Ultra score was obtained with an i7 5820K CPU + GIGABYTE X99 Gaming G1 + DDR4 2666MHz + Windows 7 Ultimate x64 + PCIe 3.0

FireStrike Total Score [Performance]

GTX 980 Ti: 15,656

Fury X: 15,073

-3.9% difference.

Graphics Score [Performance]:

Fury X: 17,776

GTX 980 Ti: 17,042

+4.3% difference.

Combined Score [Performance]:

GTX 980 Ti: 7,809

Fury X: 6,679

-16.9% difference.

FireStrike Total Score [Extreme]

Fury X: 8,020

GTX 980 Ti: 7,841

+2.28% difference.

Graphics Score [Extreme]

Fury X: 8,668

GTX 980 Ti: 8,541

+1.49% difference.

Combined Score [Extreme]:

GTX 980 Ti: 3,701

Fury X: 3,450

-7.27% difference.

FireStrike Total Score [Ultra]

Fury X: 4,360

GTX 980 Ti: 3,966

+9.93% difference.

Graphics Score [Ultra]:

Fury X: 4,400

GTX 980 Ti: 3,861

+14% difference.

There you have it. The Fury X comes out ahead in 5 of the 8 scores above. The two cards are nearly tied and each shows its strengths. However, these are only reference stock clocks and the 980 Ti is an overclocking beast. Synthetic benchmarks can only tell you so much about the performance, but this will do for comparisons since I don’t have a GTX 980 Ti to test personally. As you can see above, the Fury X is better in the graphics score department. The 980 Ti still has the better DX11 drivers on its side. 1440p and 4K seem to benefit the Fury X. The card was obviously made for 4K. Both cards sport the 4K logos.
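Here is a quick sketch that recomputes the gaps from the raw stock scores above. It assumes the convention these percentages appear to follow: the gap is expressed as a percentage of the lower score, with a positive sign when the Fury X is ahead. The function name is made up.

```python
def fury_lead_pct(fury_x: int, gtx_980_ti: int) -> float:
    """Signed gap as a percentage of the lower score; positive means the Fury X leads."""
    return (fury_x - gtx_980_ti) / min(fury_x, gtx_980_ti) * 100.0

# (Fury X, GTX 980 Ti) stock scores from the comparison above.
stock_scores = {
    "Total [Performance]": (15_073, 15_656),
    "Graphics [Performance]": (17_776, 17_042),
    "Combined [Performance]": (6_679, 7_809),
    "Total [Extreme]": (8_020, 7_841),
    "Graphics [Extreme]": (8_668, 8_541),
    "Combined [Extreme]": (3_450, 3_701),
    "Total [Ultra]": (4_360, 3_966),
    "Graphics [Ultra]": (4_400, 3_861),
}

for name, (fury, ti) in stock_scores.items():
    print(f"{name}: {fury_lead_pct(fury, ti):+.2f}%")
```

The Combined scores, which lean on the CPU as well as the GPU, are where my older platform gives up the most ground.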

I'm benching against an X99 Haswell-E 8-core [5960X] and 6-core [5820K], and it definitely shows in the Combined scores. The newer architectures, CPUs and PCIe 3.0 definitely show, along with Nvidia’s superior DX11 drivers. On the next page I will be comparing the overclocked R9 Fury X vs the overclocked GTX 980 Ti.

Fury X vs 980 Ti [Overclocked]

The 980 Ti was able to overclock up to 1477MHz. I was only able to push my Fury X to 1125MHz. The 980 Ti core is clocked 31.3% higher than my Fury X. The 980 Ti memory also increased from the stock 7012MHz to 7818MHz. The Fury X is still sitting at 500MHz, but thanks to its 4096-bit interface it still moves more data than the 980 Ti’s GDDR5: 512 GB/s versus roughly 375 GB/s for the overclocked GDDR5 on a 384-bit bus. Leaving the Fury X at 500MHz should be fine. Let’s take a look at the Fire Strike Performance benchmark.

FireStrike Total Score [Performance]

GTX 980 Ti: 17,420

Fury X: 15,750

-10.6% difference.

Graphics Score [Performance]:

GTX 980 Ti: 20,225

Fury X: 18,655

-8.4% difference.

Combined Score [Performance]:

GTX 980 Ti: 7,572

Fury X: 7,107

-6.54% difference.

The 980 Ti really picks up lost ground with the 37.72% overclock over the 980 Ti stock frequency. I thought the increase would be much higher. The overclock did eliminate any lead that AMD had. Still, the increase isn’t “that” dramatic. We aren’t seeing any major spreads here. The 980 Ti with a high overclock nets 10.6% on Total Score, 8.4% on Graphics Score and 6.54% on Combined. There’s another issue that must be addressed.

That issue is heat. According to the reviewer, the overclocked 980 Ti went well into the 80s and even into the 90s [Celsius]. The VRMs were scorching hot. Obviously this isn’t a problem for the watercooled Fury X. There are 3rd-party alternatives out there to alleviate the heat issues. There was no trading of blows after overclocking the 980 Ti. Obviously everything went in Nvidia’s favor due to the massive core frequencies.

At the end of the day I’m still satisfied with AMD’s Radeon R9 Fury X. It doesn’t require any overclock, let alone a huge one, to get great 1440p – 1600p & 4K gameplay. There’s no reason to put a ton of stress on the core, the VRMs and other components. It also runs silently. Nvidia released the 980 Ti with a lot of headroom to entice purchasers ahead of the Fury X release. This is the first time I haven’t focused solely on overclocking, since the results were great out of the box. Judging by the results it appears that I have made a fine move. Now Fury X users need to wait for mature DX11, DX12 & Vulkan drivers and games for our GPUs.

I will update more comparisons soon.

We have finally reached the end of my review. The Fury X has definitely impressed me. Nearly everything about this card is great. All of the games I played at 4K are definitely playable and smooth. I'm sure if you Crossfire X the Fury X you'll get well over 60fps at 4K and possibly higher resolutions. If you are in the market for a 1080p card, this isn't the card for you. This card is overkill for 1080p. AMD has aimed for 4K and actually proved that they are 4K ready. I know that pump/coil whine was an issue during the Fury X’s initial release. I guess a lot of gamers need pure quietness when running their PC. Well, that issue was fixed ASAP and I have no problems with coil whine.

Another major improvement is the GPU temperature. I have my Fury X pulling warm air from inside the case. The temps are still amazing. While benchmarking in 3DMark Fire Strike Extreme the temps averaged 53C. While I was playing Middle-earth: Shadow of Mordor @ 1440p Ultra + 6GB Texture Pack, the temps stayed in the high 30s and low 40s. The temps never went above 46C! That's simply amazing. I ran all of my tests with stock settings. I feel that I made a great decision with my Fury X purchase. This card should last a few years. As long as AMD continues to optimize their drivers and keep HBM1 optimized, we shouldn't have many problems with future titles.

There are a few cons I must get to. The first issue I had with the card was the radiator. I love the fact that we have a water-cooled solution, but the radiator has a reservoir attached to it. I really wanted to place the radiator at the top of my case [NZXT Phantom 410] next to my CPU radiator. Well, unfortunately the reservoir prevents me from doing so. I wish they had gone with a traditional radiator without the reservoir, or at least made that an option. It's a minor complaint, but it was still an issue for me. I wanted to bring in cooler air from outside of the case. Who knows how low my temps could be if I could. I'm sure they had no other place to install the reservoir except on the radiator. Most modern cases shouldn't have many problems getting it installed.

Another issue I'm having is the fact that overclocking is extremely limited. AMD really has this card locked down. Recently someone hacked some software and overclocked the crap out of the Fury [modded to Fury X] with LN2. I know that these cards have more power. AMD probably has the cards locked down so people won't go crazy with overclocking on Day 1. Hopefully they loosen up on some of the restrictions sooner rather than later. You can overclock the Fury X, but don't expect much. The cards are beasts with stock settings, so many people probably won't "need" to overclock anyway.

Other than those few negatives everything is pretty much positive. AMD has done a fantastic job and improved on their previous flaws. I'm not a fanboy either. I recently retired my dual Nvidia GTX 670s for various reasons, but the biggest reason was the fact that I needed more horsepower and more than 2GB of VRAM. I had a choice to go GTX 980 Ti or Fury X. Well, I wanted the EVGA Hybrid 980 Ti, but it was constantly sold out and the price is $749.99. The EVGA cooler alone costs $99.99. This made the $649.99 Fury X look even better. Although 980 Tis overclock better than the Fury X, you are pushing the card way too hard. Power consumption goes up as well as the heat. You'll end up downclocking due to heat at some point anyway, or degrading the card. In the end this was everything many complained about when it was AMD [power consumption and heat]. The Fury X does everything I want to do. X58 users who want a Fury X shouldn't fear. Our platform is still great and still viable. Thank you for reading my review.

Feel free to leave a comment or start a discussion below.