Hitman DirectX 12 Fury X Benchmarks

I've finally been able to get my hands on a DirectX 12 title. Ashes of the Singularity was the first DX12 title to feature benchmarks, but it’s still in Early Access at the moment. Ashes of the Singularity actually releases on March 31st. Hitman has released with DirectX 11 and DX12 support on Day 1. DX11 was available during the Hitman Beta. AMD really showed their strengths in DX11 with this title and now everyone wants to see DX12 results. I’ve been playing the Hitman series for many years and I love the franchise.

The latest release has been met with criticism across the board. From the “intro price” [14.99], to the episodic releases, to the game not releasing at midnight on March 11th on Steam, Hitman has had plenty of people up in arms. This isn’t the first episodic title I’ve come across, so it doesn't bother me as much. There was a game on the PS3 named Siren that originally released in an episodic fashion. With all of that being said, Hitman fans can finally rejoice. DirectX 12 has met some criticism, but overall it benefits the future of PC gaming. I’m making this intro longer than it really should be, so now I’ll give you what you came for: PC specs and benchmarks.

Gaming Rig Specs:

Motherboard: ASUS Sabertooth X58

CPU: Xeon X5660 @ 4.6Ghz

CPU Cooler: Antec Kuhler 620 Watercooled - Pull

GPU: AMD Radeon R9 Fury X Watercooled - Push

RAM: 24GB DDR3-1600Mhz [6x4GB]

SSD: Kingston Predator 600MB/s Write – 1400MB/s Read

PSU: EVGA SuperNOVA G2 1300W 80+ GOLD

Monitor: Dual 24inch 3D Ready – Resolution - 1080p, 1440p, 1600p, 4K [3840x2160] and higher.

OS: Windows 10 Pro 64-bit

GPU Drivers: Crimson 16.3 [3/9/2016]

GPU Speed: AMD R9 Fury X @ Stock Settings – Core 1050Mhz

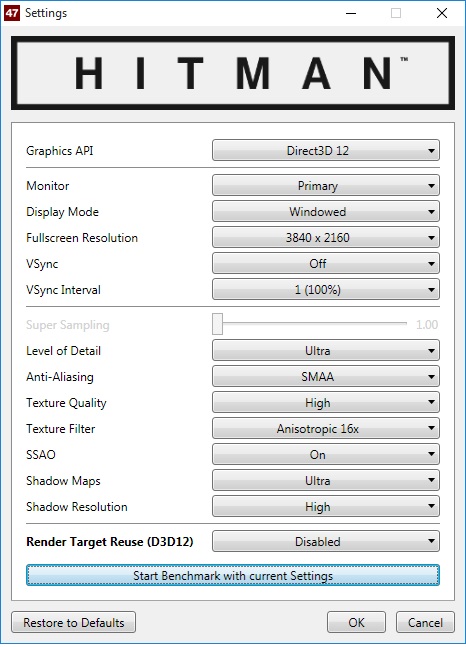

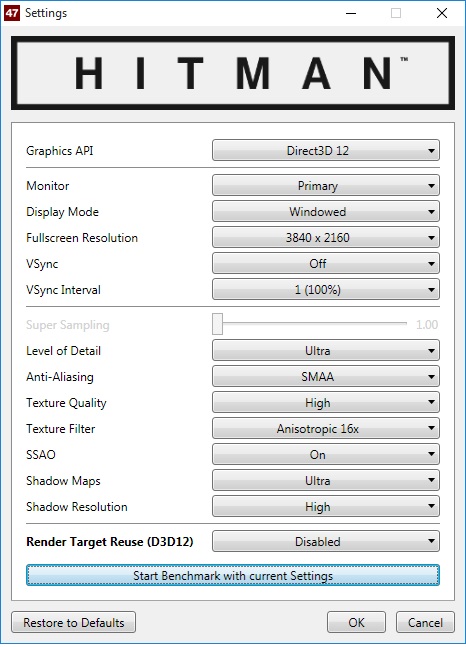

Hitman DirectX 12 Internal Benchmark Tool

First I'll start with the built-in benchmark tool that is provided. Usually benchmark tools aren't accurate and don't give a good representation of actual gameplay. We will see how accurate the benchmark tool is when compared to my Real Time Benchmarks™. Let's take a look at the settings and the benchmark:

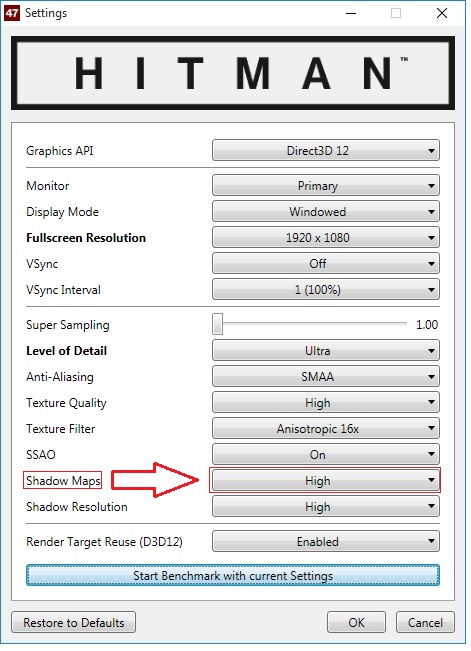

"Render Target Reuse" = Disabled

The first issue I noticed was that there is a setting named "Render Target Reuse", which is a feature only accessible from the DirectX 12 API. This setting had massive issues with the gameplay and caused tons of micro-stutter when Disabled. High frame times also occurred when this setting was disabled. These issues definitely hurt the benchmark results. The graphical settings are at the highest gamers can push them and "Render Target Reuse" is set to Disabled, as you can see.

Check out the benchmark below:

Fury X - Hitman Internal Benchmark Tool

3285 frames

28.97fps Average

4.83fps Min

65.35fps Max

34.51ms Average

15.30ms Min

206.97ms Max

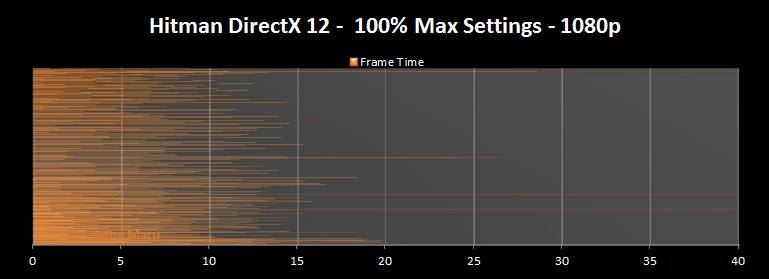

Those aren't the worst stats, but there's more that isn't seen. As I mentioned above, there was tons of micro-stutter. The Frame Time was all over the place. There were only 3285 frames rendered as well. This is definitely not a good experience and I urge all Fury X users to "Enable" this feature or set it to "AUTO". The Disabled setting definitely shouldn't be used, and I've decided to leave it set to Enabled instead of AUTO just in case. Let's see what happens when I enable the feature.

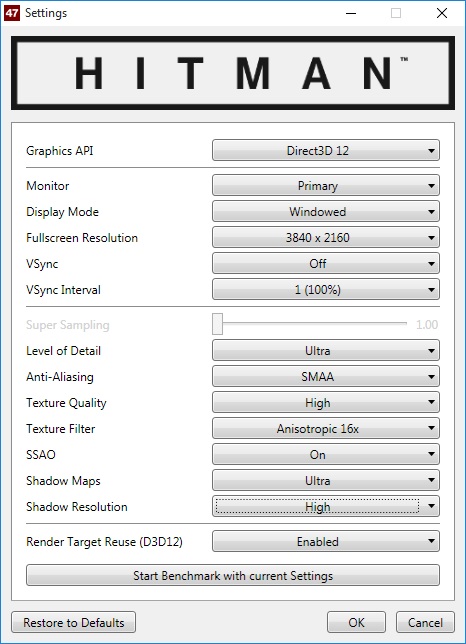

"Render Target Reuse" = Enabled

Fury X - Hitman Internal Benchmark Tool

4081 frames

36.02fps Average

5.24fps Min

65.61fps Max

27.76ms Average

15.24ms Min

190.95ms Max

As you can see there are huge gains across the board. The frame rate and the number of rendered frames both increased by 24%. The Frame Time is much better. The benchmark was much more pleasant, with much less of the micro-stutter and other issues found with Render Target Reuse Disabled. So once again I highly suggest that AMD Fury X users keep Render Target Reuse Enabled.

With that out of the way, let's look at how the internal benchmark performs with different settings and resolutions.
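The 24% figure can be double-checked from the two runs above. Here is a minimal Python sketch; the helper name `pct_gain` is my own and not part of any benchmark tool. Dividing the rendered frames by the average FPS also suggests that the internal benchmark runs for roughly the same length of time in both cases, which would explain why the frame count and the frame rate rise by the same amount.

```python
def pct_gain(before: float, after: float) -> float:
    """Relative gain of `after` over `before`, in percent."""
    return (after - before) / before * 100.0


# Internal benchmark at 4K: Render Target Reuse Disabled vs Enabled
frames_off, frames_on = 3285, 4081
fps_off, fps_on = 28.97, 36.02

frames_gain = pct_gain(frames_off, frames_on)
fps_gain = pct_gain(fps_off, fps_on)

# frames / average fps = approximate run length in seconds
duration_off = frames_off / fps_off
duration_on = frames_on / fps_on

print(f"frames: +{frames_gain:.2f}%  fps: +{fps_gain:.2f}%")
print(f"run length: {duration_off:.1f}s vs {duration_on:.1f}s")
```

Both runs come out at a little over 113 seconds, so more frames rendered simply means a higher average frame rate over the same stretch of benchmark.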

Hitman Internal Benchmark Tool GPU Results - DirectX 12

| 1920x1080 | 2560x1440 | 3200x1800 | 3840x2160 |
| --- | --- | --- | --- |
| 10112 frames | 8164 frames | 4976 frames | 4081 frames |
| 89.43fps Average | 72.30fps Average | 43.96fps Average | 36.02fps Average |
| 8.79fps Min | 10.14fps Min | 8.30fps Min | 5.24fps Min |
| 151.52fps Max | 115.43fps Max | 83.03fps Max | 65.61fps Max |
| 11.18ms Average | 13.83ms Average | 22.75ms Average | 27.76ms Average |
| 6.60ms Min | 8.66ms Min | 12.04ms Min | 15.24ms Min |
| 113.83ms Max | 98.66ms Max | 120.48ms Max | 190.95ms Max |
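The FPS and millisecond rows in the table above are two views of the same measurement: a frame time in milliseconds is 1000 divided by the frame rate. A short Python sketch (the function name `fps_to_ms` is just for illustration) reproduces the average frame times from the average FPS values, and shows that the fastest frame time pairs with the highest FPS.

```python
def fps_to_ms(fps: float) -> float:
    """Convert a frame rate to the time spent on one frame, in milliseconds."""
    return 1000.0 / fps


# Average FPS per resolution, taken from the table above
avg_fps = {
    "1920x1080": 89.43,
    "2560x1440": 72.30,
    "3200x1800": 43.96,
    "3840x2160": 36.02,
}

for resolution, fps in avg_fps.items():
    print(f"{resolution}: {fps:.2f} fps -> {fps_to_ms(fps):.2f} ms")

# The Max FPS at 1080p corresponds to the Min frame time at 1080p
fastest_1080p_ms = fps_to_ms(151.52)
print(f"151.52 fps -> {fastest_1080p_ms:.2f} ms")
```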

As you can see the stock Fury X performs very well in DirectX 12. The minimum FPS are misleading. The internal benchmark tool has some issues that it needs to work out. When I benchmarked actual gameplay I never got the FPS mins shown above. Next I'll modify a few settings to see how much performance I can squeeze out at 4K when lowering some settings. I came up with this:

| 3840x2160 |
| --- |
| 4997 frames |
| 44.27fps Average |
| 8.80fps Min |
| 71.11fps Max |
| 22.59ms Average |
| 14.06ms Min |
| 113.67ms Max |

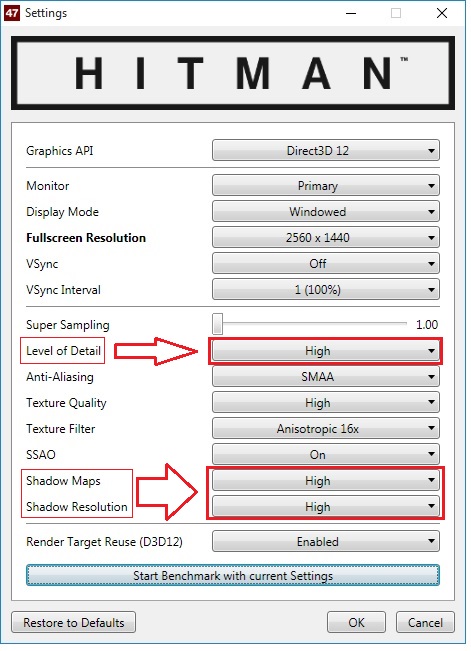

I lowered the LOD to High, completely disabled AA, and dropped Shadow Maps to High. The game still looks fantastic and I increased my FPS by nearly 23%. The rendered frames increased by 22.44%.

I'll be sure to update this post with the DX11 results in the coming days. I'll also be sure to add overclock settings soon as well. Now let's see how well the Fury X performs in DirectX 12 when using my own benchmark program. I had a few issues with DX12 and benchmarking the GPU output, but those issues have been resolved. I spent most of the day programming and ensuring that everything was accurate and working properly. Everything is looking good. Just as a reference I include something that I like to call "FPS Min Caliber". Check the next page for a short explanation and my Real Time Benchmarks™.

-What is FPS Min Caliber?-

You’ll notice that I added something named “FPS Min Caliber”. Basically, FPS Min Caliber is something I came up with to differentiate typical low frame rates from the absolute FPS minimum, which could simply be a data loading point during gameplay etc. The FPS Min Caliber™ is basically my way of letting you know the lowest FPS average you’ll see during gameplay 1% of the time out of 100%. The minimum fps [FPS min] can be very misleading. FPS min is what you'll encounter only 0.1% of the time out of 100%. These results vary greatly, even on the same PC. This normally occurs during loading, saving etc. I think it will be better to focus on what most gamers will experience. Obviously the average FPS and Frame Time is what you'll encounter 99% of your playtime. I think it's a feature you'll learn to love. I plan to continue using this in the future as well.
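To show the idea in code, here is a rough Python sketch of a "1% low" style calculation. This is an assumption for illustration only: it is not the actual benchmark program used for the numbers in this article, the function name `percentile_low_fps` is made up, and the frame times are synthetic. It sorts the per-frame FPS values and picks the value that about 1% of frames fall at or below, then compares it with the absolute minimum.

```python
import math


def percentile_low_fps(frame_times_ms, percent):
    """Nearest-rank percentile: FPS that about `percent` % of frames fall at or below."""
    fps = sorted(1000.0 / t for t in frame_times_ms)  # slowest frames first
    rank = max(1, math.ceil(len(fps) * percent / 100.0))
    return fps[rank - 1]


# Synthetic capture (made-up data): mostly 25 ms frames, a few 40 ms dips,
# and a single 200 ms hitch such as an autosave.
frame_times_ms = [25.0] * 989 + [40.0] * 10 + [200.0]

absolute_min = min(1000.0 / t for t in frame_times_ms)
one_percent_low = percentile_low_fps(frame_times_ms, 1)
average_fps = len(frame_times_ms) / (sum(frame_times_ms) / 1000.0)

print(f"FPS min: {absolute_min:.1f}")
print(f"1% low:  {one_percent_low:.1f}")
print(f"FPS avg: {average_fps:.1f}")
```

In this made-up capture a single autosave hitch drags the absolute minimum down to 5fps, while the 1% figure sits at 25fps, which is much closer to what the dips actually feel like during play.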

Real Time Benchmarks ™

Real Time Benchmarks™ is something I came up with to differentiate standalone benchmark tools from actual gameplay. Sometimes in-game benchmark tools don't provide enough information. The benchmarks are always in-game instead of using benchmark tools provided by the developers. Usually the in-game results are different from the built-in benchmark tool.

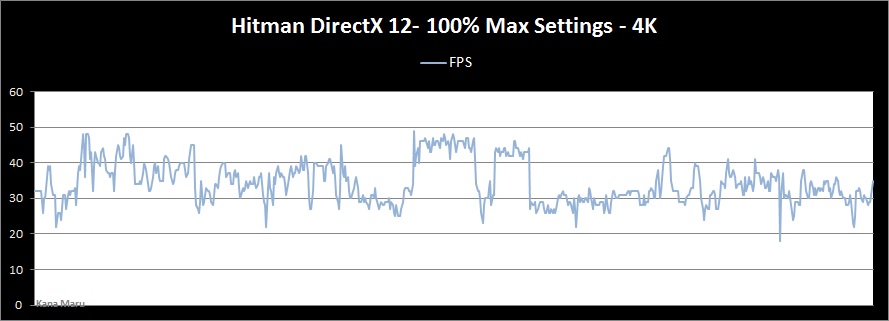

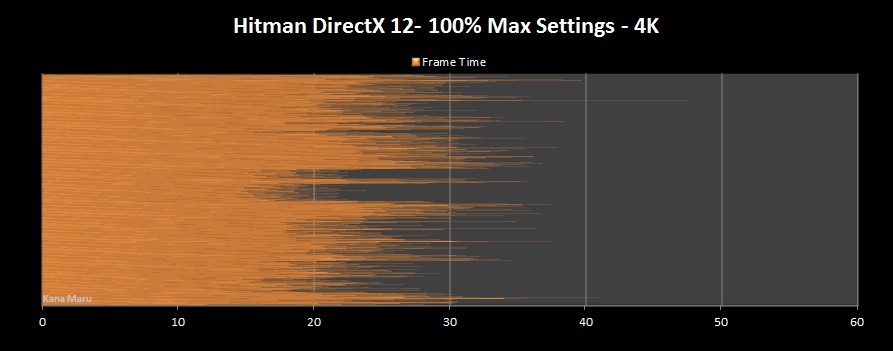

Hitman [100% Maxed Settings + DirectX 12] – 3840x2160 [4K]

Training Level with Yacht

AMD R9 Fury X @ Stock Settings [Crimson 16.3 Beta Drivers]

RAM: DDR3-1600Mhz

FPS Avg: 36fps

FPS Max: 49fps

FPS Min: 18fps

FPS Min Caliber™: 26fps

Frame time Avg: 28.9ms

Fury X Info:

GPU Temp Avg: 34c

GPU Temp Max: 37c

GPU Temp Min: 27c

CPU info:

CPU Temp Avg: 41.1c

CPU Temp Max: 50c

CPU Temp Min: 36c

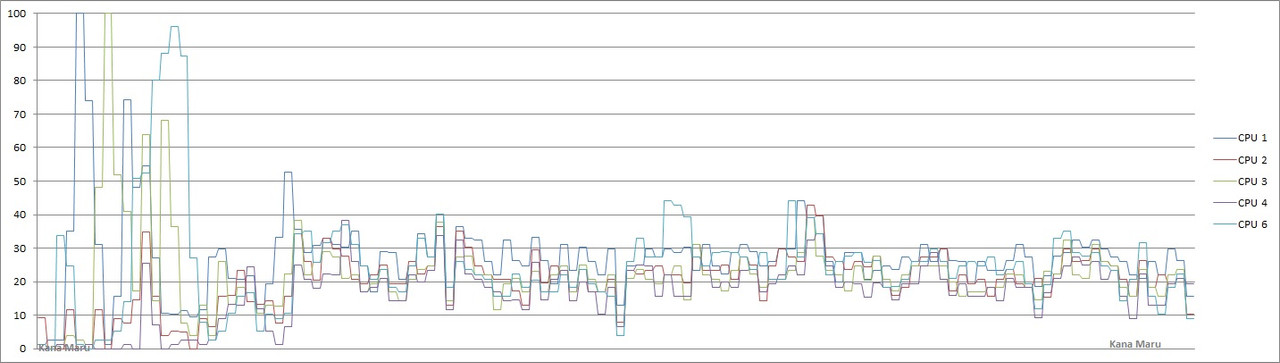

CPU Usage Avg: 23.92%

CPU Usage Max: 50.8%

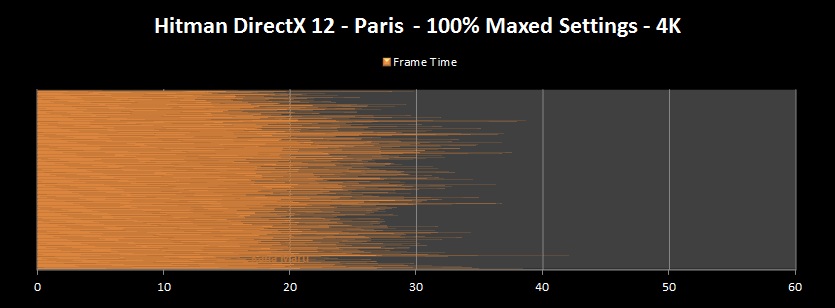

Let me be the first to say that I've never seen a chart so clean at 4K or any high resolution for that matter. Usually there's a lot going on in the frame time chart. Normally the data is rotten data that can't be taken seriously, but now in DX12 it appears the CPU & GPU are definitely working in unison. The parallel functions in DX12 are definitely helping at higher resolutions. The Fury X was already a beast at high resolutions, but this is simply great. During my gameplay there was no screen tearing and no micro-stutter. The game was very smooth and there were no input issues either. The last game where my Real Time Benchmarks™ and the built-in benchmark tool had the same results was when I benchmarked Shadow of Mordor in my Fury X review. You can check out my Fury X review from last year here:

Let's take a look at the CPU Usage:

As you can see all cores are working in unison.

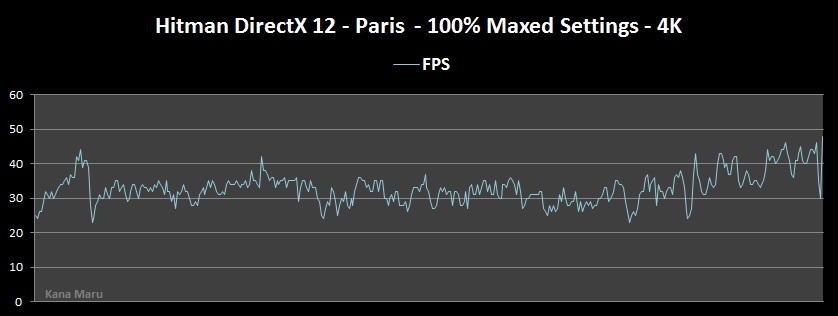

Hitman [100% Maxed Settings + DirectX 12] - 3840x2160 – 4K

Paris

AMD R9 Fury X @ Stock Settings [Crimson 16.3 Beta Drivers]

RAM: DDR3-1600Mhz

FPS Avg: 33fps

FPS Max: 48fps

FPS Min: 23fps

FPS Min Caliber™: 25.12fps

Frame time Avg: 30.3ms

Fury X Info:

GPU Temp Avg: 40c

GPU Temp Max: 42c

GPU Temp Min: 36c

CPU info:

CPU Temp Avg: 47.5c

CPU Temp Max: 57c

CPU Temp Min: 41c

CPU Usage Avg: 20%

CPU Usage Max: 40%

The Paris level is huge and there are tons of things going on. There are plenty of NPCs scattered across the level, which definitely gives any card a run for its money at 4K. In this case the Fury X performs well, but there was more noticeable micro-stuttering. This could be due to the engine loading different areas as you progress forward. There was no input lag and the game was very playable aside from a few hiccups. I will be digging deeper into this when I get all of the data available. Unlike the previous level above, Paris really shows what this title can throw at your system. I'm sure when patches are released the minor micro-stuttering will be addressed. I was able to get more performance out of my Fury X by simply lowering one setting. I lowered the "Shadow Resolution" setting from High to Medium while leaving all other settings maxed out.

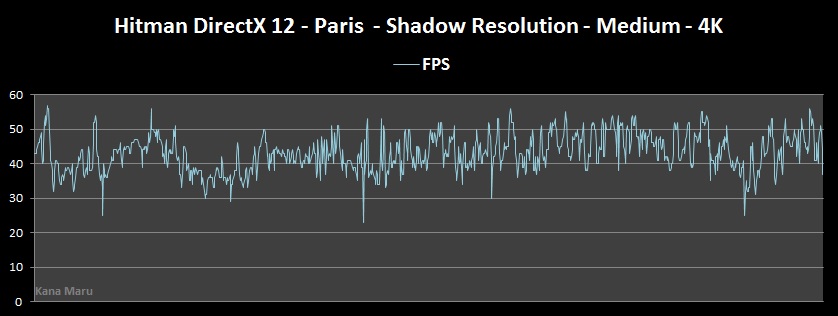

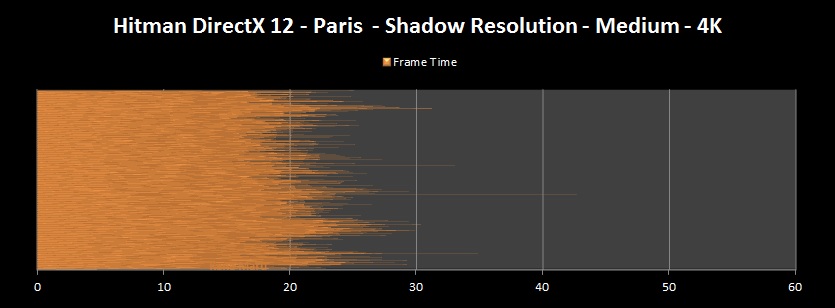

Hitman [Shadow Resolution Medium + DirectX 12] - 3840x2160 – 4K

Paris

AMD R9 Fury X @ Stock Settings [Crimson 16.3 Beta Drivers]

RAM: DDR3-1600Mhz

FPS Avg: 43fps

FPS Max: 57fps

FPS Min: 23fps

FPS Min Caliber™: 34fps

Frame time Avg: 23.2ms

Fury X Info:

GPU Temp Avg: 39c

GPU Temp Max: 41c

GPU Temp Min: 34c

CPU info:

CPU Temp Avg: 46c

CPU Temp Max: 53c

CPU Temp Min: 40c

CPU Usage Avg: 19.9%

CPU Usage Max: 35.5%

After lowering one setting ["Shadow Resolution" from High to Medium] I noticed that the performance greatly increased. The minor but random micro-stutter had basically disappeared. The game ran very smoothly. The image quality was still gorgeous. I will be taking a look at the vRAM and posting any differences I can find with charts.

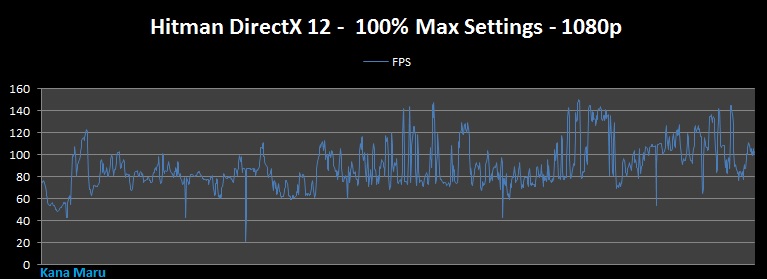

Hitman [100% Maxed Settings + DirectX 12] – 1920x1080

AMD R9 Fury X @ Stock Settings [Crimson 16.3 Beta Drivers]

RAM: DDR3-1600Mhz

FPS Avg: 89fps

FPS Max: 150fps

FPS Min: 21fps

FPS Min Caliber™: 56.27fps

Frame time Avg: 11.23ms

Fury X Info:

GPU Temp Avg: 44c

GPU Temp Max: 45c

GPU Temp Min: 42c

CPU info:

CPU Temp Avg: 46c

CPU Temp Max: 62c

CPU Temp Min: 57c

CPU Usage Avg: 45%

CPU Usage Max: 66%

I had to download a small update patch for Hitman. I have no idea what the 230MB patch addressed. The developers haven't posted any information. As you can see above the Fury X has no problems running 1080p. The results are better than I thought they would be. I actually got 3 more frames per second than the internal benchmark. That's slightly outside of my 3% margin of error. I ran around the courtyard and I made sure to visit all the rooms full of NPCs. The game only dips during certain areas when an event is about to happen or when the game decides to save. Other than that you'll have no dips below 53-54fps. There are more benchmarks to come. Check back regularly.

Hitman Fury X - AMD 4GB GPU - Optimization

After noticing my increase in performance from lowering one setting, I started to pay more attention to the Fury X's 4GB of vRAM. This isn't really a limitation per se, but it can be at high resolutions. So I have taken the time out of my day to optimize the game for you guys. Obviously you are free to do whatever you want, but my settings will ensure that your Fury X doesn't throttle. So what I have done is pushed the settings to the safest max. You always want to leave some headroom because Hitman levels are huge and there can be a ton of NPCs on screen at any given time. The NPCs move around the level, so the vRAM usage varies. Using my settings below will give you the BEST experience with your AMD 4GB GPUs. I've described what settings you should drop from the 100% maxed out settings.

| 1920x1080 |
| --- |
| 10369 frames |
| 91.54fps Average |
| 10.56fps Min |
| 149.55fps Max |
| 10.92ms Average |
| 6.69ms Min |
| 94.68ms Max |

Gaming at 1080p doesn't really require much. You won't be throttling that much if at all. You'll be better off maxing out the settings and enjoying the pretty graphics. There's a difference of 4fps between the optimized vRAM settings and the 100% maxed settings.

With the game running 100% maxed you'll be better off sacrificing those 4fps and running 86.20fps. That was my average, so in this case I'd just run the game 100% maxed if I were you.

| 2560x1440 |
| --- |
| 8596 frames |
| 76.15fps Average |
| 10.24fps Min |
| 117.39fps Max |
| 13.13ms Average |
| 8.52ms Min |
| 97.62ms Max |

If you have all of the settings maxed, I've highlighted the settings that need to be dropped for good frame rate and frame times with little to no micro-stutter. No worries. The game still looks awesome. You have AA enabled and SSAO will ensure the environment continues to look great. This will put you right below my vRAM safety net just in case there's a ton of enemies on screen at once. With a Fury X you'll be looking at around 80fps as I've shown above.

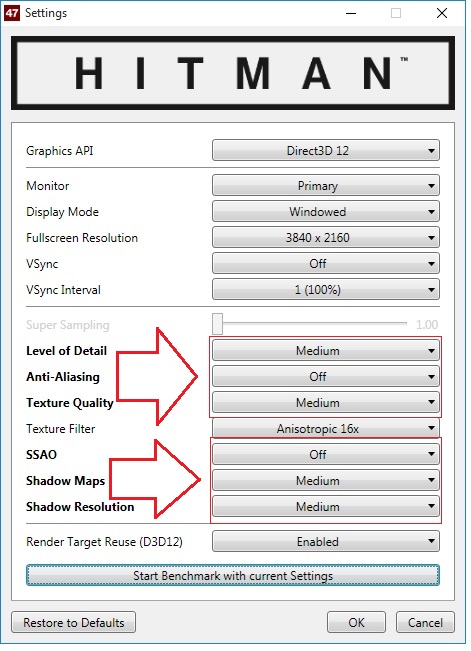

| 3840x2160 - 4K |
| --- |
| 7460 frames |
| 66.87fps Average |
| 10.21fps Min |
| 95.80fps Max |
| 14.95ms Average |
| 10.44ms Min |
| 97.96ms Max |

Keeping the vRAM below the safety net gets you an extra bonus, which is more than 60fps! You'll have to lower several settings to achieve that, as you can see above. Trust me, it's worth it. If you want to max everything out with a decent experience you can max everything and leave Shadow Maps set to High and you'll be looking at around 42fps or so. This info was on the previous page. However, the point of these optimizations is to lower the vRAM usage and prevent throttling and frame pacing issues. Feel free to mess around with the settings and let me know what you come up with.

Well that's all I have now for optimizations. I hope this will help someone. Remember all of the settings above will ensure that you do not exceed the 4GB vRAM limitation for a better experience while gaming in DX12. Things will continue to get better as we receive more patches and driver updates.

Hitman Update 1.1.0 - DirectX 12 Internal Benchmark Results

Hitman was recently patched and upgraded to 1.1.0. There’s some controversy around this particular update, but this entire title has been controversial since Square Enix announced it would release Hitman in an episodic fashion. AMD has also released their latest drivers [16.4.1]. We already know that AMD worked closely with IO Interactive to implement “Async Compute”, which is something that AMD hardware has supported since the 7000 series. As usual AMD was thinking ahead of time: GCN first released back in 2011/2012. Async Compute basically allows AMD GPUs to perform more work in parallel rather than the serial data structure we’ve been seeing in DX9/10/11 with a single main thread.

Other than a few special developers who actually built their engines around multi-core support, most games use only a single thread. Combine the single thread usage with the DX11 Draw Call limitation and you’ll easily reach a bottleneck. Modern CPUs are much faster, but GPUs are still more powerful and quicker. So basically the GPU spends a lot of its time “waiting” on the CPU to send data. If that data is being sent in a serial manner then powerful GPUs are wasting energy and performance. If the CPU is sending data constantly in a parallel manner, then you can have concurrent operations executing on the GPU as well. This ensures that the GPU always has something to process. This is the future of computing in general. CPUs are getting close to a point where concurrent operations and parallel programming must take advantage of the multi-core support we have had for many years now. Believe it or not, quad cores aren’t being fully utilized in a ton of apps.

You are probably wondering what the point is. Well the point is that AMD hardware is capable of running games better when using an asynchronous workflow. The other point is that a lot of PC gamers need to understand what DX12 and Vulkan are providing for the PC gaming community and innovation. This is something DX12 and Vulkan provide, thanks heavily to AMD's Mantle technology.
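To make the bottleneck argument a bit more concrete, here is a toy model in Python. It is only a sketch under loud assumptions: the function name, the per-draw-call CPU cost, the draw call count and the GPU time are all made-up illustration values, not measurements from Hitman or from any driver. It treats a frame as taking as long as the slower of the two sides, with the CPU submission work split evenly across threads.

```python
def frame_time_ms(draw_calls: int, cpu_us_per_call: float,
                  cpu_threads: int, gpu_ms: float) -> float:
    """Toy model: the frame takes as long as the slower side (CPU or GPU)."""
    # Hypothetical assumption: submission work divides evenly across threads.
    cpu_ms = draw_calls * cpu_us_per_call / 1000.0 / cpu_threads
    return max(cpu_ms, gpu_ms)


# Made-up workload: 10,000 draw calls at 2 microseconds each, 10 ms of GPU work.
for threads in (1, 4, 8):
    ms = frame_time_ms(10_000, 2.0, threads, gpu_ms=10.0)
    print(f"{threads} thread(s): {ms:.1f} ms per frame, {1000.0 / ms:.0f} fps")
```

With a single thread the CPU side is the limit in this model (20 ms per frame). With four threads the GPU becomes the limit at 10 ms, and adding more threads beyond that no longer helps.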

Update Patch 1.1.0 - Broken

The results that I originally posted came from the Internal Benchmarks that the devs supplied. I have run my own Real Time Benchmarks™ and it appears that the results aren't accurate. For example I checked the data and although the cores are working it appears that the settings aren't being set properly. My vRAM usage @ 4K was only 3129MB. As we all know at 4K the 4GB vRAM can throttle performance. As far as I can tell after setting and saving the graphical options there appears to be no difference in the "actual" settings. SMAA appears to be the only working option. I'll update the results once the devs address this issue and release another patch.

Even though I'll need to benchmark everything again I can still use the current data. Since all of the graphical settings are the same I can use this to see how well the Fury X performs with the DX11 Draw Call limitation removed.

| Patch 1.1.0 - DX11 - 4Ghz 1920x1080 | Patch 1.1.0 - DX12 - 4Ghz 1920x1080 | Patch 1.1.0 Performance % Increase |
| --- | --- | --- |
| 10278 frames | 11293 frames | 10% [9.87%] |
| 89.40fps Average | 99.11fps Average | 11% [10.86%] |
| 10.87fps Min | 14.86fps Min | 37% [36.70%] |
| 284.33fps Max | 463.00fps Max | 63% [62.83%] |
| 11.19ms Average | 10.09ms Average | 10% [9.83%] |
| 3.52ms Min | 2.16ms Min | 39% [38.63%] |
| 92.00ms Max | 67.29ms Max | 27% [26.85%] |

Although you can't really rely on the max and min results in this benchmark, this patch does allow for "Apples to Apples" comparisons with the graphics. From my previous test the FPS Averages I benched during the Real Time Benchmarks™ matched the internal benchmark so they are accurate. With the CPU performing more work you clearly see the benefits. DX12 definitely improves the performance. With my PC running 4Ghz + DDR3-1400Mhz we see a decent frame rate average increase of 11%. Now if the devs can push out a quick fix I can start benchmarking again. Then we can see if the patches and AMD driver updates will indeed increase performance.

When I overclocked my CPU even further to 4.8Ghz + DDR3-2095Mhz I was able to pull 126fps @ 1080p + DX12. This puts my FPS average 27.15% over my 4Ghz + DDR3-1400Mhz overclock. So DX12 appears to be working fine in this game as far as removing the CPU limitation. Here's a chart with the DX12 4Ghz vs DX12 4.8Ghz performance differences.
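One thing worth spelling out about the percentage column in the table above: frames and FPS are "higher is better" while frame times are "lower is better", so the percentages in the ms rows appear to be reductions rather than increases. A small Python sketch of that reading (the helper `improvement_pct` and its `lower_is_better` flag are my own naming, for illustration only):

```python
def improvement_pct(before: float, after: float, lower_is_better: bool = False) -> float:
    """Percent improvement from `before` to `after`, relative to `before`."""
    if lower_is_better:
        # For frame times, a drop in milliseconds is the improvement.
        return (before - after) / before * 100.0
    return (after - before) / before * 100.0


# DX11 vs DX12 at 1920x1080, values from the table above
fps_avg_gain = improvement_pct(89.40, 99.11)
frame_time_avg_gain = improvement_pct(11.19, 10.09, lower_is_better=True)

print(f"FPS Average: {fps_avg_gain:.2f}% higher")
print(f"Frame time Average: {frame_time_avg_gain:.2f}% lower")
```

The FPS gain and the frame time reduction describe the same speedup but are measured against different baselines, which is why the two percentages are close but not identical.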

| Patch 1.1.0 - DX12 - 4Ghz 1920x1080 | Patch 1.1.0 - DX12 - 4.8Ghz 1920x1080 | 4.8Ghz DX12 Performance % Increase |
| --- | --- | --- |
| 11293 frames | 14381 frames | 27% [27.34%] |
| 99.11fps Average | 126.02fps Average | 27% [27.15%] |
| 14.86fps Min | 17.04fps Min | 15% [14.67%] |
| 463.00fps Max | 671.75fps Max | 45% [45.08%] |
| 10.09ms Average | 7.94ms Average | 21% [21.30%] |
| 2.16ms Min | 1.49ms Min | 31% [31.01%] |
| 67.29ms Max | 58.69ms Max | 12% [12.78%] |

I'm sure gamers using newer AMD and Intel CPUs/architectures will benefit more unless they hit the GPU limit. Now we just have to wait for IO Interactive to sort out the graphics setting issues.

Update Patch 1.1.0 Fixed

The developers have resolved the graphical problems as well as other issues in the game. I can now benchmark the game with 100% maxed settings properly. I've run my benchmarks and the only FPS average difference I can see is the 4K average FPS. This isn't bad news at all due to the amazing increase. The overall FPS Average increased by 22%! I am running AMD's latest drivers as well [Crimson 16.4.2 Hotfix].

Apples to Apples

| Day 1 - DX12 - 4.6Ghz - 3840x2160 [4K] | Patch 1.1.0 [Fix] - DX12 - 4.6Ghz 3840x2160 [4K] | 4.6Ghz DX12 Performance % Increase |
| --- | --- | --- |
| 4081 frames | 5020 frames | 23% [23.01%] |
| 36.02fps Average | 43.82fps Average | 22% [21.65%] |
| 5.24fps Min | 10.02fps Min | 92% [91.22%] |
| 65.61fps Max | 532.19fps Max | 711% [711.14%] |
| 27.76ms Average | 22.82ms Average | 18% [17.79%] |
| 15.24ms Min | 1.88ms Min | 88% [87.66%] |
| 190.95ms Max | 99.82ms Max | 48% [47.72%] |

Day 1 - 4.6Ghz vs Patch 1.1.0 [Fix] - 4.8Ghz

| Day 1 - DX12 - 4.6Ghz - 3840x2160 [4K] | Patch 1.1.0 [Fix] - DX12 - 4.8Ghz 3840x2160 [4K] | 4.8Ghz DX12 Performance % Increase |
| --- | --- | --- |
| 4081 frames | 4975 frames | 22% [21.90%] |
| 36.02fps Average | 43.43fps Average | 20% [20.57%] |
| 5.24fps Min | 11.47fps Min | 119% [118.89%] |
| 65.61fps Max | 545.76fps Max | 732% [731.82%] |
| 27.76ms Average | 23.02ms Average | 17% [17.07%] |
| 15.24ms Min | 1.83ms Min | 88% [87.99%] |
| 190.95ms Max | 87.21ms Max | 54% [54.32%] |

The tighter RAM timings with 4.6Ghz give me a slightly better FPS Average, but the larger memory frequencies show better Max and Min FPS. The actual difference is so minor that it doesn't really matter all that much. The 4K performance increase is all that matters in this case. Great work IO Interactive and AMD!

Hitman Update 1.1.2 - Benchmark Results

Just like my previous Real Time Benchmarks™ I visit different parts of the levels and cause as much chaos as possible. Sometimes I try to be stealthy when I am outnumbered or when the enemies have shotguns and assault rifles. It's worth noting that the frame rate and frame time drops are due to the game randomly saving. I addressed this issue a few pages back where I explained what "FPS Min Caliber™" is and why I add it to my benchmarks. There are plenty of areas to explore and here are my results.

DirectX 12

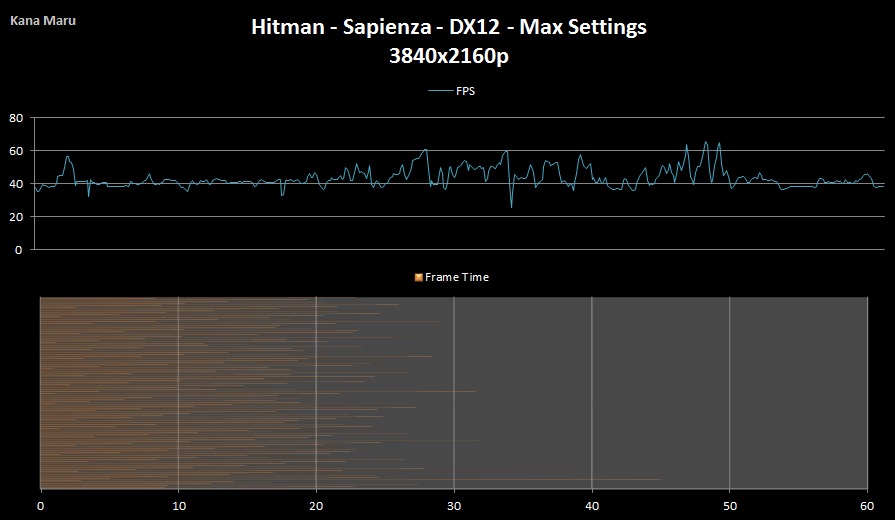

Hitman [100% Max Settings + DirectX 12] - 3840x2160 – 4K - Sapienza

AMD R9 Fury X @ Stock Settings [Crimson 16.4.2 Beta Drivers]

RAM: DDR3-2095Mhz

FPS Avg: 44.40fps

FPS Max: 68.1fps

FPS Min: 25fps

FPS Min Caliber™: 36.5fps

Frame time Avg: 25.5ms

Fury X Info:

GPU Temp Avg: 45.76c

GPU Temp Max: 47c

GPU Temp Min: 42c

CPU info:

CPU Temp Avg: 48c

CPU Temp Max: 64c

CPU Temp Min: 43c

CPU Usage Avg: 16%

CPU Usage Max: 26%

DirectX 11

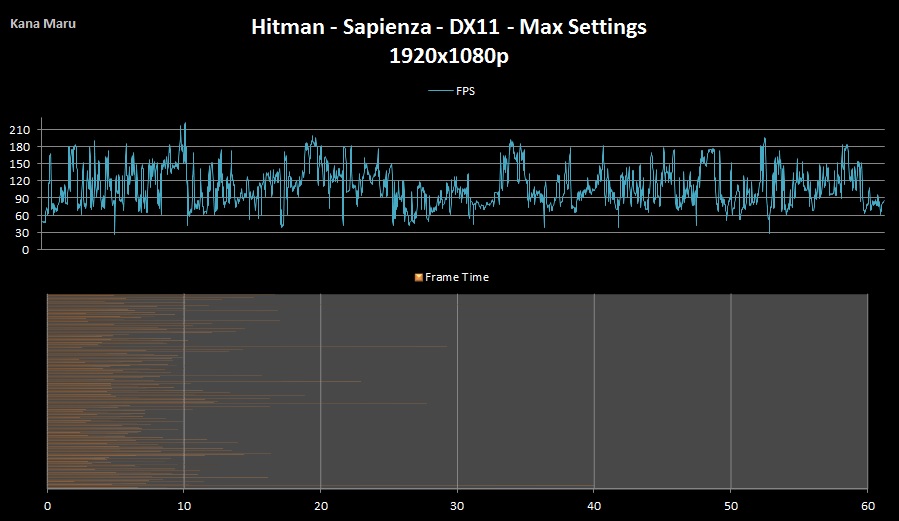

Hitman [100% Max Settings + DirectX 11] – 1920x1080p - Sapienza

AMD R9 Fury X @ Stock Settings [Crimson 16.4.2 Beta Drivers]

RAM: DDR3-2095Mhz

FPS Avg: 110fps

FPS Max: 221fps

FPS Min: 26.7fps

FPS Min Caliber™: 56.6fps

Frame time Avg: 9.13ms

Fury X Info:

GPU Temp Avg: 47c

GPU Temp Max: 50c

GPU Temp Min: 39c

CPU info:

CPU Temp Avg: 58c

CPU Temp Max: 67c

CPU Temp Min: 50c

CPU Usage Avg: 39%

CPU Usage Max: 62%

Check back soon for more updates.

Upcoming Updates:

- Add Internal DX12 Results

- Add Paris Level Real Time Benchmarks™

- Add CPU Usage Chart

- Add 4K, 1440p, 3200x1800 and possibly 1080p Real Time Benchmarks™

- Play and enjoy the game

- Wait for another patch/update

- Upload Episode 2 Sapienza 4K Real Time Benchmarks™

Thank you for reading.

Feel free to comment below.
