X58 + RTX 3080 Production Benchmarks

After writing my RTX 3080 review, I received a request to benchmark the X58 and the RTX 3080 in actual production-related apps. In my previous RTX 3080 article I focused mostly on gaming, and the synthetic benchmarks centered on Unigine, 3DMark and CryEngine (ray tracing). This time around I will try my best to provide more information on the X58 & RTX 3080 using actual programs for work and production-related tasks. The two major apps I decided to use were Blender and Unreal Engine 5.

Is Intel’s X58 still viable?

Whether Intel’s X58 is still viable depends on the user’s needs. Obviously the X58 can be fairly cheap to purchase nowadays, but that wasn’t always the case. After my initial 2013 Xeon L5693 article and my 2014 Xeon X5660 article, prices for the older X58 platform simply skyrocketed, and they stayed fairly high throughout 2014-2017. Motherboards that would go for $50 any day of the week shot up to $200+. I’ll speak more on X58 pricing and my influence on it in a future article; however, the X58 remained viable during the Sandy Bridge and Ivy Bridge era. It also remained a viable solution after AMD’s Ryzen 1000 series, especially in gaming scenarios, and my RTX 3080 review showed that the X58 was still viable for high-end 4K gaming as well. That’s not to say that an X58 with a Xeon L56xx or X56xx was the best machine to run during those years; it wasn’t, but it was far from the worst. It still had its purposes, and for those who needed a machine for coding, gaming and nearly any other task, the X58 still packed a punch at a great price. Personally, I used Blender, Zbrush, Unity, Unreal Engine, MS Visual Studio, SQL\MSSQL, DaVinci Resolve and various other programs to complete my daily tasks during those years (Xeon X5660, 2013-2018). Since then I have scaled back a bit, as I have moved into different areas of technology.

X58 + RTX 3080 Production Benchmarks YouTube Video

Specifications

Not much has changed since my RTX 3080 article. I am running the EVGA RTX 3080 Gaming ‘Black’ at its stock, out-of-the-box settings. The only difference is the GeForce driver: I used GeForce Driver v466.47 for this review. Hopefully this article will give a little more insight into how well the X58 performs in 2021. Please be sure to read my other articles and watch my video reviews on YouTube for more X58 information in 2020\2021. I am still planning on bringing more content, so stay tuned. Now let’s take a look at some of my results.

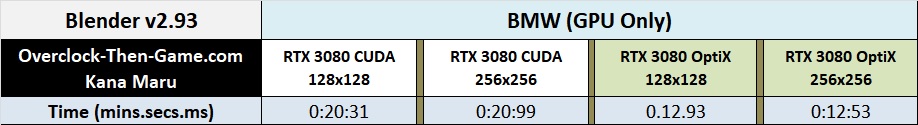

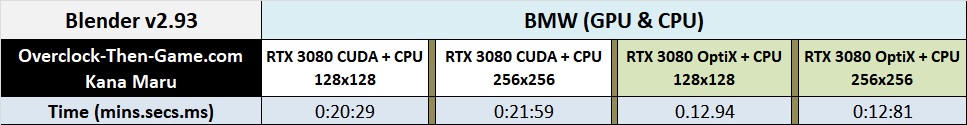

Blender

I ran quite a few renders in Blender v2.93 and quickly learned which performance options worked best for my X58 & RTX 3080 combo. I split the benchmarks into “GPU Only” and “GPU + CPU” results. Blender supports GPU rendering on both AMD and Nvidia cards, and for Nvidia it offers both CUDA and OptiX. OptiX is Nvidia’s ray tracing API, and on RTX GPUs it lets Blender use the dedicated RT Cores to accelerate ray tracing during GPU rendering. CUDA is still available and useful, but we will see how much better OptiX performs compared to it. OptiX is built on top of CUDA, so a CUDA-capable Nvidia driver is still required in order to use it. Besides GPU rendering there is also CPU-only (homogeneous) rendering, which I am going to avoid with such an old platform and CPU architecture; using only the CPU for rendering isn’t recommended here. However, Blender also supports heterogeneous rendering, which lets the CPU and GPU work together by splitting the workload between them. Newer CPU architectures and platforms will benefit much more from this type of rendering than X58-based CPUs, but I will still show various settings with heterogeneous rendering enabled.

Blender - Xbox Controller

While deciding which Blender scenes I would render, I came across this cool Xbox Controller that was created, animated and blended within Blender. It looked great and appeared decently demanding. The X58 + RTX 3080 had no issues in Blender’s viewport, which held a constant 144FPS to match my monitor’s refresh rate (I was running at 1080p during my benchmarks to get the highest refresh rate). Early in my tests I used a mixture of tile settings: 64x64, 128x128 and 256x256, and I quickly learned that 256x256 was my best setting for rendering. The tile size determines the dimensions of each piece of the image that is rendered at a time: Blender internally breaks the image down into pieces and renders each “piece” separately, just think of puzzle pieces being placed. Smaller tiles are better for CPUs (32x32 or 64x64) and larger tiles are better for GPUs (256x256), so when using heterogeneous rendering (CPU & GPU) it’s best to find a nice balance between the two. Since the RTX 3080 is a beast at rendering, it had no issues with 256x256.
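To make the puzzle-piece idea a bit more concrete, here is a small Python sketch that counts how many tiles a frame is split into at each tile size. It assumes a 1920x1080 frame purely for illustration (not necessarily the render resolution of the Xbox Controller scene), and the `tile_grid` helper is hypothetical: it only models the grid itself, not how Blender actually schedules tiles.

```python
def tile_grid(width: int, height: int, tile: int) -> tuple[int, int, int]:
    """Return (columns, rows, total tiles) for an image cut into tile x tile pieces.

    Edge tiles are simply smaller when the resolution is not an exact
    multiple of the tile size, so both counts round up.
    """
    cols = (width + tile - 1) // tile   # integer ceiling division
    rows = (height + tile - 1) // tile
    return cols, rows, cols * rows


if __name__ == "__main__":
    # 1920x1080 is an assumed example resolution.
    for size in (32, 64, 128, 256):
        cols, rows, total = tile_grid(1920, 1080, size)
        print(f"{size}x{size}: {cols} x {rows} = {total} tiles")
```

For a 1920x1080 frame this works out to 40 tiles at 256x256 versus 510 tiles at 64x64, so a larger tile size hands the GPU far fewer, bigger chunks of work, which is one way to picture why GPUs tend to prefer them.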

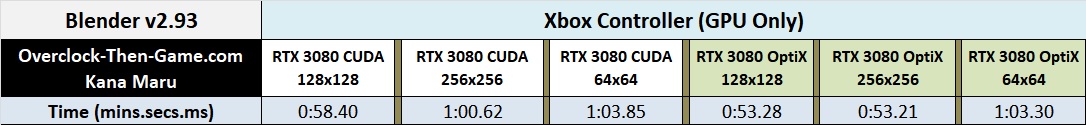

Xbox Controller – RTX 3080 GPU Rendering

Looking at the results, we can quickly see that OptiX is faster than CUDA. We also see that CUDA with 128x128 tiles is slightly faster than with 256x256, by 3.66%: CUDA 128x came in at roughly 58 seconds and CUDA 256x at 1 minute. Very minor, but CUDA 128x wins here. The 64x64 tiles show the worst results, coming in at 1 minute and 3.85 seconds. Nvidia’s OptiX, on the other hand, shows improvements over CUDA once the RTX RT Cores are utilized. In this case 256x256 was the best option and 64x64 was the worst. With OptiX, 128x128 and 256x256 rendered in roughly the same time, about 53 seconds, which makes OptiX roughly 5 to 7 seconds faster than CUDA in this specific Blender Xbox Controller render. The results seem minor, but they add up when you have to render many frames for an animation. There are many ways to speed up rendering, but during my benchmark testing I used the default settings and only changed the tile settings to give a fair and balanced view of the results. The fact that I don’t use Blender nearly as much nowadays also kept me from modifying a lot of the settings.
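Since a few seconds per frame add up over an animation, here is a quick back-of-the-envelope sketch in Python. It assumes every frame renders in about the same time as the single Xbox Controller frame (roughly 58 seconds with CUDA 128x128 and 53 seconds with OptiX), and the 250-frame animation length is a hypothetical example, not something I rendered. The helper names are also my own.

```python
def animation_time_saved(slow_s: float, fast_s: float, frames: int) -> float:
    """Seconds saved over a whole animation, assuming every frame takes
    about as long as the single benchmark frame (a simplification)."""
    return (slow_s - fast_s) * frames


def fmt_hms(seconds: float) -> str:
    """Format a duration in seconds as 'Hh MMm SSs'."""
    minutes, secs = divmod(round(seconds), 60)
    hours, minutes = divmod(minutes, 60)
    return f"{hours}h {minutes:02d}m {secs:02d}s"


if __name__ == "__main__":
    frames = 250               # hypothetical animation length
    cuda_s, optix_s = 58, 53   # rounded per-frame times from the Xbox Controller render
    print("CUDA total: ", fmt_hms(cuda_s * frames))
    print("OptiX total:", fmt_hms(optix_s * frames))
    print("Saved:      ", fmt_hms(animation_time_saved(cuda_s, optix_s, frames)))
```

Under those assumptions the 5 second per-frame difference grows to about 20 minutes over the animation (4h 01m 40s with CUDA vs 3h 40m 50s with OptiX).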

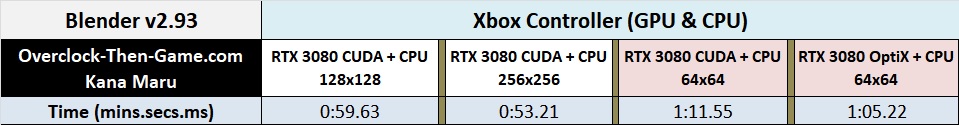

Xbox Controller – RTX 3080 GPU & CPU Rendering

Now let’s take a look at heterogeneous rendering, which allows both the CPU & GPU to be utilized. My X5660 was clocked at 4.6GHz and the RAM at 1600MHz (DDR3 RDIMM). Not the most impressive specs, but I can’t complain. I ran the benchmarks using CUDA + CPU and OptiX + CPU with different tile settings (64x64, 128x128 & 256x256). OptiX was clearly the better choice just by looking at the 64x64 results: “CUDA + CPU” took nearly 1 minute and 12 seconds, while “OptiX + CPU” took only 1 minute and 5 seconds. Sadly, I didn’t run more “OptiX + CPU” benchmarks with larger tiles for this scene. Comparing the “GPU + CPU” results to the “GPU Only” results shows that there are situations where adding the CPU can be beneficial, such as “CUDA 128x128” vs “CUDA + CPU 256x256”, where “CUDA + CPU” was roughly 7 seconds faster than using the GPU (CUDA) by itself. Using only the GPU (OptiX) performed the best out of all of the tests, coming in at 53 seconds.
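Here is a toy model of heterogeneous rendering in Python, to show how tile size interacts with a fast GPU and a slow CPU pulling from one shared queue of tiles. Everything in it is an assumption for illustration: the `simulate` and `tile_areas` helpers are hypothetical, the throughput numbers are made up (the GPU is simply assumed to be 10x faster than the CPU), the frame is assumed to be 1920x1080, and it ignores per-tile overhead, which is a big reason real GPUs prefer large tiles. It only captures the load-balancing side of the story, not how Blender schedules work internally.

```python
import heapq


def tile_areas(width: int, height: int, size: int) -> list[int]:
    """Pixel count of each tile, row by row; edge tiles may be smaller."""
    return [
        min(size, width - x) * min(size, height - y)
        for y in range(0, height, size)
        for x in range(0, width, size)
    ]


def simulate(tiles: list[int], devices: dict[str, float]) -> tuple[float, dict[str, int]]:
    """Hand each tile, in order, to whichever device becomes free first.

    devices maps a name to an assumed throughput in pixels per second.
    Returns (time until the last tile finishes, tiles rendered per device).
    """
    free_at = [(0.0, name) for name in devices]  # (time device is free, name)
    heapq.heapify(free_at)
    counts = {name: 0 for name in devices}
    finish = 0.0
    for pixels in tiles:
        start, name = heapq.heappop(free_at)     # earliest-free device takes the tile
        done = start + pixels / devices[name]
        counts[name] += 1
        finish = max(finish, done)
        heapq.heappush(free_at, (done, name))
    return finish, counts


if __name__ == "__main__":
    gpu_only = {"gpu": 100_000.0}                  # made-up throughput
    gpu_cpu = {"gpu": 100_000.0, "cpu": 10_000.0}  # CPU assumed 10x slower
    for size in (64, 128, 256):
        tiles = tile_areas(1920, 1080, size)
        t_gpu, _ = simulate(tiles, gpu_only)
        t_mix, counts = simulate(tiles, gpu_cpu)
        print(f"{size}x{size}: GPU only {t_gpu:.2f}s, GPU + CPU {t_mix:.2f}s, {counts}")
```

In this model small tiles let the CPU take a modest share of the work without holding up the end of the render, while large tiles raise the risk that the slow CPU is still working on a big tile after the GPU has run out of work. Real results, like the ones above, also depend on per-tile overhead and the actual CPU and GPU speeds.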

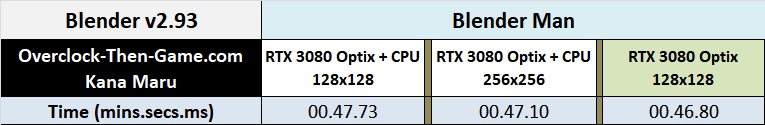

Blender Man Render

Blender - BMW Render

I decided to use the well-known BMW scene for rendering because there is plenty of data out there to compare against using this particular benchmark. I quickly decided that 128x128 and 256x256 would be the best tile sizes for testing. Comparing both the “GPU Only” and “GPU + CPU” results shows that regardless of which API (CUDA or OptiX) you decide to use, the results are roughly the same with either 128x128 or 256x256. However, it is worth noting that you can clearly see the advantage Nvidia’s OptiX has over its CUDA implementation. Focusing on the “GPU Only” results, OptiX is roughly 8 seconds faster than CUDA, and comparing CUDA’s best score to OptiX’s best score shows a nice 38% increase in the BMW render. We are now seeing the power of Nvidia’s RT Cores in action.

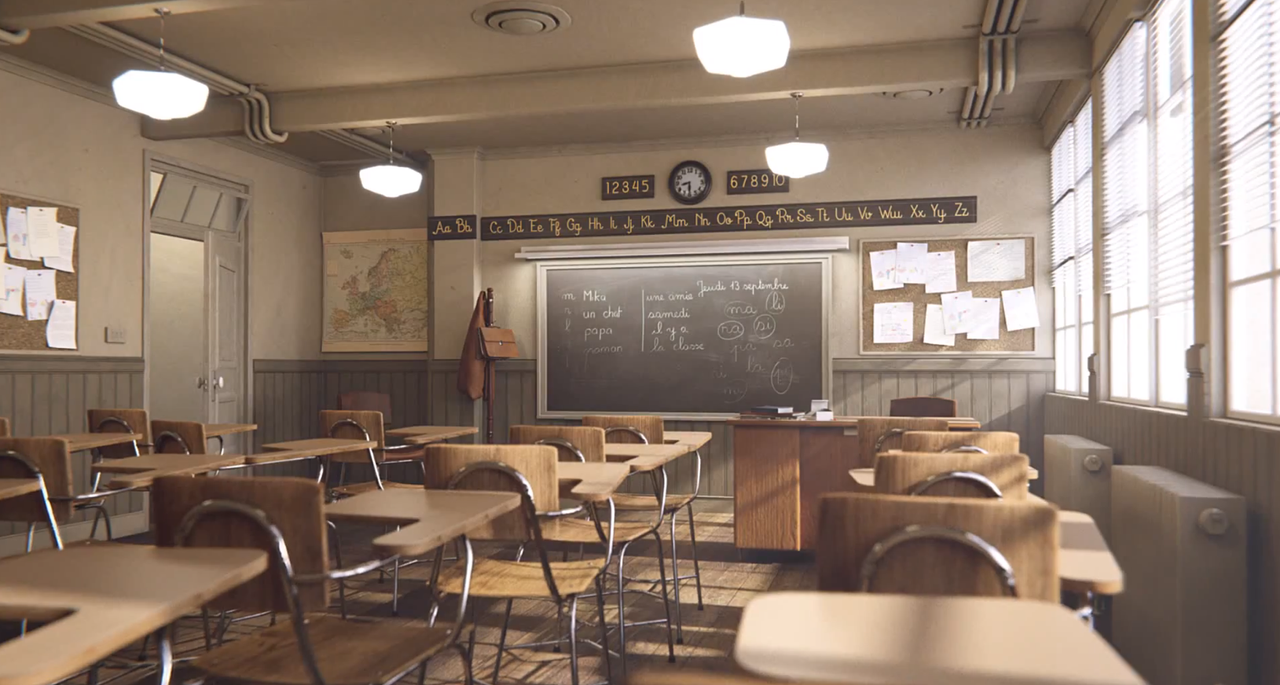

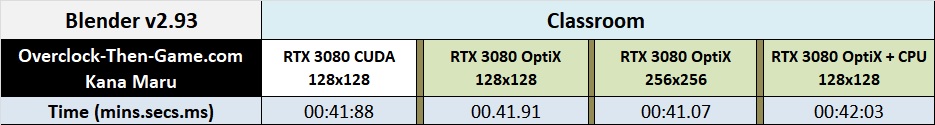

Blender - Classroom

The Classroom scene is also a well-known Blender benchmark. CUDA and OptiX perform roughly the same here, even with different tile settings. I also included the “GPU + CPU” benchmark, and it was only 1 second slower than the “GPU Only” result. Overall I’m impressed with these results compared to many newer platforms, which I will compare later in this article, meaning the X58 can still be a great backup for production work if it is ever needed.

Unreal Engine 5

I downloaded Unreal Engine 5, which is still in Early Access. The engine is quite large and is still getting ready for production. Ahead of the PlayStation 5 launch, Epic revealed the new engine and its capabilities. Unreal Engine 5 allows high-quality, ‘film-like’ art to be imported directly into the engine. Detailed models from programs such as Zbrush are supported, as are very large polygon counts, as was shown in the UE5 gameplay trailer. Unreal Engine 5 has a ton of other new features that will assist creators, and I was able to get my hands on UE5 and the Valley of the Ancient files.

UE5 is a 35GB download and Valley of the Ancient was a 100GB download. However, once you load and unpack Valley of the Ancient within Unreal Engine (models\textures\compiling\shaders), it expands to a whopping 206GB! This is very large, but I go above and beyond when gathering data for you enthusiasts to read and enjoy. In this benchmark suite I decided to benchmark both the Unreal Engine 5 viewport frames per second and the standalone “in-game” FPS; the difference is that in the viewport I was moving around the scene and editing models.

Unreal Engine 5 – Loading Performance

When loading the Ancient World map into UE5, the editor skips to 55% nearly instantly and then takes roughly 1 minute to load the entire level. After the level loads you are free to edit and look around the large-scale world. You can float around the world or launch the game and use a drone to fly around the area. Compiling and running the game within the Unreal Engine 5 editor took about 20 seconds on my X58 platform. There are two game worlds in the Valley of the Ancient demo: the Ancient World and the Dark Ancient World. Leaving the Ancient World to enter the Dark World took roughly 1 minute and 3 seconds in the UE5 editor, mostly due to loading the assets, textures, the dark world map and so on.

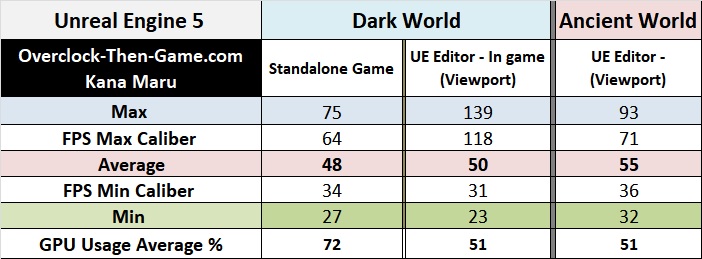

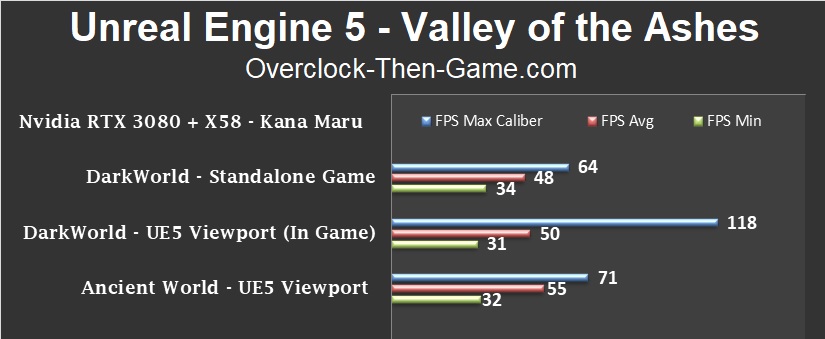

Unreal Engine 5 – Frames Per Second Performance

Now let’s take a look at the FPS in the Unreal Engine 5 editor viewport and in the standalone game. Within the UE5 editor the FPS is a bit higher than in-game, because parts of the screen are taken up by the editor’s panels, so the viewport renders a smaller area. The “Standalone Game”, obviously, uses “all” of the screen space (1080p for the highest refresh rates). The RTX 3080 isn’t breaking a sweat, averaging only 51% GPU usage while working within the UE5 editor and 72% while playing the standalone game. It is also worth noting that Valley of the Ancient isn’t necessarily the most optimized demo either: it uses a ton of large assets and expands to over 200GB, as I explained earlier. Minimum FPS is normally the most important FPS metric for me, and the X58 (& RTX 3080) does decently well there for a 13-year-old platform.

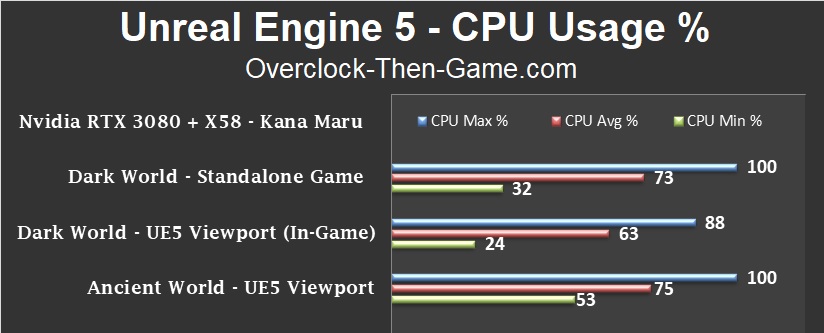

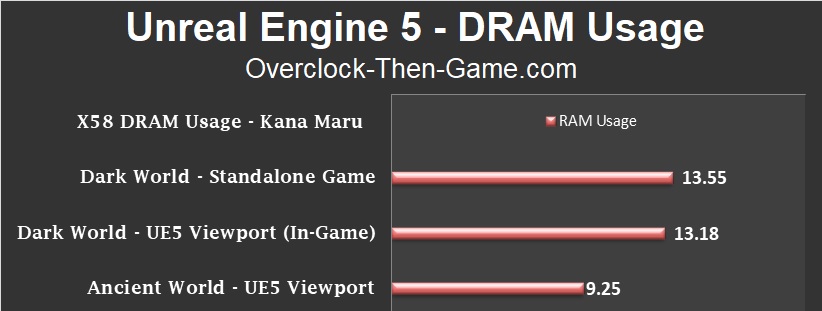

UE5 - X58 CPU Usage & DRAM Percentage

CPU usage was, as expected, quite high given that I am running a very old Intel platform. It averaged 73% within the standalone game, and 63% and 75% while running the Dark World and Ancient World, respectively, within the UE5 editor. While loading and playing the game within the UE5 editor, RAM usage averaged 13.55GB on the Dark World map. In the standalone game the Dark World used a little less at 13.18GB. The Ancient World used only 9.25GB within the UE5 editor, which was expected as it is mostly a large, empty open world.

DRAM Usage

UE5 - RTX 3080 vRAM Usage, Temps & Power Usage

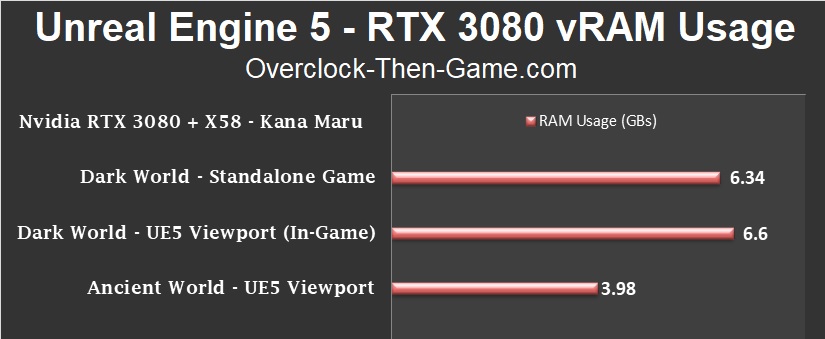

The RTX 3080 vRAM usage averaged 6.34GB in the standalone game on the Dark World map and 6.6GB while playing the Dark World within the UE5 editor. The Ancient World shows an average of 3.98GB.

UE5 - RTX 3080 Power Usage and Temperature

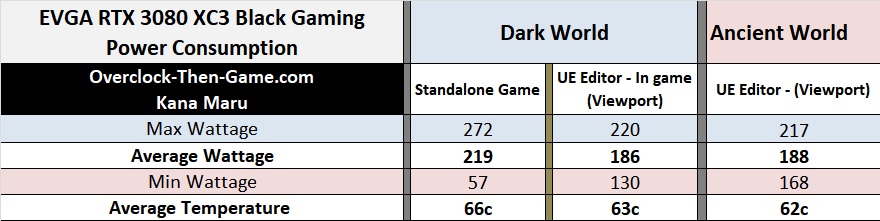

The Nvidia RTX 3080 drew an average of approximately 187 watts in the UE5 editor, both within and outside of the game. The standalone game drew 219 watts and ran the GPU at its maximum core clock.

X58 + RTX 3080 Blender Comparison vs Modern Machines

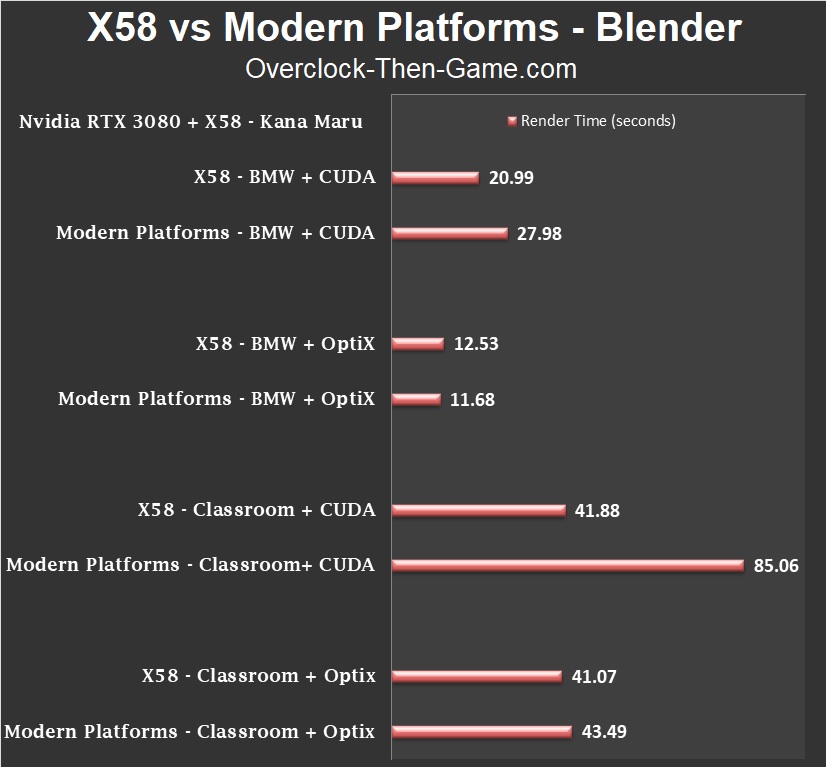

In my previous RTX 3080 review I compared the X58’s gaming performance to modern Intel\AMD platforms, and I have decided to do the same in this article. My X58 + RTX 3080 performs just as well as the newer platforms here: since most of the work is done on the GPU through Nvidia’s OptiX and CUDA, the renders run fine. The refresh rate was very nice while working in Blender’s UI and viewport, and I was able to get 144FPS at 1080p with ease. The modern machines in this comparison all run different hardware and different brands of the RTX 3080; I am personally running the EVGA RTX 3080 Gaming ‘Black’.

In the chart we can see that the X58 and RTX 3080 combo performs very well against the modern competition. In the BMW CUDA render the X58 comes in 33.3% faster than the average of a wide range of machines running the RTX 3080. In the BMW OptiX results the X58 was only 6.78% slower than the modern Intel and AMD platforms; in other words, with OptiX the X58 + RTX 3080 was only 0.85 seconds slower, very minor indeed.

The data presented in this section comes directly from Blender, but one set of data was unusual: the Classroom CUDA results. The results I gathered showed an average of 85.06 seconds, which is much slower than my result (41.88 seconds), so I am not sure whether different settings were used. I did find another Classroom render with a result much closer to mine: 31 seconds (CUDA), which was 35.48% faster than my CUDA result (approx. 42 seconds). Although I was only able to find one sample, it should provide a better comparison than the large sample that showed 85 seconds. The Classroom OptiX results align much better than the CUDA results explained above: across a large sample, the X58 platform performs 6% faster than the modern machines running the RTX 3080.
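Percentages like these depend on which time you divide by, so here is a short Python sketch of the arithmetic behind the Classroom CUDA comparison. The helper names are my own, hypothetical ones, and the inputs are the rounded times quoted above (approx. 42 seconds for my CUDA run vs 31 seconds for the other sample).

```python
def percent_faster(slower_s: float, faster_s: float) -> float:
    """Speed advantage of the faster run, relative to the faster run's time."""
    return (slower_s - faster_s) / faster_s * 100


def percent_time_saved(slower_s: float, faster_s: float) -> float:
    """Share of the slower run's time that the faster run saves."""
    return (slower_s - faster_s) / slower_s * 100


if __name__ == "__main__":
    mine, other = 42.0, 31.0  # Classroom CUDA, rounded seconds
    print(f"{percent_faster(mine, other):.2f}% faster")         # 35.48% faster
    print(f"{percent_time_saved(mine, other):.2f}% less time")  # 26.19% less time
```

The 35.48% figure corresponds to dividing by the faster 31-second time; dividing by my 42-second time instead gives about 26%, so it is worth keeping the convention in mind when comparing numbers from different sources.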

(Lower Is Better)

Conclusion & Comments

Here we are once again, wrapping up another X58 article in 2021. The X58 platform is nearly 13 years old and it is still going strong. In this article I have covered roughly 28 benchmarks using production-related workloads for creators. The RTX 3080 is a workhorse, and Nvidia’s OptiX leverages the power of the RTX RT Cores, showing nice increases over CUDA in most cases. My Unreal Engine 5 results show the loading & compiling performance of the X58 platform, even with an unoptimized demo: Valley of the Ancient is a very large 100GB download that unpacks to 206GB. However, the X58 is still able to run Unreal Engine 5 and this large, inefficient demo fairly well in my opinion. Once the demo has been optimized and Unreal Engine 5 moves from Early Access to full release, we could see much better results for both the RTX 3080 and the X58-based CPU\platform. Only time will tell. Overall, viewport performance was good in both Blender and Unreal Engine 5, and both give you plenty of options to optimize viewport performance (FPS & frametimes). Unreal Engine 5 is a bit harder to run since it’s a full-fledged production engine for AAA games and industrial applications. Blender is another awesome piece of open source software whose main focuses include modeling, animation, rigging and rendering, though it is not limited to those; it can also be used for 2D animation\modeling and motion tracking. Depending on the workload, Blender is far easier to run than Unreal, as I was able to reach 144FPS easily. Given that it is nearly 13 years old, I feel that the X58 still does a really good job with current workloads and apps.

Thank you for reading my "X58 + RTX 3080 Production Benchmarks" article. Feel free to leave a comment below and to share this article. If you would like to see more content like this, please consider supporting me on Patreon or PayPal. Thank you.