RTX 4000 Specs & Price Revealed And It's Not Pretty

Introduction

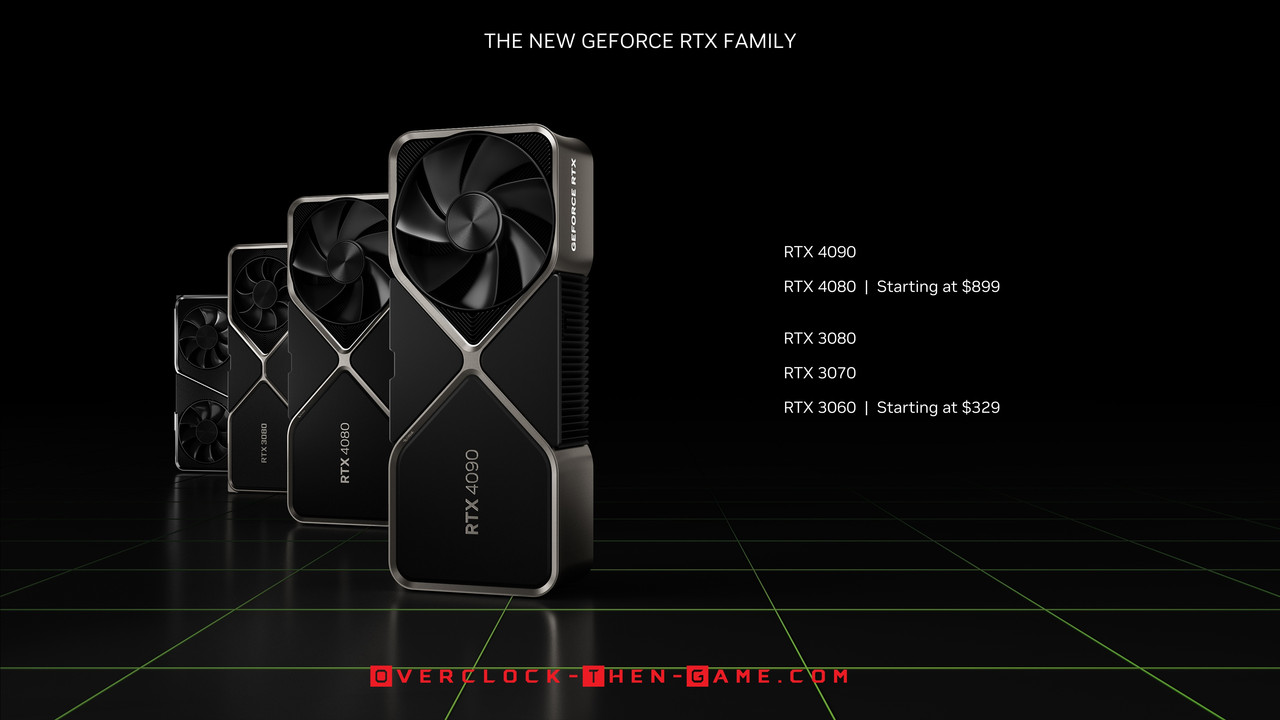

Nvidia held its fall GTC conference on September 20, 2022, and surprised the world in more ways than one. The keynote opened with the Racer RTX video, which looks very impressive; I loved the realistic lighting and post-processing. Moving on from the RTX 3000 series “Ampere”, Nvidia’s RTX 4000 series is named “Ada Lovelace”, after the mathematician often credited as the first computer programmer. The RTX 4090 and 4080 will definitely bring some serious performance, but there are a few issues that I must discuss. I have been very busy lately and only got a chance to sit down and watch the GTC keynote several days after it aired. A friend had messaged me about an RTX 4080 “Lite”. At first I thought he meant the initial RTX 4080 release that would later be followed by a “Ti” version. After checking out the GTC keynote and the RTX 4000 series prices and specifications, I knew exactly what he meant. I will save the in-depth micro-architecture features for an RTX 4000 series review article. This article focuses on the issues I have with the 4000 series compared to the 3000 series, and on Nvidia’s possible reasoning behind its decisions.

Pricing

It should come as no surprise that the RTX 4000 series is more expensive. The economy appears to be in a recession and stock markets are falling across the board. From Q4 2020 to Q2 2022 the market settled on the RTX 3080 selling for $1,200 to $1,800. The RTX 3080 Ti release (Q2 2021) didn’t do much to settle prices either, but now that RTX 3000 series cards are much easier to buy, prices have come down in 2022. In Q3 2022 you can find RTX 3080 Tis for around $700 to $900 and RTX 3080s for roughly $500 to $600. Now that Nvidia knows what people are willing to spend, it is pricing its GPUs according to the market (inflation, recession, etc.). The RTX 4090 will cost $1,599, the RTX 4080 (16GB) $1,199 and the RTX 4080 (12GB) $899. Nvidia can price its GPUs however it pleases, but there is more to the picture. Just as the RTX 3080 arrived in confusing, staggered releases with multiple hardware configurations under one name, we get the same treatment this time around, except on release day.

More Gaming Performance, but Throughput in Other Workloads Could Be Hindered

For most enthusiasts currently using an RTX 3080 or RTX 3080 Ti, the RTX 4080 12GB is simply off the table at the $900 price point. In my opinion any GPU priced above $700 should have a 512-bit memory bus by default, but I can’t always have my way; I can live with the 384-bit memory bus on the RTX 3090 and the 320-bit bus on the RTX 3080. With the RTX 4000 series things change dramatically. Only the RTX 4090 will have a 384-bit memory bus. The RTX 4080 16GB will have only a 256-bit memory bus, and the RTX 4080 12GB will release with only a 192-bit memory bus. To keep things simple I will use the original RTX 3080 (MSRP $699.99) for comparisons throughout this article and will not include the 3000 series “Ti” prices. To be honest, it would be difficult to use the actual street price of the RTX 3080, since it has fluctuated wildly over the past two years.

Focusing on the RTX 4080 16GB for a moment

The RTX 3080 that I purchased (at MSRP in 2020) has a 320-bit memory bus that moves 760 GB/s. The RTX 4080 16GB will release with a 256-bit memory bus that moves 717 GB/s. The bus width decreases by 20% and the bandwidth decreases by 6%. The RTX 3080’s MSRP was $699 and the RTX 4080 16GB’s MSRP will be $1,199, a whopping 71% increase before tax. Not many people will feel good paying an extra $500 for inferior memory specs; however, performance is what truly matters, right? As you can see, this doesn’t add up from a technical perspective, but the PC market has changed dramatically since 2020. It appears that Nvidia doesn’t want the x80 getting anywhere near the x90 in raw performance.
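
Memory bandwidth follows directly from bus width and per-pin data rate: bandwidth (GB/s) = bus width (bits) / 8 × data rate (Gbps). The minimal Python sketch below reproduces the numbers above. It assumes Nvidia’s published GDDR6X data rates of 19 Gbps (RTX 3080), 22.4 Gbps (RTX 4080 16GB) and 21 Gbps (RTX 4080 12GB); those per-pin rates are not quoted in this article, and the helper names are just for illustration.

```python
def bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width in bytes times per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


def pct_change(old: float, new: float) -> float:
    """Percentage change from `old` to `new` (negative means a decrease)."""
    return (new - old) / old * 100


# Assumed per-pin GDDR6X data rates in Gbps (Nvidia's published specs,
# not quoted in this article).
rtx_3080 = bandwidth_gbs(320, 19.0)       # 760.0 GB/s
rtx_4080_16gb = bandwidth_gbs(256, 22.4)  # 716.8 GB/s, rounded to 717
rtx_4080_12gb = bandwidth_gbs(192, 21.0)  # 504.0 GB/s

print(f"Bus width change: {pct_change(320, 256):.0f}%")                 # -20%
print(f"Bandwidth change: {pct_change(rtx_3080, rtx_4080_16gb):.1f}%")  # -5.7%
print(f"MSRP change: {pct_change(699, 1199):.1f}%")                     # 71.5%
```

The bandwidth drop is smaller than the bus-width drop because the RTX 4080’s memory runs at a higher data rate, which partly makes up for the narrower bus.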

RTX 4080 12GB = 192-bit Memory Bus

It gets even worse for the RTX 4080 12GB, which gets only a 192-bit memory bus. Nvidia is obviously crippling the memory bus and bandwidth. A 192-bit memory bus is usually reserved for lower-tier (x60) graphics cards; last generation that was the RTX 3060 (MSRP $329.99). The RTX 4080 12GB will only be capable of moving 504 GB/s at stock. Put another way, the RTX 3080 10GB ($699) has 51% more memory bandwidth than the RTX 4080 12GB ($899), and for a current RTX 3080 user, moving to the RTX 4080 12GB would mean a 34% decrease in bandwidth. The biggest issue for current RTX 3080 users is that even the cheaper RTX 4080 12GB will cost 28% more. Let’s be honest: as with the RTX 3000 series, the RTX 4000 series will end up costing more than MSRP regardless of what Nvidia sets. AIBs will still release their cards at higher prices over the next few months, and let’s not forget our lovely scalpers and bots that will come into the market. This memory bandwidth decrease will definitely affect certain applications outside of gaming. Regardless of how well the RTX 4000 series runs, no one wants to pay so much more while losing so much raw theoretical bandwidth. Remember that I can easily overclock my RTX 3080’s VRAM to 840 GB/s. Compared with the RTX 4080 12GB, my overclocked RTX 3080 has 67% more bandwidth, so in my case switching to a stock RTX 4080 12GB would mean a 40% decrease in memory bandwidth. That’s not to say that the RTX 4080 12GB/16GB memory won’t overclock easily as well, but I am only comparing against the information that Nvidia has released.
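
The same gap shows up as two different percentages (51% vs 34%, or 67% vs 40%) because the answer depends on which card is the baseline. Here is a small Python sketch of that distinction, using only the bandwidth figures and MSRPs quoted in this article; the function names are illustrative.

```python
def pct_more(a: float, b: float) -> float:
    """How much larger `a` is than `b`, as a percentage of `b`."""
    return (a / b - 1) * 100


def pct_less(a: float, b: float) -> float:
    """How much smaller `a` is than `b`, as a percentage of `b`."""
    return (1 - a / b) * 100


RTX_3080_STOCK = 760.0  # GB/s
RTX_3080_OC = 840.0     # GB/s, the author's memory overclock
RTX_4080_12GB = 504.0   # GB/s, stock

# Baseline is the RTX 4080 12GB: the RTX 3080 has about 51% more bandwidth.
print(f"{pct_more(RTX_3080_STOCK, RTX_4080_12GB):.0f}% more")  # 51% more
# Baseline is the RTX 3080: switching to the RTX 4080 12GB loses about 34%.
print(f"{pct_less(RTX_4080_12GB, RTX_3080_STOCK):.0f}% less")  # 34% less
# Against the overclocked RTX 3080: about 67% more / 40% less.
print(f"{pct_more(RTX_3080_OC, RTX_4080_12GB):.0f}% more, "
      f"{pct_less(RTX_4080_12GB, RTX_3080_OC):.0f}% less")     # 67% more, 40% less
# Price: RTX 4080 12GB MSRP ($899) vs RTX 3080 MSRP ($699).
print(f"{pct_more(899, 699):.1f}% more expensive")             # 28.6% more expensive
```

Neither number is wrong; “X% more” and “Y% less” simply describe the same ratio from opposite ends.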

(Table: RTX 4000 Series Specs, Overclock-Then-Game.com)

Performance – Nvidia does not want the x80 anywhere near the x90

Now focusing on the RTX 4090 for a brief moment

For clarity, at release the RTX 3090 had roughly 21% more CUDA cores than the RTX 3080. The RTX 4090 will have 68% more CUDA cores than the RTX 4080 16GB and 113% more than the RTX 4080 12GB. Nvidia simply does not want the x80 cards anywhere near the x90 card this time around. Outside of gaming, the RTX 3080 was great for workloads and professional applications that could use CUDA/OptiX without drawing a lot of power. Nvidia has also left itself plenty of room to counter anything AMD tries with its own RTX 4000 “Ti” series.
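
These gaps are plain ratios of CUDA core counts. The sketch below assumes Nvidia’s published counts (10,496 for the RTX 3090, 8,704 for the RTX 3080, 16,384 for the RTX 4090, 9,728 for the RTX 4080 16GB and 7,680 for the RTX 4080 12GB); the counts themselves are not listed in this article’s text, so treat them as an assumption to check against the spec table. With these counts the RTX 3090 vs RTX 3080 gap comes out to roughly 21%.

```python
# Assumed CUDA core counts from Nvidia's published specifications.
CUDA_CORES = {
    "RTX 3090": 10_496,
    "RTX 3080": 8_704,
    "RTX 4090": 16_384,
    "RTX 4080 16GB": 9_728,
    "RTX 4080 12GB": 7_680,
}


def extra_cores_pct(bigger: str, smaller: str) -> float:
    """Percentage more CUDA cores `bigger` has than `smaller`."""
    return (CUDA_CORES[bigger] / CUDA_CORES[smaller] - 1) * 100


for big, small in [("RTX 3090", "RTX 3080"),
                   ("RTX 4090", "RTX 4080 16GB"),
                   ("RTX 4090", "RTX 4080 12GB")]:
    print(f"{big} vs {small}: +{extra_cores_pct(big, small):.0f}%")
# RTX 3090 vs RTX 3080: +21%
# RTX 4090 vs RTX 4080 16GB: +68%
# RTX 4090 vs RTX 4080 12GB: +113%
```

Core count is only one input to performance (clocks, cache and memory matter too), but it shows how much wider the x80-to-x90 gap is on Ada.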

x80 vs x90 – Ampere then Ada

Memory Bandwidth

When the RTX 3080 was compared to the RTX 3090 there wasn’t a huge difference in specifications. Overall the RTX 3080 was roughly 20% below the RTX 3090 across all major specs, and its theoretical performance numbers were roughly 21% lower as well. Overclocking the RTX 3080’s memory could push its performance numbers closer to a stock RTX 3090. I could easily overclock the RTX 3080’s GDDR6X memory to 840 GB/s, which is only 11% less than the RTX 3090’s stock memory bandwidth. So for workloads that depend heavily on OptiX/CUDA cores and memory throughput, you got very nice performance for the price in professional apps and specific workloads. Speaking of prices, we all know how pricing went with the Nvidia 3000 series and the AMD 6000 series. Still, the RTX 3080 deserved to be called the “flagship”. From my early speculation, theoretical performance will be roughly 70% higher on the RTX 4090 than on the RTX 4080 16GB, but we will have to wait for benchmarks. Things get far worse for the RTX 4080 12GB: the RTX 4090 will be over 100% faster than it. That is not even close to the roughly 20% gap we had between the RTX 3090 and the RTX 3080.

Memory Bus Width

Nvidia’s Decisions Have Given Us an Interesting Predicament

Nvidia has established the x80 card as the flagship, usually followed by an x80 “Ti” version the following year. This time around it feels as if the x80 is no longer the flagship and is severely limited. The 4080 12GB feels more like an x70-tier card given its low performance numbers compared to the RTX 4090. Nvidia has put itself in an interesting position in the eyes of enthusiasts and gamers, but it all comes from Nvidia’s own decisions. Nvidia first introduced the Titan in 2013 with its 700 series; before that, the x90 was the top dual-GPU card for prosumers who wanted to game and create content. Nvidia moved away from dual-GPU x90 cards and launched the successful Titan brand with the 700 series. The Titan took over the role of top enthusiast and content-creator GPU, replacing the x90 from the GTX 700 series until the RTX 2000 series. However, the Titan RTX released at professional and enterprise-market pricing ($2,499), so we can’t really count that one; for the 2000 series, the RTX 2080 Ti was the top-of-the-line card for enthusiasts. Multiple 3080 releases over the past few years have been very confusing because Nvidia changed core counts, VRAM and other specifications while keeping the same tier name. Luckily, this time we are getting the multiple 4080 variants upfront on day one.

(Table: RTX 4000 vs. RTX 3000 Specifications, Overclock-Then-Game.com)

Traditional Price Difference Between the x80 & x90

For most of recent history the x80 has been anywhere from $350 to $800 (MSRP) less expensive than the x90. From the GTX 500 series to the RTX 3000 series, the average MSRP difference between the x80 and the x90/Titan/2080 Ti is $443. The two largest differences between the x80 and x90 were in the 600 series ($600) and the 3000 series ($800); if we remove those two, the average drops to $340. As I stated earlier, and will repeat, I used the 2080 Ti price because the 20xx series did not have an x90 variant; the Titan RTX (2000 series) had an MSRP of $2,499 and was aimed at an entirely different market, so I did not include it. With the RTX 4000 series, the price gap falls in line with what we normally see if we compare the 4080 16GB to the 4090 ($400 difference in MSRP), but it jumps to $700 if you compare the 4080 12GB to the 4090. This time around, Nvidia covered the entire range of price gaps from the past decade or so with the RTX 4080/4090 on release day. Instead of making one 4080 flagship, Nvidia is trying to fill the price gaps left by the last generation and the incoming AMD 7000 series, while leaving itself and AIBs a large cushion to price their GPUs accordingly (4080 OCs / RTX 4080 Ti).
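
The two averages are consistent with each other, and the trimmed one can be recovered from the full one without the per-generation table. This Python sketch assumes that “GTX 500 series to RTX 3000 series” covers seven desktop generations (500, 600, 700, 900, 1000, 2000 and 3000, since there was no desktop GTX 800 series); because $443 is itself rounded, the result is approximate.

```python
def trimmed_average(full_avg: float, n: int, removed: list[float]) -> float:
    """Average of what remains after dropping `removed` from an n-item average."""
    total = full_avg * n
    return (total - sum(removed)) / (n - len(removed))


# Assumption: seven desktop generations (500, 600, 700, 900, 1000, 2000, 3000).
avg_without_outliers = trimmed_average(443, 7, [600, 800])
print(f"Average gap without the 600 and 3000 series: ${avg_without_outliers:.0f}")  # $340

# Launch MSRP gaps to the RTX 4090 ($1,599) for comparison.
for name, msrp in [("RTX 4080 16GB", 1199), ("RTX 4080 12GB", 899)]:
    print(f"{name}: ${1599 - msrp} below the RTX 4090")
# RTX 4080 16GB: $400 below the RTX 4090
# RTX 4080 12GB: $700 below the RTX 4090
```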

4080 Price Outpaces x80 Prices From Previous Generations

x80 Sticker Shock

Some enthusiasts might take issue with Nvidia using the x80 branding on GPUs that are clearly inferior to the x90 by a large margin. The other issue is that the prices are not what we would typically pay for an x80 GPU. The average price of a GTX/RTX x80 GPU over the past 12 years has been $599.99, so the 4080 16GB and 4080 12GB selling for $1,199 and $899, respectively, is a large increase for gamers. This is especially true given that inflation has hit its highest level in decades this year. Nvidia has slowly raised x80 prices over the years with very few decreases, and at this point you won’t see many (or any) decreases moving forward. The 2000 and 3000 series saw the RTX 2080/3080 release at $699 (MSRP), so a $200 (4080 12GB) to $500 (4080 16GB) increase for an x80 GPU is pretty tough for the average consumer, especially knowing that it is clearly not the flagship and isn’t anywhere near the x90 this time around. Most people would probably just wait and see how the 4080 “Ti” performs when it releases. One thing we must not forget is that many people had no problem spending $1,200 to $1,800+ on an RTX 3080/3090 during the pandemic and shortages. So while it is easy to blame Nvidia, we cannot act like gamers and enthusiasts didn’t have a hand in this. A lot of things have happened, and are happening right now, that will dictate how companies react to the market.
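
To put the sticker shock in numbers, this Python sketch compares each RTX 4080 MSRP against both reference points used above: the $699 launch price of the RTX 2080/3080 and the $599.99 twelve-year x80 average. Both reference figures come from this article; the constant and function names are illustrative.

```python
PREVIOUS_X80_MSRP = 699.0   # RTX 2080 / RTX 3080 launch MSRP, per the article
AVERAGE_X80_MSRP = 599.99   # the article's 12-year average x80 price


def x80_increase(msrp: float) -> tuple[float, float]:
    """Return (dollars over the $699 x80 MSRP, percent above the 12-year average)."""
    return msrp - PREVIOUS_X80_MSRP, (msrp / AVERAGE_X80_MSRP - 1) * 100


for name, msrp in [("RTX 4080 12GB", 899.0), ("RTX 4080 16GB", 1199.0)]:
    dollars, pct = x80_increase(msrp)
    print(f"{name}: +${dollars:.0f} vs $699, {pct:.0f}% above the average")
# RTX 4080 12GB: +$200 vs $699, 50% above the average
# RTX 4080 16GB: +$500 vs $699, 100% above the average
```

Measured against the long-run average, the RTX 4080 16GB costs roughly twice what an x80 card has typically cost.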

RTX 4080 12GB doesn’t use the same GPU die as the RTX 4080 16GB

The RTX 4090 uses the AD102 GPU die and the RTX 4080 16GB uses the AD103 die. The final straw for some gamers and enthusiasts is that the RTX 4080 12GB uses the smaller xx104 (AD104) die. Using the RTX 3000 series as a reference, the 3080 used the same GPU die as the 3090 (GA102), with the x80 obviously cut down from the x90 die, while the RTX 3070 used the xx104 (GA104) die. This time around Nvidia is releasing the RTX 4080 12GB on the xx104 (AD104) die, which is usually reserved for x70-class GPUs (RTX 3070, RTX 2070 Super). Nvidia is selling a GPU named as an x80 tier while it uses an x70-class die (xx104), yet pricing it much higher than the previous x80 GPU, as I explained earlier. This wouldn’t be the first time Nvidia has tried this and gotten away with it. Several years ago Nvidia released the GTX 1080 on the xx104 (GP104) die, the same die used by the GTX 1070 (the 1070 obviously had lower specs, fewer SMs, etc.). Ten months later the GTX 1080 Ti was released on the larger xx102 (GP102) die. I remember a few conversations about this on a small scale in enthusiast communities, but it did nothing to stop GTX 1080s (xx104) from flying off the shelves.

Now the Good Stuff

The RTX 4090 appears to be the best GPU for content creators and professionals. Last generation the 3080 performed well, but it’s clear that the RTX 4090 will be the GPU to buy for professional use. Nvidia has upgraded its unique and very nice-looking Founders Edition design for the 4000 series. The fans will be slightly larger for better airflow, and the power delivery has been upgraded to 23 phases. The 4000 series will use the new 16-pin connector, which can be fed from up to four 8-pin power connectors. Only three 8-pin connections are required; the fourth allows for higher overclocks. This new 16-pin connector is the one used by the ATX 3.0 power supplies that are coming to market in a few weeks. The AD10x micro-architecture will have some very nice features for content creators, streamers and professionals. Nvidia’s superb encoder (NVENC) is getting an upgrade that includes dual AV1 encoders. This will be important for streamers because Nvidia is claiming up to a 40% streaming-efficiency increase over the current RTX 3000 series. Software will be updated to take advantage of the new dual AV1 encoders, which should keep FPS high and streams looking great and running smoothly. The best thing is that picture quality should get even better at the same or lower bitrates than we use today, which means smaller file sizes and less bandwidth. Gamers will be able to record gameplay at up to 8K at 60FPS. For content creators, hobbyists and professionals, the dual AV1 encoders/decoders should allow workloads to complete up to two times faster than on the RTX 3080. The RTX 3000 series is capable of AV1 decoding but not AV1 encoding; the RTX 4090/4080 will support both. This was previously only seen in Nvidia’s enterprise-grade GPUs. The CUDA compute capability is also being upgraded from 8.6 (Ampere) to 8.9 (Ada). DLSS 3 is only available to RTX 4000 users.
DLSS 3 and its Optical Flow Accelerator allow the GPU to generate entire new frames, rather than only upscaling pixels, for the final output that we see on-screen. This could also mean less denoising in future titles and sharper results. DLSS 3 will also increase framerates and improve frametimes, which means that even if you are CPU-bottlenecked, the GPU will do most of the heavy lifting. Another thing to note is that Nvidia is still using the older DisplayPort 1.4a standard, which definitely matters for certain professional workloads. For the record, Nvidia has been using DisplayPort 1.4a since 2016 (the GTX 1080 was officially certified for DP 1.2 but could use the DP 1.4 standard). For the average gamer this probably won’t matter as much, since they are usually only chasing framerates and frametimes. It is also possible that Nvidia will update the DisplayPort standard on the enterprise GPUs based on Ada Lovelace, but there are many more important variables than the DisplayPort standard when it comes to professional workloads. Regardless of what Nvidia does at the enterprise level, DP 1.4a is still capable of some very impressive resolutions and framerates (8K @ 60FPS / 4K @ 120FPS). HDMI stays at the 2.1 generation used by the RTX 3000 series (listed as HDMI 2.1a for the RTX 4000 series), which also offers 8K @ 60FPS and 4K @ 120FPS.
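
A quick way to sanity-check display-link figures is to compare the uncompressed pixel data rate against the link’s usable bandwidth. The Python sketch below is a rough estimate under stated assumptions: DisplayPort 1.4a at HBR3 (4 lanes × 8.1 Gbps with 8b/10b encoding, about 25.92 Gbps of payload), 8-bit RGB (24 bits per pixel), and no blanking overhead, so real requirements are somewhat higher. Under those assumptions 4K @ 120FPS fits uncompressed, while 8K @ 60FPS relies on Display Stream Compression (DSC).

```python
# Assumed DisplayPort 1.4a HBR3 link parameters.
HBR3_LANE_GBPS = 8.1
LANES = 4
ENCODING_EFFICIENCY = 8 / 10  # 8b/10b line coding
DP14_PAYLOAD_GBPS = HBR3_LANE_GBPS * LANES * ENCODING_EFFICIENCY  # ~25.92


def pixel_rate_gbps(width: int, height: int, fps: int, bits_per_pixel: int = 24) -> float:
    """Uncompressed active-pixel data rate in Gbps (blanking intervals ignored)."""
    return width * height * fps * bits_per_pixel / 1e9


for label, (w, h, fps) in {"4K @ 120": (3840, 2160, 120),
                           "8K @ 60": (7680, 4320, 60)}.items():
    need = pixel_rate_gbps(w, h, fps)
    verdict = "fits uncompressed" if need <= DP14_PAYLOAD_GBPS else "needs DSC"
    print(f"{label}: {need:.1f} Gbps -> {verdict}")
# 4K @ 120: 23.9 Gbps -> fits uncompressed
# 8K @ 60: 47.8 Gbps -> needs DSC
```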

Conclusion

Nvidia is placing the RTX 4090 in a league of its own in terms of specifications, price and performance. As I stated earlier in the article, Nvidia does not want the RTX x80 anywhere near the x90 GPU this time around. It’s clear that the RTX 4090 is aimed at prosumers, professionals, content creators and those who need more than ordinary gaming performance. The Titan is a thing of the past and the RTX x90 is filling that gap; Nvidia has priced it accordingly for those who don’t want to buy a much more expensive enterprise Nvidia GPU for professional use. Nvidia claims that the RTX 4090 is two times faster than the RTX 3090 Ti and that the RTX 4080 is twice as fast as the RTX 3080 Ti. Nvidia set the stage with the RTX 3080/3080 Ti and 3090/3090 Ti and is now raising the price of the upcoming RTX 4080 16GB/12GB. Nvidia appears to be positioning its tiers and future tech within a window it has carefully planned over the past several years. The only way to upset this plan is for AMD to do the unthinkable and match Nvidia with competitive prices and performance; however, this is highly unlikely. AMD is in a prime position to undercut Nvidia, but I don’t think AMD will be able to do it in the current economy. Based on its current pricing, Nvidia must feel very comfortable with anything AMD will release. Nvidia has also given itself a nice cushion for the inevitable RTX 4080 “Ti” release regardless of what AMD does. Hopefully AMD has an answer to the RTX 4000 series’ impressive performance increase. Even though the RTX 4080 16GB has scaled back some of the memory configuration, it will perform very well against the current RTX 3080 and RTX 3080 Ti. Other GPU hardware has been increased based on the info I have seen (SMs, ROPs, RT cores, L2 cache, etc.), but current RTX 3080 users will definitely be taking a 20% decrease in memory bus width and a 6% decrease in memory bandwidth.
This was obviously done to keep the tiers in check. It is worth noting that the RTX 4080 16GB’s memory is clocked about 18% higher than the (stock) RTX 3080’s (22.4 Gbps vs. 19 Gbps). As for the RTX 4080 12GB, Nvidia appears to have done the opposite: based on the information I have seen so far, you get fewer SMs, ROPs and RT cores than on the RTX 3080, but the RTX 4080 12GB should still outperform the RTX 3080 easily thanks to the updated micro-architecture and its much larger L2 cache. The RTX 3080’s memory bus is 67% wider and its memory bandwidth 51% higher than the RTX 4080 12GB’s; put the other way, the RTX 4080 12GB has a 40% narrower bus and 34% less bandwidth. Depending on their workloads, current RTX 3080 users will need to decide whether losing that memory bandwidth is worth it. The RTX 4080 12GB’s memory is also clocked higher than the 3080’s, so while it will have lower memory bandwidth, it should still perform well against the RTX 3080.